Matrix multiplication

Suppose we are given two matrices \[ A = \left[ \begin{array}{cc} a&b \nl c&d \end{array} \right], \qquad B = \left[ \begin{array}{cc} e&f \nl g&h \end{array} \right], \] and we want to multiply them together.

Unlike matrix addition and subtraction, matrix products are not performed element-wise: \[ \left[ \begin{array}{cc} a&b \nl c&d \end{array} \right] \left[ \begin{array}{cc} e&f \nl g&h \end{array} \right] \neq \left[ \begin{array}{cc} ae&bf \nl cg&dh \end{array} \right]. \]

Instead, the matrix product is computed by taking the dot product of each row of the matrix on the left with each of the columns of the matrix on the right: \[ \begin{align*} \begin{array}{c} \begin{array}{c} \vec{r}_1 \nl \vec{r}_2 \end{array} \left[ \begin{array}{cc} a & b \nl c & d \end{array} \right] \nl \ \end{array} \begin{array}{c} \left[ \begin{array}{cc} e&f \nl g&h \end{array} \right] \nl {\vec{c}_1} \ \ {\vec{c}_2} \end{array} & \begin{array}{c} = \nl \ \end{array} \begin{array}{c} \left[ \begin{array}{cc} \vec{r}_1 \cdot \vec{c}_1 & \vec{r}_1 \cdot \vec{c}_2 \nl \vec{r}_2 \cdot \vec{c}_1 & \vec{r}_2 \cdot \vec{c}_2 \end{array} \right] \nl \ \end{array} \nl & = \left[ \begin{array}{cc} ae+ bg & af + bh \nl ce + dg & cf + dh \end{array} \right]. \end{align*} \] Recall that the dot product between two vectors $\vec{v}$ and $\vec{w}$ is given by $\vec{v}\cdot \vec{w} \equiv \sum_i v_iw_i$.
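The row-times-column rule can be sketched in a few lines of Python. This is only an illustration, assuming matrices are stored as lists of rows; the helper names `dot` and `matmul` and the sample numbers are made up for this example and do not come from any library.

```python
def dot(v, w):
    """Dot product of two equal-length sequences: sum of v_i * w_i."""
    if len(v) != len(w):
        raise ValueError("dot product needs vectors of equal length")
    return sum(vi * wi for vi, wi in zip(v, w))


def matmul(A, B):
    """Matrix product of A and B, each stored as a list of rows."""
    columns_of_B = list(zip(*B))  # transposing B gives its columns
    # Entry (i, j) is the dot product of row i of A with column j of B.
    return [[dot(row, col) for col in columns_of_B] for row in A]


# Hypothetical sample matrices, chosen only for illustration.
A = [[1, 2],
     [0, 1]]
B = [[3, 0],
     [4, 5]]

product = matmul(A, B)
# The element-wise product, shown only to contrast with the matrix product.
elementwise = [[a * b for a, b in zip(row_a, row_b)]
               for row_a, row_b in zip(A, B)]

print(product)      # [[11, 10], [4, 5]]
print(elementwise)  # [[3, 0], [0, 5]]
```

The two printed results differ, which is the point of the inequality above: the matrix product mixes rows with columns instead of pairing entries position by position.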

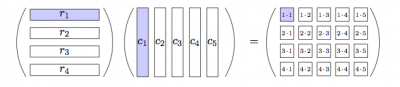

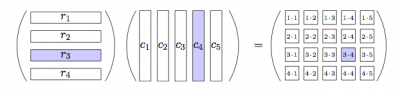

Let's now look at a picture which shows how to compute the product of a matrix with four rows and a matrix with five columns.

The top left entry of the product is computed by taking the dot product of the first row of the matrix on the left and the first column of the matrix on the right:

Similarly, the entry on the third row and fourth column of the product is computed by taking the dot product of the third row of the matrix on the left and the fourth column of the matrix on the right:

Note that the length of each row of the matrix on the left (its number of columns) must equal the length of each column of the matrix on the right (its number of rows) for the product to be well defined.
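Here is a minimal sketch of computing a single entry of a product, with the size check made explicit. The text only says the left matrix has four rows and the right matrix has five columns; the inner size of 3, the entries, and the helper name `product_entry` are assumptions made for this example.

```python
def product_entry(A, B, i, j):
    """Entry in row i, column j of AB (1-based indices, as in the text)."""
    n_cols_A = len(A[0])  # length of each row of A
    n_rows_B = len(B)     # length of each column of B
    if n_cols_A != n_rows_B:
        raise ValueError(
            f"cannot multiply: A has {n_cols_A} columns but B has {n_rows_B} rows"
        )
    row = A[i - 1]
    col = [B_row[j - 1] for B_row in B]
    return sum(r * c for r, c in zip(row, col))


# A is 4x3 and B is 3x5 (the inner size 3 is an assumption).
A = [[1, 0, 2],
     [0, 1, 0],
     [1, 1, 1],
     [2, 0, 1]]
B = [[1, 2, 3, 4, 5],
     [0, 1, 0, 1, 0],
     [1, 0, 1, 0, 1]]

top_left = product_entry(A, B, 1, 1)      # first row of A with first column of B
third_fourth = product_entry(A, B, 3, 4)  # third row of A with fourth column of B
print(top_left, third_fourth)  # 3 5

# Swapping the order fails: B has 5 columns but A has only 4 rows.
try:
    product_entry(B, A, 1, 1)
except ValueError as err:
    print(err)
```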

Matrix multiplication rules

- Matrix multiplication is associative:

\[ (AB)C = A(BC) = ABC. \]

- The “touching” dimensions of the matrices must be the same. For the triple product $ABC$ to exist, the number of columns of $A$ must be equal to the number of rows of $B$, and the number of columns of $B$ must equal the number of rows of $C$.

- Given two matrices $A \in \mathbb{R}^{m\times n}$ and $B \in \mathbb{R}^{n\times k}$, the matrix product $AB$ will be an $m \times k$ matrix.

- The matrix product is *not commutative*: in general, $AB \neq BA$.

![Matrix multiplication is not commutative.](linear_algebra/linear_algebra--matrix_multiplication_not_commutative.png)
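These rules can be checked numerically on small examples. The sketch below assumes matrices stored as lists of rows; `matmul`, `shape`, and the sample matrices are hypothetical names and values chosen for illustration. A check on one example is a sanity test, not a proof.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    if len(A[0]) != len(B):
        raise ValueError("touching dimensions differ")
    cols = list(zip(*B))  # columns of B
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


def shape(M):
    """(number of rows, number of columns) of a matrix."""
    return (len(M), len(M[0]))


A = [[1, 2, 0],
     [0, 1, 1]]   # 2x3
B = [[1, 0],
     [2, 1],
     [0, 3]]      # 3x2
C = [[0, 1],
     [1, 0]]      # 2x2

# Associativity: the grouping of the products does not matter.
left = matmul(matmul(A, B), C)
right = matmul(A, matmul(B, C))
print(left == right)        # True

# Shapes: (m x n) times (n x k) gives (m x k).
print(shape(matmul(A, B)))  # (2, 2)
# Reversing the order changes even the shape of the result.
print(shape(matmul(B, A)))  # (3, 3)
```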

Explanations

Why is matrix multiplication defined like this? We will learn about this in more depth in the linear transformations section, but I don't want you to live in suspense until then, so I will tell you right now. You can think of multiplying some column vector $\vec{x} \in \mathbb{R}^n$ by a matrix $A \in \mathbb{R}^{m\times n}$ as analogous to applying the “vector function” $A$ to the vector input $\vec{x}$ to obtain a vector $\vec{y} \in \mathbb{R}^m$: \[ A: \mathbb{R}^n \to \mathbb{R}^m. \] Applying the vector function $A$ to the input $\vec{x}$ is the same as computing the matrix-vector product $A\vec{x}$: \[ \textrm{for all } \vec{x} \in \mathbb{R}^n, \quad A\!\left(\vec{x}\right) \equiv A\vec{x}. \] Any linear function from $\mathbb{R}^n$ to $\mathbb{R}^m$ can be described as a matrix product by some matrix $A \in \mathbb{R}^{m\times n}$.
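As a small worked instance, take the $2\times 2$ matrix $A$ from the beginning of this section and a two-component input, written here as $\vec{x} = (x_1, x_2)$ (the component names are chosen only for illustration): \[ A\vec{x} = \left[ \begin{array}{cc} a&b \nl c&d \end{array} \right] \left[ \begin{array}{c} x_1 \nl x_2 \end{array} \right] = \left[ \begin{array}{c} ax_1 + bx_2 \nl cx_1 + dx_2 \end{array} \right]. \] Each component of the output is the dot product of one row of $A$ with $\vec{x}$, which is the same row-times-column rule used for the matrix product.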

Okay, so what if you have some vector and you want to apply two linear operations to it? With functions, we call this function composition and we use a little circle to denote it: \[ z = g(f(x)) = g\circ f\:(x), \] where $g\circ f\;(x) $ means that you should apply $f$ to $x$ first to obtain some intermediary value $y$, and then you apply $g$ to $y$ to get the final output $z$. The notation $g \circ f$ is useful when you don't want to talk about the intermediary variable $y$ and you are interested in the overall functional relationship between $x$ and $z$. For example, we can define $h \equiv g\circ f$ and then talk about the properties of the function $h$.

With matrices, $B\circ A$ (applying $A$ then $B$) is equal to applying the product matrix $BA$: \[ \vec{z} = B\!\left( A(\vec{x}) \right) = (BA) \vec{x}. \] Similar to the case with functions, we can describe the overall map from $\vec{x}$'s to $\vec{z}$'s by a single entity $M\equiv BA$, and not only that, but we can even compute $M$ by taking the product of $B$ and $A$. So matrix multiplication turns out to be a very useful computational tool. You probably wouldn't have guessed this, given how tedious and boring the actual act of multiplying matrices is. But don't worry, you just have to multiply a couple of matrices by hand to learn how multiplication works. Most of the time, you will let computers multiply matrices for you. They are good at this kind of shit.
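The identity $B(A(\vec{x})) = (BA)\vec{x}$ can be checked on a concrete example. The sketch below assumes list-of-rows matrices; `matvec`, `matmul`, and the particular choice of $A$ (a stretch along the first coordinate) and $B$ (a quarter-turn rotation) are illustrative assumptions, not part of the original text.

```python
def matvec(M, v):
    """Matrix-vector product: one dot product per row of M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def matmul(A, B):
    """Matrix product of two matrices stored as lists of rows."""
    cols = list(zip(*B))  # columns of B
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]


A = [[2, 0],
     [0, 1]]    # stretches the first coordinate by 2
B = [[0, -1],
     [1, 0]]    # rotates by 90 degrees counterclockwise
x = [1, 1]

# Two steps: apply A first, then B.
z_two_steps = matvec(B, matvec(A, x))

# One step: build M = BA once, then apply it.
M = matmul(B, A)
z_one_step = matvec(M, x)

print(M)            # [[0, -1], [2, 0]]
print(z_two_steps)  # [-1, 2]
print(z_one_step)   # [-1, 2]
```

Computing $M$ once pays off when the same pair of operations has to be applied to many input vectors.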

This perspective on matrices as linear transformations (functions on vectors) will also allow you to understand why matrix multiplication is not commutative. In general $BA \neq AB$ (non-commutativity of matrices), just the same way there is no reason to expect that $f \circ g$ will equal $g \circ f$ for two arbitrary functions.
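To make this concrete, here is one illustrative pair (chosen for this example, not taken from the text above): let $A$ stretch the first coordinate by a factor of $2$, and let $B$ rotate vectors by $90^\circ$ counterclockwise, \[ A = \left[ \begin{array}{cc} 2&0 \nl 0&1 \end{array} \right], \qquad B = \left[ \begin{array}{cc} 0&-1 \nl 1&0 \end{array} \right]. \] Multiplying in the two possible orders gives \[ BA = \left[ \begin{array}{cc} 0&-1 \nl 2&0 \end{array} \right], \qquad AB = \left[ \begin{array}{cc} 0&-2 \nl 1&0 \end{array} \right], \] so $BA \neq AB$: stretching and then rotating is not the same operation as rotating and then stretching.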

Exercises

Basics

Compute the product \[ \left[ \begin{array}{cc} 1&2 \nl 3&4 \end{array} \right] \left[ \begin{array}{cc} 5&6 \nl 7&8 \end{array} \right] = \left[ \begin{array}{cc} \ \ \ & \ \ \ \nl \ \ \ & \ \ \ \end{array} \right] \]

Ans: $\left[ \begin{array}{cc} 19&22 \nl 43&50 \end{array} \right]$
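One way to check this answer is to write out each entry as the dot product of a row of the left matrix with a column of the right matrix: \[ \left[ \begin{array}{cc} 1\cdot 5 + 2\cdot 7 & 1\cdot 6 + 2\cdot 8 \nl 3\cdot 5 + 4\cdot 7 & 3\cdot 6 + 4\cdot 8 \end{array} \right] = \left[ \begin{array}{cc} 5 + 14 & 6 + 16 \nl 15 + 28 & 18 + 32 \end{array} \right] = \left[ \begin{array}{cc} 19&22 \nl 43&50 \end{array} \right]. \]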