Definitions

Calculus is the study of functions $f(x)$ over the real numbers $\mathbb{R}$: \[ f: \mathbb{R} \to \mathbb{R}. \] The function $f$ takes as input some number, usually called $x$ and gives as output another number $f(x)=y$. You are familiar with many functions and have used them in many problems.

In this chapter we will learn about different operations that can be performed on functions. It is worth understanding these operations because of their numerous applications.

Differential calculus

Differential calculus is all about derivatives:

- $f'(x)$: the derivative of $f(x)$ is the rate of change of $f$ at $x$.

The derivative is also a function of the form

\[

f': \mathbb{R} \to \mathbb{R}.

\]

The output of $f'(x)$ represents the //slope// of

the line tangent to $f$ at the point $(x,f(x))$.

Integral calculus

Integral calculus is all about integration:

- $\int_a^b f(x)\:dx$: the integral of $f(x)$ from $x=a$ to $x=b$

corresponds to the area under $f(x)$ between $a$ and $b$:

\[

A(a,b) = \int_a^b f(x) \: dx.

\]

The $\int$ sign is a mnemonic for //sum//.

The integral is the "sum" of $f(x)$ over that interval.

* $F(x)=\int f(x)\:dx$: the anti-derivative of the function $f(x)$

contains the information about the area under the curve for

//all// limits of integration.

The area under $f(x)$ between $a$ and $b$ is computed as the

difference between $F(b)$ and $F(a)$:

\[

A(a,b) = \int_a^b f(x)\;dx = F(b)-F(a).

\]

Sequences and series

Functions are usually defined for continuous inputs $x\in \mathbb{R}$, but there are also functions which are defined only for natural numbers $n \in \mathbb{N}$. Sequences are the discrete analogue of functions.

- $a_n$: sequence of numbers $\{ a_0, a_1, a_2, a_3, a_4, \ldots \}$.

You can think about each sequence as a function

\[

a: \mathbb{N} \to \mathbb{R},

\]

where the input $n$ is an integer (index into the sequence) and

the output is $a_n$ which could be any number.

The analogue of the integral for a sequence is called a series.

- $\sum$: sum.

The summation sign is the short way to express

the sum of several objects:

\[

a_3 + a_4 + a_5 + a_6 + a_7

\equiv \sum_{3 \leq i \leq 7} a_i

\equiv \sum_{i=3}^{7} a_i.

\]

Note that summations could go up to infinity.

* $\sum a_i$: the series corresponds to the running total of a sequence until $n$:

\[

S_n = \sum_{i=1}^{n} a_i = a_1 + a_2 + \cdots + a_{n-1} + a_n.

\]

* $f(x)=\sum_{i=0}^\infty a_i x^i$: a //power series// is a series

which contains powers of some variable $x$.

Power series give us a way to express many functions $f(x)$ as

an infinitely long polynomial.

For example, the power series of $\sin(x)$ is

\[

\sin(x)

= x - \frac{x^3}{3!} + \frac{x^5}{5!}

- \frac{x^7}{7!} + \frac{x^9}{9!}+ \ldots.

\]
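
To make the idea of an "infinitely long polynomial" concrete, here is a small numerical sketch. The function name `sin_series` and the test point $x=1$ are my own choices for illustration; the code simply adds up the first few terms of the series above and compares the result with Python's built-in sine.

```python
import math

def sin_series(x, terms):
    """Partial sum of x - x^3/3! + x^5/5! - ... using `terms` terms."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k * x ** (2 * k + 1) / math.factorial(2 * k + 1)
    return total

x = 1.0  # illustrative input, chosen arbitrarily
for terms in (1, 2, 3, 5):
    # columns: number of terms, partial sum, math.sin for comparison
    print(terms, sin_series(x, terms), math.sin(x))
```

The more terms we keep, the closer the partial sum should get to $\sin(x)$.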

Don't worry if you don't understand all the notions and the new notation in the above paragraphs. I just wanted to present all the calculus actors in the first scene. We will talk about each of them in more detail in the following sections.

Limits

Actually, we have not mentioned the main actor yet: the limit. In calculus, we do a lot of limit arguments in which we take some positive number $\epsilon>0$ and we make it progressively smaller and smaller:

- $\displaystyle\lim_{\epsilon \to 0}$: the mathematically rigorous

way of saying that the number $\epsilon$ becomes smaller and smaller. We can also take limits to infinity, that is, we imagine some number $N$ and we make that number bigger and bigger:

- $\displaystyle\lim_{N \to \infty}$: the mathematical

way of saying that the number $N$ will get larger and larger.

Indeed, it wouldn't be wrong to say that calculus is the study of the infinitely small and the infinitely many. Working with infinitely small quantities and infinitely large numbers can be tricky business, but it is extremely important that you become comfortable with the concept of a limit, which is the rigorous way of talking about infinity. Before we learn about derivatives, integrals and series we will spend some time learning about limits.

Infinity

Let's say you have a length $\ell$ and you want to divide it into infinitely many, infinitely short segments. There are infinitely many of them, but they are infinitely short so they add up to the total length $\ell$.

OK, that sounds complicated. Let's start from something simpler. We have a piece of length $\ell$ and we want to divide this length into $N$ pieces. Each piece will have length: \[ \delta = \frac{\ell}{N}. \] Let's check that, together, the $N$ pieces of length $\delta$ add up to the total length of the string: \[ N \delta = N \frac{\ell}{N} = \ell. \] Good.

Now imagine that $N$ is a very large number. In fact it can take on any value, but always larger and larger. The larger $N$ gets, the more fine grained the notion of “small piece of string” becomes. In this case we would have: \[ \lim_{N\to \infty} \delta = \lim_{N\to \infty} \frac{\ell}{N} = 0, \] so effectively the pieces of string are infinitely small. However, when you add them up you will still get: \[ \lim_{N\to \infty} \left( N \delta \right) = \lim_{N\to \infty} \left( N \frac{\ell}{N} \right) = \ell. \]

The lesson to learn here is that, if you keep things well defined you can use the notion of infinity in your equations. This is the central idea of this course.

Infinitely large

The number $\infty$ is really large. How large? Larger than any number you can think of! Say you think of a number $n$, then it is true that $\infty > n$. But no, you say, actually I thought of a different number $N > n$, well still it will be true that $\infty > N$. In fact any finite number you can think of, no matter how large will always be strictly smaller than $\infty$.

Infinitely small

If instead of a really large number, we want to have a really small number $\epsilon$, we can simply define it as the reciprocal of (one over) a really large number $N$: \[ \epsilon = \lim_{N \to \infty \atop N \neq \infty} \frac{1}{N}. \] However small $\epsilon$ gets, it remains strictly greater than zero: $\epsilon > 0$. This is ensured by the condition $N\neq \infty$; otherwise we would have $\lim_{N \to \infty} \frac{1}{N} = 0$.

The infinitely small $\epsilon>0$ is a new beast like nothing you have seen before. It is a non-zero number that is smaller than any number you can think of. Say you think $0.00001$ is pretty small, well it is true that $0.00001 > \epsilon > 0$. Then you say, no actually I was thinking about $10^{-16}$, a number with 15 zeros after the decimal point. It will still be true that $10^{-16} > \epsilon$, or even $10^{-123} > \epsilon > 0$. Like I said, I can make $\epsilon$ smaller than any number you can think of simply by choosing $N$ to be larger and larger, yet $\epsilon$ always remains non-zero.

Infinity for limits

When evaluating a limit, we often make the variable $x$ go to infinity. This is useful, for example, if we want to know what the function $f(x)$ looks like for very large values of $x$. Does it get closer and closer to some finite number, or does it blow up? For example, the negative-power exponential function tends to zero for large values of $x$: \[ \lim_{x \to \infty} e^{-x} = 0. \] In the examples above we saw that the inverse-$x$ function also tends to zero: \[ \lim_{x \to \infty} \frac{1}{x} = 0. \]

Note that in both cases, the functions will never actually reach zero. They get closer and closer to zero but never actually reach it. This is why the limit is a useful quantity, because it says that the functions get arbitrarily close to 0.

Sometimes infinity might come out as an answer to a limit question: \[ \lim_{x\to 3^-} \frac{1}{3-x} = \infty, \] because as $x$ gets closer to $3$ from below, i.e., $x$ will take on values like $2.9$, $2.99$, $2.999$, and so on and so forth, the number in the denominator will get smaller and smaller, thus the fraction will get larger and larger.

Infinity for derivatives

The derivative of a function is its slope, defined as the “rise over run” for an infinitesimally short run: \[ f'(x) = \lim_{\epsilon \to 0} \frac{\text{rise}}{\text{run}} = \lim_{\epsilon \to 0} \frac{f(x+\epsilon)\ - \ f(x)}{x+\epsilon \ - \ x}. \]

Infinity for integrals

The area under the curve $f(x)$ for values of $x$ between $a$ and $b$ can be thought of as consisting of many little rectangles of width $\epsilon$ and height $f(x)$: \[ \epsilon f(a) + \epsilon f(a+\epsilon) + \epsilon f(a+2\epsilon) + \cdots + \epsilon f(b-\epsilon). \] In the limit where we take infinitesimally small rectangles, we obtain the exact value of the integral: \[ \int_a^b f(x) \ dx= A(a,b) = \lim_{\epsilon \to 0}\left[ \epsilon f(a) + \epsilon f(a+\epsilon) + \epsilon f(a+2\epsilon) + \cdots + \epsilon f(b-\epsilon) \right]. \]
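
The rectangle sum above is easy to try out numerically. The following is a minimal sketch, not a serious integration routine: the helper name `riemann_sum` and the test function $f(x)=x^2$ on $[0,1]$ are my own illustrative choices. The exact area in that case is $\frac{1}{3}$, since $F(x)=\frac{x^3}{3}$ is an anti-derivative of $x^2$.

```python
def riemann_sum(f, a, b, n):
    """Left-endpoint sum: eps*f(a) + eps*f(a+eps) + ... + eps*f(b-eps)."""
    eps = (b - a) / n  # width of each rectangle
    return sum(eps * f(a + k * eps) for k in range(n))

def f(x):
    return x ** 2  # illustrative function, exact area on [0, 1] is 1/3

for n in (10, 100, 1000, 10000):
    print(n, riemann_sum(f, 0.0, 1.0, n))
```

As the number of rectangles $n$ grows (so $\epsilon$ shrinks), the printed values should creep up towards $\frac{1}{3}$.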

Infinity for series

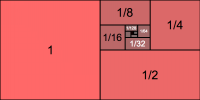

For a given $|r|<1$, what is the sum \[ S = 1 + r + r^2 + r^3 + r^4 + \ldots = \sum_{k=0}^\infty r^k \ \ ? \] Obviously, taking your calculator and performing the summation is not practical since there are infinitely many terms to add.

For several such infinite series, there is actually a closed form formula for their sum. The above series is called the geometric series and its sum is $S=\frac{1}{1-r}$. How were we able to tame the infinite? In this case, we used the fact that $S$ is similar to a shifted version of itself $S=1+rS$, and then solved for $S$.
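
If you want to see the closed-form formula at work, you can add up a finite number of terms and watch the total approach $\frac{1}{1-r}$. This is only a sketch: the name `geometric_partial_sum` and the value $r=0.5$ are assumptions I picked for the illustration.

```python
def geometric_partial_sum(r, n):
    """Return 1 + r + r^2 + ... + r^n."""
    total, term = 0.0, 1.0
    for _ in range(n + 1):
        total += term
        term *= r  # next power of r
    return total

r = 0.5  # any |r| < 1 would do
for n in (5, 10, 20, 50):
    # columns: n, partial sum, closed-form value 1/(1-r)
    print(n, geometric_partial_sum(r, n), 1 / (1 - r))
```

No calculator could add infinitely many terms, but a modest number of terms already gets very close to the limit.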

Limits

To understand the ideas behind derivatives and integrals, you need to understand what a limit is and how to deal with the infinitely small, infinitely large and the infinitely many. In practice, using calculus doesn't actually involve taking limits since we will learn direct formulas and algebraic rules that are more convenient than doing limits. Do not skip this section though just because it is “not on the exam”. If you do so, you will not know what I mean when I write things like $0,\infty$ and $\lim$ in later sections.

Introduction in three acts

Zeno's paradox

The ancient Greek philosopher Zeno once came up with the following argument. Suppose an archer shoots an arrow and sends it flying towards a target. After some time it will have travelled half the distance, and then at some later time it will have travelled half of the remaining distance, and so on, always getting closer to the target. Zeno observed that no matter how little distance remains to the target, there will always be some later instant when the arrow will have travelled half of that distance. Thus, he reasoned, the arrow must keep getting closer and closer to the target, but never reaches it.

Zeno, my brothers and sisters, was making some sort of limit argument, but he didn't do it right. We have to commend him for thinking about such things centuries before calculus was invented (17th century), but we shouldn't repeat his mistake. We had better learn how to take limits, because limits are important. I mean, a wrong argument about limits could get you killed, for God's sake! Imagine if Zeno had tried to verify his theory about the arrow experimentally by placing himself in front of one such arrow!

Two monks

Two young monks were sitting in silence in a Zen garden one autumn afternoon.

“Can something be so small as to become nothing?” asked one of the monks, breaking the silence.

“No,” replied the second monk, “if it is something then it is not nothing.”

“Yes, but what if no matter how close you look you cannot see it, yet you know it is not nothing?”, asked the first monk, desiring to see his reasoning to the end.

The second monk didn't know what to say, but then he found a counterargument. “What if, though I cannot see it with my naked eye,

I could see it using a magnifying glass?”.

The first monk was happy to hear this question, because he had already prepared a response for it. “If I know that you will be looking with a magnifying glass, then I will make it so small that you cannot see it with your magnifying glass.”

“What if I use a microscope then?”

“I can make the thing so small that even with a microscope you cannot see it.”

“What about an electron microscope?”

“Even then, I can make it smaller, yet still not zero.” said the first monk victoriously and then proceeded to add “In fact, for any magnifying device you can come up with, you just tell me the resolution and I can make the thing smaller than can be seen”.

They went back to concentrating on their breathing.

Epsilon and delta

The monks have the right reasoning but didn't have the right language to express what they mean. Zeno has the right language, the wonderful Greek language with letters like $\epsilon$ and $\delta$, but he didn't have the right reasoning. We need to combine aspects of both of the above stories to understand limits.

Let's analyze first Zeno's paradox. The poor brother didn't know about physics and the uniform velocity equation of motion. If an object is moving with constant speed $v$ (we ignore the effects of air friction on the arrow), then its position $x$ as a function of time is given by \[ x(t) = vt+x_i, \] where $x_i$ is the initial location where the object starts from at $t=0$. Suppose that the archer who fired the arrow was at the origin $x_i=0$ and that the target is at $x=L$ metres. The arrow will hit the target exactly at $t=L/v$ seconds. Shlook!

It is true that there are times when the arrow will be $\frac{1}{2}$, $\frac{1}{4}$, $\frac{1}{8}$, $\frac{1}{16}$, and so forth, of the distance from the target. In fact there are infinitely many of those fractional time instants before the arrow hits, but that is beside the point. Zeno's misconception is that he thought that these infinitely many timestamps couldn't all fit in the timeline since it is finite. No such problem exists though. Any non-zero interval on the number line contains infinitely many numbers ($\mathbb{Q}$ or $\mathbb{R}$).

Now let's get to the monks' conversation. The first monk was talking about the function $f(x)=\frac{1}{x}$. This function becomes smaller and smaller but it never actually becomes zero: \[ \frac{1}{x} \neq 0, \textrm{ even for very large values of } x, \] which is what the monk told us.

Remember that the monk also claimed that the function $f(x)$ can be made arbitrarily small. He wants to show that, in the limit of large values of $x$, the function $f(x)$ goes to zero. Written in math this becomes \[ \lim_{x\to \infty}\frac{1}{x}=0. \]

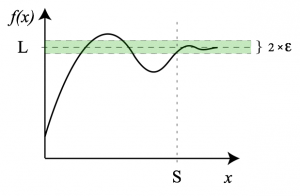

To convince the second monk that he can really make $f(x)$ arbitrarily small, he invents the following game. The second monk announces a precision $\epsilon$ at which he will be convinced. The first monk then has to choose an $S_\epsilon$ such that for all $x > S_\epsilon$ we will have \[ \left| \frac{1}{x} - 0 \right| < \epsilon. \] The above expression indicates that $\frac{1}{x}\approx 0$ at least up to a precision of $\epsilon$.

The second monk will have no choice but to agree that indeed $\frac{1}{x}$ goes to 0, since the argument can be repeated for any required precision $\epsilon >0$. By showing that the function $f(x)$ approaches $0$ arbitrarily closely for large values of $x$, we have proven that $\lim_{x\to \infty}f(x)=0$.
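
The monks' game can be played on a computer. In this sketch, the first monk's strategy is to answer any precision $\epsilon$ with the starting point $S_\epsilon = 1/\epsilon$, which works because $x > 1/\epsilon$ implies $\frac{1}{x} < \epsilon$. The function names and the sample values of $x$ are my own, and checking a few samples is of course only an illustration, not a proof.

```python
def starting_point(eps):
    """For f(x) = 1/x, any x > 1/eps gives |1/x - 0| < eps."""
    return 1.0 / eps

def within_precision(x, eps):
    """Check the monk's claim |1/x - 0| < eps at one value of x."""
    return abs(1.0 / x - 0.0) < eps

for eps in (0.1, 1e-3, 1e-6):
    S = starting_point(eps)
    samples = [2 * S, 10 * S, 1000 * S]  # a few x values beyond S
    print(eps, S, all(within_precision(x, eps) for x in samples))
```

Whatever precision the second monk announces, the first monk has a ready answer.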

More generally, the function $f(x)$ can converge to any number $L$

as $x$ takes on larger and larger values:

\[

\lim_{x \to \infty} f(x) = L.

\]

The above expression means that, for any precision $\epsilon>0$,

there exists a starting point $S_\epsilon$,

after which $f(x)$ equals its limit $L$ to within $\epsilon$ precision:

\[

\left|f(x) - L\right| <\epsilon, \qquad \forall x \geq S_\epsilon.

\]

Example

You are asked to calculate $\lim_{x\to \infty} \frac{2x+1}{x}$, that is you are given the function $f(x)=\frac{2x+1}{x}$ and you have to figure out what the function looks like for very large values of $x$. Note that we can rewrite the function as $\frac{2x+1}{x}=2+\frac{1}{x}$ which will make it easier to see what is going on: \[ \lim_{x\to \infty} \frac{2x+1}{x} = \lim_{x\to \infty}\left( 2 + \frac{1}{x} \right) = 2 + \lim_{x\to \infty}\left( \frac{1}{x} \right) = 2 + 0, \] since $\frac{1}{x}$ tends to zero for large values of $x$.

In a first calculus course you are not required to prove statements like $\lim_{x\to \infty}\frac{1}{x}=0$, you can just assume that the result is obvious. As the denominator $x$ becomes larger and larger, the fraction $\frac{1}{x}$ becomes smaller and smaller.

Types of limits

Limits to infinity

The expression \[ \lim_{x\to \infty} f(x) \] describes what happens to $f(x)$ for very large values of $x$.

Limits to a number

The limit of $f(x)$ approaching $x=a$ from above (from the right) is denoted: \[ \lim_{x\to a^+} f(x) \] Similarly, the expression \[ \lim_{x\to a^-} f(x) \] describes what happens to $f(x)$ as $x$ approaches $a$ from below (from the left), i.e., with values like $x=a-\delta$, with $\delta>0, \delta \to 0$. If both limits from the left and from the right of some number are equal, then we can talk about the limit as $x\to a$ without specifying the direction: \[ \lim_{x\to a} f(x) = \lim_{x\to a^+} f(x) = \lim_{x\to a^-} f(x). \]

Example 2

You are now asked to calculate $\lim_{x\to 5} \frac{2x+1}{x}$. Nothing special happens to the function at $x=5$, so we can simply plug in the value: \[ \lim_{x\to 5} \frac{2x+1}{x} = \frac{2(5)+1}{5} = \frac{11}{5}. \]

Example 3

Find $\lim_{x\to 0} \frac{2x+1}{x}$. If we just plug $x=0$ into the fraction we get a divide-by-zero error, $\frac{2(0)+1}{0}$, so a more careful treatment will be required.

Consider first the limit from the right $\lim_{x\to 0^+} \frac{2x+1}{x}$. We want to approach the value $x=0$ with small positive numbers. The best way to carry out the calculation is to define some small positive number $\delta>0$, to choose $x=\delta$, and to compute the limit: \[ \lim_{\delta\to 0} \frac{2(\delta)+1}{\delta} = 2 + \lim_{\delta\to 0} \frac{1}{\delta} = 2 + \infty = \infty. \] We took it for granted that $\lim_{\delta\to 0} \frac{1}{\delta}=\infty$. Intuitively, we can imagine how we get closer and closer to $x=0$ in the limit. When $\delta=10^{-3}$ the function value will be $\frac{1}{\delta}=10^3$. When $\delta=10^{-6}$, $\frac{1}{\delta}=10^6$. As $\delta \to 0$ the function will blow up: $f(x)$ will go up all the way to infinity.

If we take the limit from the left (small negative values of $x$) we get \[ \lim_{\delta\to 0} f(-\delta) = \lim_{\delta\to 0} \frac{2(-\delta)+1}{-\delta} = 2 - \lim_{\delta\to 0} \frac{1}{\delta} = -\infty. \] Therefore, since $\lim_{x\to 0^+}f(x)$ does not equal $\lim_{x\to 0^-} f(x)$, we say that $\lim_{x\to 0} f(x)$ does not exist.
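
You can watch the two one-sided limits disagree by evaluating the function at $x=\delta$ and $x=-\delta$ for shrinking values of $\delta$. This is just a quick numerical sketch; the particular values of $\delta$ are arbitrary choices of mine.

```python
def f(x):
    return (2 * x + 1) / x

for delta in (1e-1, 1e-3, 1e-6):
    # columns: delta, value just right of 0, value just left of 0
    print(delta, f(delta), f(-delta))
```

The middle column grows without bound in the positive direction while the last column heads off in the negative direction, which is why no single two-sided limit exists at $x=0$.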

Continuity

A function $f(x)$ is continuous at $a$ if the limit of $f$ as $x\to a$ converges to $f(a)$: \[ \lim_{x \to a} f(x) = f(a). \]

Most functions we will study in calculus are continuous, but not all functions are. For example, functions which make sudden jumps are not continuous. Another example is the function $f(x)=\frac{2x+1}{x}$, which is discontinuous at $x=0$ (because the limit $\lim_{x \to 0} f(x)$ doesn't exist and $f(0)$ is not defined). Note that $f(x)$ is continuous everywhere else on the real line.

Formulas

We now switch gears into reference mode, as I will state a whole bunch of known formulas for limits of various kinds of functions. You are not meant to know why these limit formulas are true, but simply understand what they mean.

The following statements tell you about the relative sizes of functions. If the limit of the ratio of two functions is equal to $1$, then these functions must behave similarly in the limit. If the limit of the ratio goes to zero, then one function must be much larger than the other in the limit.

Limits of trigonometric functions: \[ \lim_{x\rightarrow0}\frac{\sin(x)}{x}=1,\quad \lim_{x\rightarrow0} \cos(x)=1,\quad \lim_{x\rightarrow 0}\frac{1-\cos x }{x}=0, \quad \lim_{x\rightarrow0}\frac{\tan(x)}{x}=1. \]

The number $e$ is defined as one of the following limits: \[ e \equiv \lim_{n\rightarrow\infty}\left(1+\frac{1}{n}\right)^n = \lim_{\epsilon\to 0 }(1+\epsilon)^{1/\epsilon}. \] The first limit corresponds to a compound interest calculation, with annual interest rate of $100\%$ and compounding performed infinitely often.
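
The compound interest interpretation is easy to check numerically. In this sketch the function name `compound` and the compounding frequencies (yearly, monthly, daily, a million times a year) are my own illustrative choices.

```python
import math

def compound(n):
    """Value of (1 + 1/n)^n: 100% annual interest compounded n times a year."""
    return (1 + 1 / n) ** n

for n in (1, 12, 365, 10**6):
    # columns: n, (1 + 1/n)^n, remaining gap to e
    print(n, compound(n), math.e - compound(n))
```

The gap in the last column should shrink as $n$ grows, in line with the first limit above.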

For future reference, we state some other limits involving the exponential function: \[ \lim_{x\rightarrow0}\frac{{\rm e}^x-1}{x}=1,\qquad \quad \lim_{n\rightarrow\infty}\left(1+\frac{x}{n}\right)^n={\rm e}^x. \]

These are some limits involving logarithms: \[ \lim_{x\rightarrow 0^+}x^a\ln(x)=0,\qquad \lim_{x\rightarrow\infty}\frac{\ln^p(x)}{x^a}=0, \ \forall p < \infty, \] both valid for $a>0$, and \[ \lim_{x\rightarrow0}\frac{\ln(1+ax)}{x}=a,\qquad \lim_{x\rightarrow0}\frac{a^{x}-1}{x}=\ln(a). \]

The power function $x^p$ with $p>0$ (for example, the leading term of a polynomial of degree $p$) and the exponential function base $a$ with $a > 1$ both go to infinity as $x$ goes to infinity: \[ \lim_{x\rightarrow\infty} x^p= \infty, \qquad \qquad \lim_{x\rightarrow\infty} a^x= \infty. \] Though both functions go to infinity, the exponential function does so much faster, so their relative ratio goes to zero: \[ \lim_{x\rightarrow\infty}\frac{x^p}{a^x}=0, \qquad \mbox{for all } p \in \mathbb{R}, a>1. \] In computer science, people make a big deal of this distinction when comparing the running time of algorithms. We say that a problem is efficiently solvable (tractable) if the number of steps it takes to solve it is polynomial in the size of the input. If the algorithm takes an exponential number of steps, then for all intents and purposes it is useless for large inputs, because if you give it a large enough input it will take longer than the age of the universe to finish.
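
Here is a small sketch of that race between a power and an exponential. The values $p=3$ and $a=2$ are assumptions picked purely for illustration, and `ratio` is a name I made up.

```python
def ratio(x, p=3, a=2):
    """x^p / a^x for the illustrative choices p = 3 and a = 2."""
    return x ** p / a ** x

for x in (1, 10, 20, 50, 100):
    print(x, ratio(x))
```

Note that the ratio can grow at first for small $x$; the limit only tells us what happens eventually, once the exponential takes over.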

Other limits: \[ \lim_{x\rightarrow0}\frac{\arcsin(x)}{x}=1,\qquad \lim_{x\rightarrow\infty}\sqrt[x]{x}=1. \]

Limit rules

If you are taking the limit of a fraction $\frac{f(x)}{g(x)}$, and you have $\lim_{x\to\infty}f(x)=0$ and $\lim_{x\to\infty}g(x)=\infty$, then we can informally write: \[ \lim_{x\to \infty} \frac{f(x)}{g(x)} = \frac{\lim_{x\to \infty} f(x)}{ \lim_{x\to \infty} g(x)} = \frac{0}{\infty} = 0, \] since both functions are helping to drive the fraction to zero.

Alternately, if you ever get a fraction of the form $\frac{\infty}{0}$ as a limit, with the denominator approaching zero through positive values, then both functions are helping to make the fraction grow to infinity, so we have $\frac{\infty}{0} = \infty$.

L'Hopital's rule

Sometimes when evaluating limits of fractions $\frac{f(x)}{g(x)}$, you might end up with a fraction like \[ \frac{0}{0}, \qquad \text{or} \qquad \frac{\infty}{\infty}. \] These are called indeterminate forms. Is the effect of the numerator stronger or the effect of the denominator stronger?

One way to find out is to compare the ratio of their derivatives. This is called L'Hopital's rule: \[ \lim_{x\rightarrow a}\frac{f(x)}{g(x)} \ \ \ \overset{\textrm{H.R.}}{=} \ \ \ \lim_{x\rightarrow a}\frac{f'(x)}{g'(x)}. \] The rule applies when the limit on the right-hand side exists.
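
As a sanity check of the rule, take the $\frac{0}{0}$ form $\frac{e^x-1}{x}$ as $x\to 0$. The derivative of the numerator is $e^x$ and the derivative of the denominator is $1$, so the rule predicts the limit $\frac{e^0}{1}=1$. The sketch below (function names are my own) compares the two ratios numerically for small $x$.

```python
import math

def original_ratio(x):
    return (math.exp(x) - 1) / x  # f(x)/g(x), a 0/0 form at x = 0

def derivative_ratio(x):
    return math.exp(x) / 1        # f'(x)/g'(x)

for x in (1e-1, 1e-3, 1e-5):
    print(x, original_ratio(x), derivative_ratio(x))
```

Both columns should settle towards the same value as $x$ shrinks.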

Derivatives

The derivative of a function $f(x)$ is another function, which we will call $f'(x)$, that tells you the slope of $f(x)$. For example, the constant function $f(x)=c$ has slope $f'(x)=0$, since a constant function is flat. What is the derivative of a line $f(x)=mx+b$? The derivative is the slope, right? So we must have $f'(x)=m$. What about more complicated functions?

Definition

The derivative of a function is defined as: \[ f'(x) \equiv \lim_{ \epsilon \rightarrow 0}\frac{f(x+\epsilon)-f(x)}{\epsilon}. \] You can think of $\epsilon$ as a really small number. I mean really small. The above formula is nothing more than the rise-over-run rule for calculating the slope of a line, \[ \frac{ \text{rise} } { \text{run} } = \frac{ \Delta y } { \Delta x } = \frac{y_f - y_i}{x_f - x_i} = \frac{f(x+\epsilon)\ - \ f(x)}{x + \epsilon \ -\ x}, \] but by taking $\epsilon$ to be really small, we will get the slope at the point $x$.

Derivatives occur so often in math that people have come up with many different notations for them. Don't be fooled by that. All of them mean the same thing: $Df(x) = f'(x)=\frac{df}{dx}=\dot{f}=\nabla f$.

Applications

Knowing how to take derivatives is very useful in life. Given some phenomenon described by $f(x)$ you can say how it changes over time. Many times we don't actually care about the value of $f'(x)$, just its sign. If the derivative is positive $f'(x) > 0$, then the function is increasing. If $f'(x) < 0$ then the function is decreasing.

When the function is flat at a certain $x$ then $f'(x)=0$. The points where $f'(x)=0$ (the roots of $f'(x)$) are very important for finding the maximum and minimum values of $f(x)$. Recall how we calculated the maximum height $h$ that a projectile reaches by first finding the time $t_{top}$ when its velocity in the $y$ direction was zero, $y^\prime(t_{top})=v(t_{top})=0$, and then substituting this time in $y(t)$ to obtain $h=\max\{ y(t) \} =y(t_{top})$.

Example

Now let's take a derivative of $f(x)=2x^2 + 3$ to see how that complicated-looking formula works: \[ f'(x)=\lim_{\epsilon \rightarrow 0} \frac{f(x+\epsilon)-f(x)}{\epsilon} = \lim_{\epsilon \rightarrow 0} \frac{2(x+\epsilon)^2+3 \ \ - \ \ (2x^2 + 3)}{\epsilon}. \] Let's simplify the right-hand side a bit \[ \frac{2x^2+ 4x\epsilon +2\epsilon^2 - 2x^2}{\epsilon} = \frac{4x\epsilon +2\epsilon^2}{\epsilon}= \frac{4x\epsilon}{\epsilon} + \frac{2\epsilon^2}{\epsilon}. \] Now when we take the limit, the second term disappears: \[ f'(x) = \lim_{\epsilon \rightarrow 0} \left( \frac{4x\epsilon}{\epsilon} + \frac{2\epsilon^2}{\epsilon} \right) = 4x + 0. \] Congratulations, you have just taken your first derivative! The calculation was not that complicated, but it was pretty long and tedious. The good news is that you need to calculate the derivative from first principles only once. Once you find a derivative formula for a particular function, you can use the formula every time you see a function of that form.
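
If you don't trust the algebra, you can let a computer evaluate the rise-over-run fraction for smaller and smaller values of $\epsilon$. This is a rough numerical sketch: the helper name `difference_quotient` and the point $x=3$ are arbitrary choices of mine.

```python
def f(x):
    return 2 * x ** 2 + 3

def difference_quotient(f, x, eps):
    """Rise over run for a run of length eps."""
    return (f(x + eps) - f(x)) / eps

x = 3.0  # illustrative point, where the formula predicts a slope of 4x = 12
for eps in (1.0, 0.1, 0.001, 1e-6):
    # columns: eps, rise over run, predicted slope 4x
    print(eps, difference_quotient(f, x, eps), 4 * x)
```

As $\epsilon$ shrinks, the middle column should approach the value $4x$ in the last column.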

A derivative formula

\[ f(x) = x^n \qquad \Rightarrow \qquad f'(x) = n x^{n-1}. \]

Example

Use the above formula to find the derivatives of the following three functions: \[ f(x) = x^{10}, \quad g(x) = \sqrt{x^3}, \qquad h(x) = \frac{1}{x^3}. \] In the first case, we use the formula directly to find the derivative $f'(x)=10x^9$. In the second case, we first use the fact that square root is equivalent to an exponent of $\frac{1}{2}$ to rewrite the function as $g(x)=x^{\frac{3}{2} }$, then using the formula we find that $g'(x)=\frac{3}{2}x^{\frac{1}{2} } =\frac{3}{2}\sqrt{x}$. We can also rewrite the third function as $h(x)=x^{-3}$ and then compute the derivative $h'(x)=-3x^{-4}=\frac{-3}{x^4}$ using the formula.
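
The three answers can be double-checked numerically. The sketch below compares the formula $nx^{n-1}$ against a symmetric difference quotient, which is a standard numerical approximation of the slope and not something introduced in this text; the helper names and the test point $x=2$ are my own assumptions.

```python
def power_rule(n):
    """Return the derivative of x**n as a function, using n * x**(n-1)."""
    return lambda x: n * x ** (n - 1)

def central_difference(f, x, h=1e-6):
    """Symmetric rise over run around x, a numerical stand-in for f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

x = 2.0
for n in (10, 1.5, -3):  # x^10, sqrt(x^3) = x^(3/2), 1/x^3 = x^(-3)
    exact = power_rule(n)(x)
    approx = central_difference(lambda t: t ** n, x)
    print(n, exact, approx)
```

The two columns should agree to several decimal places for each of the three exponents.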

Discussion

In the next section we will develop derivative formulas for other functions.

Formulas to memorize

\[ \begin{align*} F(x) & \ - \textrm{ diff. } \to \quad F'(x) \nl \int f(x)\;dx & \ \ \leftarrow \textrm{ int. } - \quad f(x) \nl a &\qquad\qquad\qquad 0 \nl x &\qquad\qquad\qquad 1 \nl af(x) &\qquad\qquad\qquad af'(x) \nl f(x)+g(x) &\qquad\qquad\qquad f'(x)+g'(x) \nl x^n &\qquad\qquad\qquad nx^{n-1} \nl 1/x=x^{-1} &\qquad\qquad\qquad -x^{-2} \nl \sqrt{x}=x^{\frac{1}{2}} &\qquad\qquad\qquad \frac{1}{2}x^{-\frac{1}{2}} \nl {\rm e}^x &\qquad\qquad\qquad {\rm e}^x \nl a^x &\qquad\qquad\qquad a^x\ln(a) \nl \ln(x) &\qquad\qquad\qquad 1/x \nl \log_a(x) &\qquad\qquad\qquad (x\ln(a))^{-1} \nl \sin(x) &\qquad\qquad\qquad \cos(x) \nl \cos(x) &\qquad\qquad\qquad -\sin(x) \nl \tan(x) &\qquad\qquad\qquad \sec^2(x)\equiv\cos^{-2}(x) \nl \csc(x) \equiv \frac{1}{\sin(x)} &\qquad\qquad\qquad -\sin^{-2}(x)\cos(x) \nl \sec(x) \equiv \frac{1}{\cos(x)} &\qquad\qquad\qquad \tan(x)\sec(x) \nl \cot(x) \equiv \frac{1}{\tan(x)} &\qquad\qquad\qquad -\csc^2(x) \nl \sinh(x) &\qquad\qquad\qquad \cosh(x) \nl \cosh(x) &\qquad\qquad\qquad \sinh(x) \nl \sin^{-1}(x) &\qquad\qquad\qquad \frac{1}{\sqrt{1-x^2}} \nl \cos^{-1}(x) &\qquad\qquad\qquad \frac{-1}{\sqrt{1-x^2}} \nl \tan^{-1}(x) &\qquad\qquad\qquad \frac{1}{1+x^2} \end{align*} \]

Derivative rules

Taking derivatives is a simple task: you just have to look up the appropriate formula in the table of derivative formulas. However, the tables of derivatives usually don't have the formulas for composite functions. In this section, we will learn about some important rules for derivatives, so that you will know how to handle derivatives of composite functions.

Formulas

Linearity

The derivative of a sum of two functions is the sum of the derivatives: \[ \left[f(x) + g(x)\right]^\prime= f^\prime(x) + g^\prime(x), \] and for any constant $a$, we have \[ \left[a f(x)\right]^\prime= a f^\prime(x). \] The fact that the derivative operation obeys these two conditions means that derivatives are linear operations.

Product rule

The derivative of a product of two functions is obtained as follows: \[ \left[ f(x)g(x) \right]^\prime = f^\prime(x)g(x) + f(x)g^\prime(x). \]

Quotient rule

As a special case of the product rule, we obtain the derivative rule for a fraction of two functions: \[ \left[ \frac{f(x)}{g(x)}\right]^\prime=\frac{f'(x)g(x)-f(x)g'(x)}{g(x)^2}. \]

Chain rule

If you have a situation with an inner function and outer function like $f(g(x))$, then the derivative is obtained in a two step process: \[ \left[ f(g(x)) \right]^\prime = f^\prime(g(x))g^\prime(x). \] In the first step you leave $g(x)$ alone and focus on taking the derivative of the outer function. Just copy over whatever $g(x)$ is inside the $f'$ expression. The second step is to multiply the resulting expression by the derivative of the inner function $g'(x)$.

In words, the chain rule tells us that the rate of change of a composite function can be calculated as the product of the rates of change of its components.

Example

\[ \frac{d}{dx}\left[ \sin(x^2) \right] = \cos(x^2)[x^2]' = \cos(x^2)2x. \]

More complicated example

The chain rule also applies to functions of functions of functions $f(g(h(x)))$. To take the derivative, just start from the outermost function and then work your way towards $x$. \[ \left[ f(g(h(x))) \right]' = f'(g(h(x))) g'(h(x)) h'(x). \] Now let's try this \[ \frac{d}{dx} \left[ \sin( \ln( x^3) ) \right] = \cos( \ln(x^3) ) \frac{1}{x^3} 3x^2 = \cos( \ln(x^3) ) \frac{3}{x}. \] Simple right?
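
To check the result for $\sin(\ln(x^3))$, we can compare the chain rule answer with a numerical slope. As before, this is only a sketch: the symmetric difference quotient is a numerical approximation I am bringing in for the check, and the sample points are arbitrary positive values of $x$ (the logarithm needs $x>0$).

```python
import math

def f(x):
    return math.sin(math.log(x ** 3))

def f_prime(x):
    # Chain rule result from the text: cos(ln(x^3)) * 3/x
    return math.cos(math.log(x ** 3)) * 3 / x

def central_difference(f, x, h=1e-6):
    """Symmetric rise over run around x, a numerical stand-in for f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (0.5, 1.0, 2.0):
    print(x, f_prime(x), central_difference(f, x))
```

If the outermost-to-innermost recipe was applied correctly, the two columns should match closely.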

Examples

The above rules are all that you need to take the derivative of any function no matter how complicated. To convince you of this, I will now show you some examples of really hairy functions. Don't be scared by complexity: as long as you follow the rules, you will get the right answer in the end.

Example

Calculate the derivative of \[ f(x) = e^{x^2}. \] We just need the chain rule for this one: \[ \begin{align} f'(x) & = e^{x^2}[x^2]' \nl & = e^{x^2}2x. \end{align} \]

Example 2

\[ f(x) = \sin(x)e^{x^2}. \] We will need the product rule for this one: \[ \begin{align} f'(x) & = \cos(x)e^{x^2} + \sin(x)2xe^{x^2}. \end{align} \]

Example 3

\[ f(x) = \sin(x)e^{x^2}\ln(x). \] This is still the product rule, but now we will have three terms. In each term, we take the derivative of one of the functions and multiply by the other two: \[ \begin{align} f'(x) & = \cos(x)e^{x^2}\ln(x) + \sin(x)2xe^{x^2}\ln(x) + \sin(x)e^{x^2}\frac{1}{x}. \end{align} \]
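
The three-term product rule answer can be verified in the same numerical spirit. In this sketch, `central_difference` is a symmetric difference quotient that I use as an approximate slope, and the sample points are arbitrary positive values of $x$.

```python
import math

def f(x):
    return math.sin(x) * math.exp(x ** 2) * math.log(x)

def f_prime(x):
    # One term per factor: differentiate that factor, keep the other two.
    e = math.exp(x ** 2)
    return (math.cos(x) * e * math.log(x)
            + math.sin(x) * 2 * x * e * math.log(x)
            + math.sin(x) * e / x)

def central_difference(f, x, h=1e-6):
    """Symmetric rise over run around x, a numerical stand-in for f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (0.5, 1.0, 1.5):
    print(x, f_prime(x), central_difference(f, x))
```

At $x=1$ the first two terms vanish because $\ln(1)=0$, which makes that row a handy spot check.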

Example 4

Ok let's go crazy now: \[ f(x) = \sin\!\left( \cos\!\left( \tan(x) \right) \right). \] We need a triple chain rule for this one: \[ \begin{align} f'(x) & = \cos\!\left( \cos\!\left( \tan(x) \right) \right) \left[ \cos\!\left( \tan(x) \right) \right]^\prime \nl & = -\cos\!\left( \cos\!\left( \tan(x) \right) \right) \sin\!\left( \tan(x) \right)\left[ \tan(x) \right]^\prime \nl & = -\cos\!\left( \cos\!\left( \tan(x) \right) \right) \sin\!\left( \tan(x) \right)\sec^2(x). \end{align} \]

Explanations

Proof of the product rule

By definition, the derivative of $f(x)g(x)$ is \[ \left( f(x)g(x) \right)' = \lim_{\epsilon \rightarrow 0} \frac{f(x+\epsilon)g(x+\epsilon)-f(x)g(x)}{\epsilon}. \] Consider the numerator of the fraction. If we add and subtract $f(x)g(x+\epsilon)$, we can factor the expression into two terms like this: \[ \begin{align} & f(x+\epsilon)g(x+\epsilon) \ \overbrace{-f(x)g(x+\epsilon) +f(x)g(x+\epsilon)}^{=0} \ - f(x)g(x) \nl & \ \ \ = [f(x+\epsilon)-f(x) ]g(x+\epsilon) + f(x)[ g(x+\epsilon)- g(x)], \end{align} \] thus the expression for the derivative of the product becomes \[ \left( f(x)g(x) \right)' = \left\{ \lim_{\epsilon \rightarrow 0} \frac{[f(x+\epsilon)-f(x) ]}{\epsilon}g(x+\epsilon) + f(x) \frac{[ g(x+\epsilon)- g(x)]}{\epsilon} \right\}. \] This looks almost exactly like the product rule formula, except that we have $g(x+\epsilon)$ instead of $g(x)$. This is not a problem, though, since we assumed that $f(x)$ and $g(x)$ are differentiable functions, which implies that they are continuous functions. For continuous functions, we have $\lim_{\epsilon \rightarrow 0}g(x+\epsilon) = g(x)$ and we obtain the final form of the product rule: \[ \left( f(x)g(x) \right)' = f'(x)g(x) + f(x)g'(x). \]

Proof of the chain rule

Before we begin the proof, I want to make a remark on the notation used in the definition of the derivative. I like the Greek letter epsilon $\epsilon$ so I defined the derivative of $f(x)$ as \[ f'(x)=\lim_{\epsilon \rightarrow 0} \frac{f(x+\epsilon)-f(x)}{\epsilon}, \] but I could have used any other variable instead: \[ f'(x) \equiv \lim_{\delta \rightarrow 0} \frac{f(x+\delta)-f(x)}{\delta} \equiv \lim_{h \rightarrow 0} \frac{f(x+h)-f(x)}{h}. \] All that matters is that we divide by the same quantity that is added to $x$ in the numerator, and that this quantity goes to zero.

The derivative of $f(g(x))$ is \[ \left( f(g(x)) \right)' = \lim_{\epsilon \rightarrow 0} \frac{f(g(x+\epsilon))-f(g(x))}{\epsilon}. \] The trick is to define a new quantity \[ \delta = g(x+\epsilon)-g(x), \] and then substitute $g(x+\epsilon) = g(x) + \delta$ into the expression for the derivative as follows \[ \left( f(g(x)) \right)' = \lim_{\epsilon \rightarrow 0} \frac{f(g(x) + \delta)-f(g(x))}{\epsilon}. \] This is starting to look more like a derivative formula, but the quantity added in the input is different from the quantity by which we divide. To fix this we will multiply and divide by $\delta$ to obtain \[ \lim_{\epsilon \rightarrow 0} \frac{f(g(x) + \delta)-f(g(x))}{\epsilon}\frac{\delta}{\delta} = \lim_{\epsilon \rightarrow 0} \frac{f(g(x) + \delta)-f(g(x))}{\delta}\frac{\delta}{\epsilon}. \] We now use the definition of the quantity $\delta$ and rearrange the fraction as follows: \[ \left( f(g(x)) \right)' = \lim_{\epsilon \rightarrow 0} \frac{f(g(x) + \delta)-f(g(x))}{\delta}\frac{g(x+\epsilon)-g(x)}{\epsilon}. \] This is starting to look a lot like $f'(g(x))g'(x)$, and in fact it is: taking the limit $\epsilon \to 0$ implies that the quantity $\delta(\epsilon) \to 0$. This is because the function $g(x)$ is continuous: $\lim_{\epsilon \rightarrow 0} g(x+\epsilon)-g(x)=0$. And so the quantity $\delta$ is just as good as $\epsilon$ for taking a derivative. Thus, we have proved that: \[ \left( f(g(x)) \right)' = f'(g(x))g'(x). \]
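To see the two factors from the proof take shape, here is a small numerical sketch. The choices $f(u)=\sin(u)$, $g(x)=x^2$ and $x=1$ are my own example, and `chain_factors` is a hypothetical helper name. As $\epsilon$ shrinks, the first factor should approach $f'(g(x))=\cos(1)$ and the second should approach $g'(x)=2$.

```python
import math


def f(u):
    return math.sin(u)  # outer function (example choice)


def g(x):
    return x ** 2  # inner function (example choice)


def chain_factors(eps, x=1.0):
    # delta is the change in g caused by the small step eps, as in the proof.
    delta = g(x + eps) - g(x)
    outer = (f(g(x) + delta) - f(g(x))) / delta  # tends to f'(g(x))
    inner = delta / eps                          # tends to g'(x)
    return outer, inner


for eps in (1e-1, 1e-3, 1e-5):
    outer, inner = chain_factors(eps)
    print(eps, outer, inner, outer * inner)

print("f'(g(1)) g'(1) =", 2 * math.cos(1.0))
```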

Alternate notation

The presence of so many primes and brackets in the above expressions can make them difficult to read. This is why we sometimes use a different notation for derivatives. The three rules of derivatives in the alternate notation are as follows:

Linearity: \[ \frac{d}{dx}(\alpha f(x) + \beta g(x))= \alpha\frac{df}{dx} + \beta\frac{dg}{dx}. \] Product rule: \[ \frac{d}{dx}(f(x)g(x)) = \frac{df}{dx}g(x) + f(x)\frac{dg}{dx}. \] Chain rule: \[ \frac{d}{dx}\left( f(g(x)) \right) = \frac{df}{dg}\frac{dg}{dx}. \]

Optimization: calculus' killer app

The reason why you need to learn about derivatives is that this skill will allow you to optimize any function. Suppose you have control over the input of the function $f(x)$ and you want to pick the best value of $x$. Best usually means maximum (if the function measures something good like profits) or minimum (if the function describes something bad like costs).

Example

The drug boss for the whole of the lower Chicago area has recently had a lot of problems with the police intercepting his people on the street. It is clear that the more drugs he sells, the more money he will make, but if he starts to sell too much, police arrests become more frequent and he loses money.

Fed up with this situation, he decides he needs to find the optimal amount of drugs to put out on the streets: as much as possible, but not too much for the police raids to kick in. So one day he tells his brothers and sisters in crime to leave the room and picks up a pencil and a piece of paper to do some calculus.

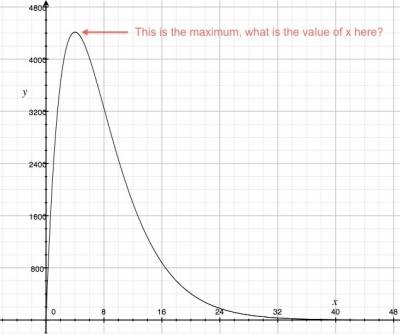

If $x$ is the amount of drugs he puts out on the street every day, then the amount of money he makes is given by the function: \[ f(x) = 3000x e^{-0.25x}, \] where the linear part $3000x$ represents his profits if there is no police and the $e^{-0.25x}$ represents the effects of the police stepping up their actions when more drugs are pumped onto the street.

Looking at the function he asks “What is the value of $x$ which will give me the most profits from my criminal dealings?” Stated mathematically, he is asking for \[ \mathop{\text{argmax}}_x \ 3000x e^{-0.25x} \ = \ ?, \] which is read “find the value of the argument $x$ that gives the maximum value of $f(x)$.”

He remembers the steps required to find the maximum of a function from a conversation with a crooked stock trader he met in prison. First he must take the derivative of the function. Because the function is a product of two functions, he has to use the product rule $(fg)' = f'g+fg'$. When he takes the derivative of $f(x)$ he gets: \[ f'(x) = 3000e^{-0.25x} + 3000x(-0.25)e^{-0.25x}. \]

Whenever $f'(x)=0$ this means the function $f(x)$ has zero slope. A maximum is just the kind of place where there is zero slope: think of the peak of a mountain that has steep slopes to the left and to right, but right at the peak it is momentarily horizontal.

So when is the derivative zero? \[ f'(x) = 3000e^{-0.25x} + 3000x(-0.25)e^{-0.25x} = 0. \] We can factor out the $3000$ and the exponential function to get \[ 3000e^{-0.25x}( 1 -0.25x) = 0. \] Now $3000\neq0$ and the exponential function $e^{-0.25x}$ is never equal to zero either so it must be the term in the bracket which is equal to zero: \[ (1 -0.25x) = 0, \] or $x=4$. The slope of $f(x)$ is equal to zero when $x=4$. This corresponds to the peak of the curve.

Right then and there the crime boss called his posse back into the room and proudly announced that from now on his organization will put out exactly four kilograms of drugs on the street per day.

“Boss, how much will we make per day if we sell four kilograms?”, asks one of the gangsters in sweatpants.

“We will make the maximum possible!”, replies the boss.

“Yes I know Boss, but how much money is the maximum?”

The dude in sweatpants is asking a good question. It is one thing to know where the maximum occurs and it is another to know the value of the function at this point. He is asking the following mathematical question: \[ \max_x \ 3000x e^{-0.25x} \ = \ ?. \] Since we already know the value $x^*=4$ where the maximum occurs, we simply have to plug it into the function $f(x)$ to get: \[ \max_x f(x) = f(4) = 3000(4)e^{-0.25(4)} = \frac{12000}{e} \approx 4414.55. \]
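The boss's pencil-and-paper result can be double-checked with a few lines of Python. This is only a sketch: the scan range from 0 to 20 in steps of 0.01 is an assumption I made for illustration, and the names `profit`, `profit_derivative` and `grid` are hypothetical.

```python
import math


def profit(x):
    # f(x) = 3000 x e^{-0.25 x}
    return 3000 * x * math.exp(-0.25 * x)


def profit_derivative(x):
    # f'(x) in factored form: 3000 e^{-0.25 x} (1 - 0.25 x)
    return 3000 * math.exp(-0.25 * x) * (1 - 0.25 * x)


# Brute-force scan: try every x from 0 to 20 in steps of 0.01 (assumed range).
grid = [i / 100 for i in range(0, 2001)]
best_x = max(grid, key=profit)

print("argmax:", best_x)
print("max   :", round(profit(best_x), 2))
print("slope at x=4:", profit_derivative(4))
```

The scan is a crude way to find a maximum, but it agrees with the calculus: the best grid point is $x=4$, the slope there is zero, and the profit is $12000/e$.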

After that conversation, everyone, including the boss, started to question their choice of occupation in life. Is crime really worth it when you do the numbers?

As you may know, the system is obsessed with this whole optimization thing. Optimize to make more profits, optimize to minimize costs, optimize stealing of natural resources from Third World countries, optimize anything that moves basically. Therefore, the system wants you, the young and powerful generation of the future, to learn this important skill and become faithful employees in the corporations. They want you to know so that you can help them optimize things, so that the whole enterprise will continue to run smoothly.

Mathematics makes no value judgments about what should and should not be optimized; this part is up to you. If, like me, you don't want to use optimization for system shit, you can use calculus for science. It doesn't matter whether it will be physics or medicine or building your own business, it is all good. Just stay away from the system. Please do this for me.

Optimization algorithm

In this section we show and explain the details of the algorithm for finding the maximum of a function. This is called optimization, as in finding the optimal value(s).

Say you have the function $f(x)$ that represents a real world phenomenon. For example, $f(x)$ could represent how much fun you have as a function of alcohol consumed during one evening. We all know that too much $x$ and the fun stops and you find yourself, like the Irish say, “talking to God on the big white phone.” Too little $x$ and you might not have enough Dutch courage to chat up that girl/guy from the table across the room. To have as much fun as possible, you want to find the alcohol consumption $x^*$ where $f$ takes on its maximum value.

This is one of the prominent applications of calculus (optimization not alcohol consumption). This is why you have been learning about all those limits, derivative formulas and differentiation rules in the previous sections.

Definitions

- $x$: the variable we have control over.

- $[x_i,x_f]$: some interval of values where $x$ can be chosen from, i.e., $x_i \leq x \leq x_f$. These are the constraints on the optimization problem. (For the drinking optimization problem $x\geq 0$ since you can't drink negative alcohol, and probably $x<2$ (in litres of hard booze) because roughly around there you will die from alcohol poisoning. So we can say we are searching for the optimal amount of alcohol $x$ in the interval $[0,2]$.)

- $f(x)$: the function we want to optimize. This function has to be differentiable, meaning that we can take its derivative.

- $f'(x)$: The derivative of $f(x)$. The derivative contains the information about the slope of $f(x)$.

- maximum: A place where the function reaches a peak. Furthermore, when there are multiple peaks, we call the highest of them the global maximum, while all others are called local maxima.

- minimum: A place where the function reaches a low point: the bottom of a valley. The global minimum is the lowest point overall, whereas a local minimum is only the minimum in some neighbourhood.

- extremum: An extremum is a general term that includes maximum and minimum.

- saddle point: A place where $f'(x)=0$ but that point is neither a max nor a min. Ex: $f(x)=x^5$ when $x=0$.

Suppose some function $f(x)$ has a global maximum at $x^*$ and the value of that maximum is $f(x^*)=M$. The following mathematical notations apply:

- $\mathop{\text{argmax}}_x \ f(x)=x^*$, to refer to the location (the argument) where the maximum occurs.

- $\max_x \ f(x) = M$, to refer to the maximum value.

Algorithm for finding extrema

Input: Some function $f(x)$ and a constraint region $C=[x_i,x_f]$.

Output: The location and value of all maxima and minima of $f(x)$.

You should proceed as follows to find the extrema of a function:

- First look at $f(x)$. If you can, plot it. If not, just try to imagine it.

- Find the derivative $f'(x)$.

- Solve the equation $f'(x)=0$. There will usually be multiple solutions. Make a list of them. We will call this the list of candidates.

- For each candidate $x^*$ in the list check if it is a max, a min or a saddle point.

- If $f'(x^*-0.1)$ is positive and $f'(x^*+0.1)$ is negative, then the point $x^*$ is a max.

The function was going up, then flattens at $x^*$ then goes down after $x^*$. Therefore $x^*$ must be a peak.

- If $f'(x^*-0.1)$ is negative and $f'(x^*+0.1)$ is positive, then the point $x^*$ is a min.

The function goes down, flattens then goes up, so the point must be a minimum.

- If $f'(x^*-0.1)$ and $f'(x^*+0.1)$ have the same sign, then the point $x^*$ is a saddle point. Remove it from the list of candidates.

- Now go through the list one more time and reject all candidates $x^*$ that do not satisfy the constraints $C$. In other words, if $x^*\in [x_i,x_f]$ it stays, but if $x^* \not\in [x_i,x_f]$, we remove it since it is not feasible. For example, if you have a candidate solution in the alcohol consumption problem that says you should drink 5[L] of booze, you have to reject it, because otherwise you would die.

- Add $x_i$ and $x_f$ to the list of candidates. These are the boundaries of the constraint region and should also be considered. If no constraint was specified, use the default constraint $x \in \mathbb{R}\equiv(-\infty,\infty)$ and add $-\infty$ and $\infty$ to the list.

- For each candidate $x^*$, calculate the function value $f(x^*)$.

The resulting list is a list of local extrema: maxima, minima and endpoints. The global maximum is the largest value of $f$ found in the list (it can occur at a local maximum or at an endpoint). The global minimum is the smallest value of $f$ found in the list.
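The steps above can be sketched in Python as follows. This is a rough illustration under stated assumptions, not a general-purpose optimizer: the candidates (the solutions of $f'(x)=0$) are assumed to have been found by hand, the test step of $0.1$ is taken from the procedure above, and the function names `classify` and `find_extrema` as well as the example $f(x)=x^3-3x$ on $[-3,3]$ are my own.

```python
def classify(f_prime, x_star, step=0.1):
    # Look at the sign of the slope just to the left and right of x_star.
    left = f_prime(x_star - step)
    right = f_prime(x_star + step)
    if left > 0 and right < 0:
        return "max"
    if left < 0 and right > 0:
        return "min"
    return "saddle"


def find_extrema(f, f_prime, candidates, x_i, x_f):
    results = []
    for x_star in candidates:
        kind = classify(f_prime, x_star)
        if kind == "saddle":
            continue  # neither a max nor a min: drop it
        if not (x_i <= x_star <= x_f):
            continue  # outside the constraint region: not feasible
        results.append((x_star, f(x_star), kind))
    # The boundaries of the constraint region are candidates too.
    for endpoint in (x_i, x_f):
        results.append((endpoint, f(endpoint), "endpoint"))
    return sorted(results)


# Example (my own choice): f(x) = x^3 - 3x, so f'(x) = 3x^2 - 3 = 0 at x = -1 and x = 1.
extrema = find_extrema(
    f=lambda x: x ** 3 - 3 * x,
    f_prime=lambda x: 3 * x ** 2 - 3,
    candidates=[-1.0, 1.0],
    x_i=-3.0,
    x_f=3.0,
)
for location, value, kind in extrema:
    print(location, value, kind)
```

In this example the local maximum at $x=-1$ is not the global maximum: the largest value in the list occurs at the endpoint $x=3$, which is why the endpoints must be added to the list. Note also that a fixed step of $0.1$ will give wrong answers if two critical points are closer together than that.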

Note that in dealing with points at infinity like $x^*=\infty$, you are not actually calculating a value but the limit $\lim_{x\to\infty}f(x)$. Usually the function either blows up $f(\infty)=\infty$ (like $x$, $x^2$, $e^x$, $\ldots$), drops down indefinitely $f(\infty)=-\infty$ (like $-x$, $-x^2$, $-e^x$, $\ldots$), or reaches some value (like $\lim_{x\to\infty} \frac{1}{x}=0, \ \lim_{x\to\infty} e^{-x}=0$). If a function goes to positive $\infty$ it doesn't have a global maximum: it simply keeps growing indefinitely. Similarly, functions that go towards negative $\infty$ don't have a global minimum.

Example 1

Find all the maxima and minima of the function \[ f(x)=x^4-8x^2+356. \]

Since no interval is specified we will use the default interval $x \in \mathbb{R}= (-\infty,\infty)$. Let's go through the steps of the algorithm.

- We don't know what an $x^4$ function looks like, but it is probably similar to $x^2$: it goes up to infinity on the far left and the far right.

- Taking the derivative is simple for polynomials:

\[ f'(x)=4x^3-16x. \]

- Now we have to solve

\[ 4x^3-16x=0, \]

which is the same as

\[

4x(x^2-4)=0,

\]

which is the same as

\[

4x(x-2)(x+2)=0.

\]

So our list of candidates is $\{ x=-2, x=0, x=2 \}$.

- For each of these we have to check if it is a max, a min or a saddle point.

- For $x=-2$, we check $f'(-2.1)=4(-2.1)(-2.1-2)(-2.1+2) < 0$ and $f'(-1.9)=4(-1.9)(-1.9-2)(-1.9+2) > 0$ so $x=-2$ must be a minimum.

- For $x=0$ we try $f'(-0.1)=4(-0.1)(-0.1-2)(-0.1+2) > 0$ and $f'(0.1)=4(0.1)(0.1-2)(0.1+2) < 0$ so we have a maximum.

- For $x=2$, we check $f'(1.9)=4(1.9)(1.9-2)(1.9+2) < 0$ and $f'(2.1)=4(2.1)(2.1-2)(2.1+2) > 0$ so $x=2$ must be a minimum.

- We don't have any constraints so all of the above candidates make the cut.

- We add the two constraint boundaries $-\infty$ and $\infty$ to the list of candidates. At this point our final shortlist of candidates contains $\{ x=-\infty, x=-2, x=0, x=2, x=\infty \}$.

- We now evaluate the function $f(x)$ for each of the values to get location-value pairs $(x,f(x))$ like so: $\{ (-\infty,\infty),$ $(-2,340),$ $(0,356),$ $(2,340),$ $(\infty,\infty) \}$. Note that $f(\infty)=\lim_{x\to\infty} f(x) =$ $\infty^4 - 8\infty^2+356$ $= \infty$ and the same holds for $f(-\infty)=\infty$.

We are done now. The function has no global maximum since it goes up to infinity. It has a local maximum at $x=0$ with value $356$ and two global minima at $x=-2$ and $x=2$ both of which have value $340$. Thank you, come again.

Alternate algorithm

Instead of checking nearby points to the left and to the right of each critical point, we can use an alternate Step 4 of the algorithm known as the second derivative test. Recall that the second derivative tells you the curvature of the function: if the second derivative is positive at a critical point $x^*$, then the point $x^*$ must be a minimum. If on the other hand the second derivative at a critical point is negative, then the function must have a maximum at $x^*$. If the second derivative is zero, the test is inconclusive.

Alternate Step 4

- For each candidate $x^*$ in the list check if it is a max, a min or a saddle point.

- If $f^{\prime\prime}(x^*) < 0$ then $x^*$ is a max.

- If $f^{\prime\prime}(x^*) > 0$ then $x^*$ is a min.

- If $f^{\prime\prime}(x^*) = 0$ then revert to checking nearby values, $f'(x^*-\epsilon)$ and $f'(x^*+\epsilon)$, to determine if $x^*$ is a max, a min or a saddle point.
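As a quick illustration, here is the second derivative test applied to the candidates from Example 1, where $f(x)=x^4-8x^2+356$ and therefore $f''(x)=12x^2-16$. The function names are hypothetical and the code is only a sketch of the alternate Step 4.

```python
def f_second(x):
    # Second derivative of f(x) = x^4 - 8x^2 + 356.
    return 12 * x ** 2 - 16


def second_derivative_test(second_derivative, x_star):
    value = second_derivative(x_star)
    if value < 0:
        return "max"
    if value > 0:
        return "min"
    # f''(x*) = 0: fall back to checking f' on both sides of x*.
    return "inconclusive"


results = [second_derivative_test(f_second, x_star) for x_star in (-2, 0, 2)]
print(results)
```

The classification agrees with what we found by checking nearby points: minima at $x=\pm 2$ and a maximum at $x=0$.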

Limitations

The above optimization algorithm applies to differentiable functions of a single variable. It just happens to be that most functions you will face in life are of this kind, so what you have learned is very general. Not all functions are differentiable however. Functions with sharp corners like the absolute value function $|x|$ are not differentiable everywhere and therefore we cannot use the algorithms above. Functions with jumps in them (like the Heaviside step function) are not continuous and therefore not differentiable either so the algorithm cannot be used on them either.

There are also more general kinds of functions and optimization scenarios. We can optimize functions of multiple variables $f(x,y)$. You will learn how to do this in multivariable calculus. The techniques will be very similar to the above, but with more variables and intricate constraint regions.

Lastly, I want to comment on the fact that you can only maximize one function. Say the Chicago crime boss in the example above wanted to maximize his funds $f(x)$ and his gangster street cred $g(x)$. This is not a well-posed problem: either you maximize $f(x)$ or you maximize $g(x)$, but you can't do both. There is no reason why a single $x$ will give the highest value for both $f(x)$ and $g(x)$. If both functions are important to you, you can make a new function that combines the other two, $F(x)=f(x)+g(x)$, and maximize $F(x)$. If gangster street cred is three times more important to you than funds, you could optimize $F(x)=f(x)+3g(x)$, but it is mathematically and logically impossible to maximize two things at the same time.

Exercises

The function $f(x)=x^3-2x^2+x$ has a local maximum on the interval $x \in [0,1]$. Find where this maximum occurs and the value of $f$ at that point. ANS:$\left(\frac{1}{3},\frac{4}{27}\right)$.

Integrals

We now begin our discussion of integrals, which is the second topic in calculus. Integrals are a fancy way to add up the value of a function to get “the whole” or the sum of its values over some interval. Normally integral calculus is taught as a separate course after differential calculus, but this separation is not necessary and can be even counter-productive.

The derivative $f'(x)$ measures the change in $f(x)$, i.e., the derivative measures the differences in $f$ for an $\epsilon$-small change in the input variable $x$: \[ \text{derivative } \ \propto \ \ f(x+\epsilon)-f(x). \] Integrals, on the other hand, measure the sum of the values of $f$, between $a$ and $b$ at regular intervals of $\epsilon$: \[ \text{integral } \propto \ \ \ f(a) + f(a+\epsilon) + f(a+2\epsilon) + \ldots + f(b-2\epsilon) + f(b-\epsilon). \] The best way to understand integration is to think of it as the opposite operation of differentiation: adding up all the changes in function gives you the function value.
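The claim that adding up all the changes gives back the function value can be tried out directly. In the sketch below, the function $f(x)=x^2$, the interval $[0,3]$ and the number of steps are my own example choices.

```python
def f(x):
    return x ** 2  # example function (an assumption)


a, b, n = 0.0, 3.0, 1000
eps = (b - a) / n

# The small changes f(x + eps) - f(x) at x = a, a + eps, ..., b - eps.
changes = [f(a + k * eps + eps) - f(a + k * eps) for k in range(n)]
total_change = sum(changes)

print(total_change, f(b) - f(a))
```

All the intermediate values cancel in pairs, so the sum of the small changes equals the total change $f(b)-f(a)$, up to floating-point rounding.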

In Calculus I we learned how to take a function $f(x)$ and find its derivative $f'(x)$. In integral calculus, we will be given a function $f(x)$ and we will be asked to find its integral on various intervals.

Definitions

These are some concepts that you should already be familiar with:

- $\mathbb{R}$: The set of real numbers.

- $f(x)$: A function:

\[ f: \mathbb{R} \to \mathbb{R}, \]

which means that $f$ takes as input some number (usually we call that number $x$)

and it produces as an output another number $f(x)$ (sometimes we also give an alias for the output $y=f(x)$).

* $\lim_{\epsilon \to 0}$: limits are the mathematically rigorous

way of speaking about very small numbers.

* $f'(x)$: the derivative of $f(x)$ is the rate of change of $f$ at $x$:

\[

f'(x) = \lim_{\epsilon \to 0} \frac{f(x+\epsilon)\ - \ f(x)}{\epsilon}.

\]

The derivative is also a function of the form

\[

f': \mathbb{R} \to \mathbb{R}.

\]

The function $f'(x)$ represents the //slope// of

the function $f(x)$ at the point $(x,f(x))$.

NOINDENT These are the new concepts:

- $x_i=a$: where the integral starts, i.e., some given point on the $x$ axis.

- $x_f=b$: where the integral stops.

- $A(x_i,x_f)$: The value of the area under the curve $f(x)$ from $x=x_i$ to $x=x_f$.

- $\int f(x)\; dx$: the integral of $f(x)$.

More precisely we can define the antiderivative of $f(x)$ as follows:

\[

F(b) = \int_0^b f(x) dx \ \ + \ \ F(0).

\]

The area $A$ of the region under $f(x)$ from $x=a$ to $x=b$ is given by:

\[

\int_a^b f(x) dx = F(b) - F(a) = A(a,b).

\]

The $\int$ sign is a mnemonic for //sum//.

Indeed the integral is nothing more than the "sum" of $f(x)$ for all values of $x$ between $a$ and $b$:

\[

A(a,b) = \lim_{\epsilon \to 0}\left[ \epsilon f(a) + \epsilon f(a+\epsilon) + \ldots + \epsilon f(b-2\epsilon) + \epsilon f(b-\epsilon) \right],

\]

where we imagine the total area broken-up into thin rectangular

strips of width $\epsilon$ and height $f(x)$.

* The name antiderivative comes from the fact that

\[

F'(x) = f(x),

\]

so we have:

\[

F(x) \!= \text{int}\!\left( \text{diff}( F(x) ) \right)= \int_0^x \left( \frac{d}{dt} F(t) \right) \ dt = \int_0^x \! f(t) \ dt = F(x) + C.

\]

Indeed, the //fundamental theorem of calculus//,

tells us that the derivative and integral are //inverse operations//,

so we also have:

\[

f(x) \!= \text{diff}\!\left( \text{int}( f(x) ) \right)

= \frac{d}{dx}\left[\int_0^x f(t) dt\right]

= \frac{d}{dx}\left[ F(x) - F(0) \right]

= f(x).

\]

Formulas

Riemann Sum

The Riemann sum is a good way to define the integral from first principles. We will break up the area under the curve into many little strips of height varying according to $f(x)$. To obtain the total area, we sum up all the areas of the rectangles. We will discuss Riemann sums in the next section, but first we look at the properties of integrals.

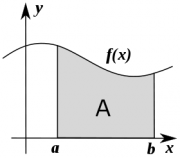

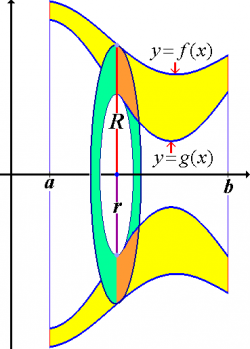

Area under the curve

The value of an integral corresponds to the area $A$,

under the curve $f(x)$ between $x=a$ and $x=b$:

\[

A(a,b) = \int_a^b f(x) \; dx.

\]

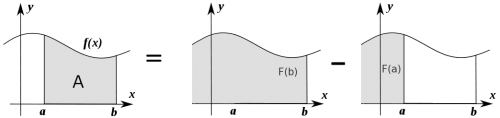

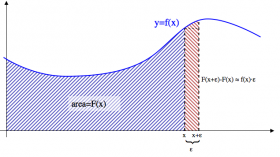

For certain functions it is possible to find an anti-derivative function $F(\tau)$, which describes the “running total” of the area under the curve starting from some arbitrary left endpoint and going all the way until $t=\tau$. We can compute the area under $f(t)$ between $a$ and $b$ by looking at the change in $F(\tau)$ between $a$ and $b$. \[ A(a,b) = F(b) - F(a). \]

We can illustrate the reasoning behind the above formula graphically:

The area $A(a,b)$ is equal to the “running total” until $x=b$

minus the running total until $x=a$.

Indefinite integral

The problem of finding the anti-derivative is also called integration. We say that we are finding an indefinite integral, because we haven't defined the limits $x_i$ and $x_f$.

So an integration problem is one in which you are given the $f(x)$, and you have to find the function $F(x)$. For example, if $f(x)=3x^2$, then $F(x)=x^3$. This is called “finding the integral of $f(x)$”.

Definite integrals

A definite integral specifies the function to integrate as well as the limits of integration $x_i$ and $x_f$: \[ \int_{x_i=a}^{x_f=b} f(x) \; dx = \int_{a}^{b} f(x) \; dx. \]

To find the value of the definite integral first calculate the indefinite integral (the antiderivative): \[ F(x) = \int f(x)\; dx, \] and then use it to compute the area as the difference of $F(x)$ at the two endpoints: \[ A(a,b) = \int_{x=a}^{x=b} f(x) \; dx = F(b) - F(a) \equiv F(x)\bigg|_{x=a}^{x=b}. \]

Note the new “vertical bar” notation: $g(x)\big\vert_{\alpha}^\beta=g(\beta)-g(\alpha)$, which is shorthand notation to denote the expression to the left evaluated at the top limit minus the same expression evaluated at the bottom limit.

Example

What is the value of the integral $\int_a^b x^2 \ dx$? We have \[ \int_a^b x^2 dx = \frac{1}{3}x^3\bigg|_{x=a}^{x=b} = \frac{1}{3}(b^3-a^3). \]
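You can convince yourself that the formula is right by comparing it with a brute-force sum of thin strips. This is a sketch under my own assumptions: the limits $a=1$, $b=2$ and the number of strips are arbitrary choices, and the strip heights are taken at the left edge of each strip.

```python
def F(x):
    # Antiderivative of x^2.
    return x ** 3 / 3


def area_exact(a, b):
    return F(b) - F(a)


def area_strips(a, b, n=100_000):
    # Sum of n thin rectangles of width eps and height (left edge)^2.
    eps = (b - a) / n
    return sum(eps * (a + k * eps) ** 2 for k in range(n))


a, b = 1.0, 2.0  # example limits (an assumption)
print(area_exact(a, b), area_strips(a, b))
```

Both numbers should be close to $\frac{1}{3}(2^3-1^3)=\frac{7}{3}$, and the strip sum gets closer as the number of strips grows.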

Signed area

If $a < b$ and $f(x) > 0$, then the area \[ A(a,b) = \int_{a}^{b} f(x) \ dx, \] will be positive.

However if we swap the limits of integration, in other words we start at $x=b$ and integrate backwards all the way to $x=a$, then the area under the curve will be negative! This is because $dx$ will always consist of tiny negative steps. Thus we have that: \[ A(b,a) = \int_{b}^{a} f(x) \ dx = - \int_{a}^{b} f(x) \ dx = - A(a,b). \] In all expressions involving integrals, if you want to swap the limits of integration, you have to add a negative sign in front of the integral.

The area could also come out negative if we integrate a negative function from $a$ to $b$. In general, if $f(x)$ is above the $x$ axis in some places these will be positive contributions to the total area under the curve, and places where $f(x)$ is below the $x$ axis will count as negative contributions to the total area $A(a,b)$.
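The sign rules are easy to observe numerically. The sketch below uses $f(x)=\sin(x)$ as an example of my own choosing and a hypothetical helper `strip_sum` that adds up thin strips. When $b<a$ the strip width comes out negative, which is exactly the "tiny negative steps" idea.

```python
import math


def strip_sum(f, a, b, n=10_000):
    # Height taken at the start of each strip; width is negative when b < a.
    width = (b - a) / n
    return sum(f(a + k * width) for k in range(n)) * width


forward = strip_sum(math.sin, 0.0, math.pi)              # f > 0, a < b
backward = strip_sum(math.sin, math.pi, 0.0)             # limits swapped
below_axis = strip_sum(math.sin, math.pi, 2 * math.pi)   # f < 0 here
full_period = strip_sum(math.sin, 0.0, 2 * math.pi)      # contributions cancel

print(forward, backward, below_axis, full_period)
```

Swapping the limits flips the sign, the part of the curve below the $x$ axis contributes a negative amount, and over a full period the positive and negative contributions cancel.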

Additivity

The integral from $a$ to $b$ plus the integral from $b$ to $c$ is equal to the integral from $a$ to $c$: \[ A(a,b) + A(b,c) = \int_a^b f(x) \; dx + \int_b^c f(x) \; dx = \int_a^c f(x) \; dx = A(a,c). \]

Linearity

Integration is a linear operation: \[ \int [\alpha f(x) + \beta g(x)]\; dx = \alpha \int f(x)\; dx + \beta \int g(x)\; dx, \] for arbitrary constants $\alpha, \beta$.

Recall that this was true for differentiation: \[ [\alpha f(x) + \beta g(x)]' = \alpha f'(x) + \beta g'(x), \] so we can say that the operations of calculus as a whole are linear operations.

The integral as a function

So far we have looked only at definite integrals where the limits of integration were constants $x_i=a$ and $x_f=b$, and so the integral was a number $A(a,b)$.

More generally, we can have one (or more) variable integration limits. For example we can have $x_i=a$ and $x_f=x$. Recall that the area under the curve $f(x)$ is, by definition, computed as a difference of the anti-derivative function $F(x)$ evaluated at the limits: \[ A(x_i,x_f) = A(a,x) = F(x) - F(a). \]

The expression $A(a,x)$ is a bit misleading as a function name since it looks like both $a$ and $x$ are variable when in fact $a$ is a constant parameter, and only $x$ is the variable. Let's call it $A_a(x)$ instead. \[ A_a(x) = \int_a^x f(t) \; dt = F(x) - F(a). \]

Two observations. First, note that $A_a(x)$ and $F(x)$ differ only by a constant, so in fact the anti-derivative is the integral up to a constant which is usually not important. Second, note that because the variable $x$ appears in the upper limit of the expression, I had to use a dummy variable $t$ inside the integral. If we don't use a different variable, we could confuse the running variable inside the integral, with the limit of integration.

Fundamental theorem of calculus

Let $f(x)$ be a continuous function, and let $F(x)$ be its antiderivative on the interval $[a,b]$: \[ F(x) = \int_a^x f(t) \; dt, \] then, the derivative of $F(x)$ is equal to $f(x)$: \[ F'(x) = f(x), \] for any $x \in (a,b)$.

We see that differentiation and integration are inverse operations: \[ F(x) \!= \text{int}\left( \text{diff}( F(x) ) \right)= \int_0^x \left( \frac{d}{dt} F(t) \right) \; dt = \int_0^x f(t) \; dt = F(x) + C, \] \[ f(x) \!= \text{diff}\left( \text{int}( f(x) ) \right) = \frac{d}{dx}\left[\int_0^x f(t) dt\right] = \frac{d}{dx}\left[ F(x) - F(0) \right] = f(x). \]
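Here is a numerical sanity check of the second identity, $\frac{d}{dx}\int_0^x f(t)\,dt = f(x)$. It is a sketch with choices of my own: $f(t)=\cos(t)$, the point $x_0=1$, a strip sum to approximate the integral, and a finite difference to approximate the derivative. The helper names are hypothetical.

```python
import math


def area_from_zero(f, x, n=20_000):
    # Approximates the integral of f from 0 to x with n thin strips.
    width = x / n
    return sum(f(k * width) for k in range(n)) * width


def slope_of_area(f, x, h=1e-3):
    # Finite-difference estimate of d/dx of the area function.
    return (area_from_zero(f, x + h) - area_from_zero(f, x - h)) / (2 * h)


x0 = 1.0  # arbitrary test point (an assumption)
print(slope_of_area(math.cos, x0), math.cos(x0))
```

The rate at which the area grows at $x_0$ matches the height of the function at $x_0$, up to the error of the two approximations.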

We can think of the inverse operators $\frac{d}{dt}$ and $\int\cdot dt$ symbolically on the same footing as the other mathematical operations that you know about. The usual equation solving techniques can then be applied to solve equations which involve derivatives. For example, suppose that you want to solve for $f(t)$ in the equation \[ \frac{d}{dt} \; f(t) = 100. \] To get to $f(t)$ we must undo the $\frac{d}{dt}$ operation. We apply the integration operation to both sides of the equation: \[ \int \left(\frac{d}{dt}\; f(t)\right) dt = f(t) = \int 100\;dt = 100t + C. \] The solution to the equation $f'(t)=100$ is $f(t)=100t+C$ where $C$ is called the integration constant.

Gimme some of that

OK, enough theory. Let's do some anti-derivatives. But how does one do anti-derivatives? It's in the name, really. Derivative and anti. Whatever the derivative does, the integral must do the opposite. If you have: \[ F(x)=x^4 \qquad \overset{\frac{d}{dx} }{\longrightarrow} \qquad F'(x)=4x^3 \equiv f(x), \] then it must be that: \[ f(x)=4x^3 \qquad \overset{\ \int\!dx }{\longrightarrow} \qquad F(x)=x^4 + C. \] Each time you integrate, you will always get the answer up to an arbitrary additive constant $C$, which will always appear in your answers.

Let us look at some more examples:

- The integral of $\cos\theta$ is:

\[ \int \cos\theta \ d\theta = \sin\theta + C, \]

since $\frac{d}{d\theta}\sin\theta = \cos\theta$,

and similarly the integral for $\sin\theta$ is:

\[

\int \sin\theta \ d\theta = - \cos\theta + C,

\]

since $\frac{d}{d\theta}\cos\theta = - \sin\theta$.

* The integral of $x^n$ for any number $n \neq -1$ is:

\[

\int x^n \ dx = \frac{1}{n+1}x^{n+1} + C,

\]

since $\frac{d}{dx}\left[\frac{1}{n+1}x^{n+1}\right] = x^n$.

* The integral of $x^{-1}=\frac{1}{x}$ is

\[

\int \frac{1}{x} \ dx = \ln x + C,

\]

since $\frac{d}{dx}\ln x = \frac{1}{x}$.

I could go on but I think you get the point: all the derivative formulas you learned can be used in the opposite direction as an integral formula.

With limits now

What is the area under the curve $f(x)=\sin(x)$, between $x=0$ and $x=\pi$? First we take the anti-derivative \[ F(x) = \int \sin(x) \ dx = - \cos(x) + C. \] Now we calculate the difference between $F(x)$ at the end-point and $F(x)$ at the start-point: \[ \begin{align} A(0,\pi) & = \int_{x=0}^{x=\pi} \sin(x) \ dx \nl & = \underbrace{\left[ - \cos(x) + C \right]}_{F(x)} \bigg\vert_0^\pi \nl & = [- \cos\pi + C] - [- \cos(0) + C] \nl & = \cos(0) - \cos\pi \ \ = \ \ 1 - (-1) = 2. \end{align} \]

The constant $C$ does not appear in the answer, because it is in both the upper and the lower limits.
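If you want to see the constant cancel, try a few values of $C$. The values below are arbitrary choices of mine; any number works.

```python
import math


def F(x, C):
    # Antiderivative of sin(x) with integration constant C.
    return -math.cos(x) + C


areas = [F(math.pi, C) - F(0.0, C) for C in (0.0, 7.0, -123.4)]
print(areas)
```

Every entry of the list equals $2$ (up to floating-point rounding), no matter which $C$ we picked.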

What next

If integration is nothing more than backwards differentiation and you already know differentiation inside out from differential calculus, you might be wondering what you are going to do during an entire semester of integral calculus. For all intents and purposes, if you understood the conceptual material in this section, then you understand integral calculus. Give yourself a pat on the back: you are done.

The establishment, however, doesn't just want you to know the concepts of integral calculus, but also wants you to know how to apply them in the real world. Thus, you need not only understand, but also practice the techniques of integration. There are a bunch of techniques, which allow you to integrate complicated functions. For example, if I asked you to integrate $f(x)=\sin^2(x) = (\sin(x))^2$ from $0$ to $\pi$ and you look in the formula sheet you won't find a function $F(x)$ whose derivative equals $f(x)$. So how do we solve: \[ \int_0^\pi \sin^2(x) \ dx = ?. \] One way to approach this problem is to use the trigonometric identity which says that $\sin^2(x)=\frac{1-\cos(2x)}{2}$ so we will have \[ \int_0^\pi \! \sin^2(x) dx = \int_0^\pi \left[ \frac{1}{2} - \frac{1}{2}\cos(2x) \right] dx = \underbrace{ \frac{1}{2} \int_0^\pi 1 \ dx}_{T_1} - \underbrace{ \frac{1}{2} \int_0^\pi \cos(2x) \ dx }_{T_2}. \] The fact that we can split the integral into two parts, and factor out the constant $\frac{1}{2}$ comes from the fact that integration is linear.

Let's continue the calculation of our integral, where we left off: \[ \int_0^\pi \sin^2(x) \ dx = T_1 - T_2. \] The value of the integral in the first term is: \[ T_1 = \frac{1}{2} \int_0^\pi 1 \ dx = \frac{1}{2} x \bigg\vert_0^\pi = \frac{\pi-0}{2} =\frac{\pi}{2}. \] The value of the second term is \[ T_2 =\frac{1}{2} \int_0^\pi \cos(2x) \ dx = \frac{1}{4} \sin(2x) \bigg\vert_0^\pi = \frac{\sin(2\pi) - \sin(0) }{4} = \frac{0 - 0 }{4} = 0. \] Thus we find the final answer for the integral to be: \[ \int_0^\pi \sin^2(x) \ dx = T_1 - T_2 = \frac{\pi}{2} - 0 = \frac{\pi}{2}. \]
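When an integral takes a trick to solve, it is reassuring to check the answer numerically. The sketch below evaluates $T_1$ and $T_2$ from the formulas above and compares $T_1 - T_2$ with a brute-force sum of thin strips. The number of strips is an arbitrary choice of mine.

```python
import math

# The two terms, evaluated from their antiderivatives.
T1 = 0.5 * (math.pi - 0.0)
T2 = 0.25 * (math.sin(2 * math.pi) - math.sin(0.0))
by_formula = T1 - T2

# Brute-force check: add up thin strips of height sin^2(x).
n = 100_000
width = math.pi / n
by_strips = sum(math.sin(k * width) ** 2 for k in range(n)) * width

print(by_formula, by_strips, math.pi / 2)
```

All three numbers should agree to several decimal places.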

Do you see how integration can quickly get tricky? You need to learn all kinds of tricks to solve integrals. I will teach you all the necessary tricks, but to become proficient you can't just read: you have to practice the techniques. Promise me you will practice! As my student, I expect nothing less than a total ass kicking of the questions you will face on the final exam.

Riemann sum

We defined the integral operation $\int f(x)\;dx$ as the inverse operation of $\frac{d}{dx}$, but it is important to know how to think of the integral operation on its own. No course on calculus would be complete without a telling of the classical “rectangles story” of integral calculus.

Definitions

- $x \in \mathbb{R}$: the argument of the function.

- $f(x)$: a function $f \colon \mathbb{R} \to \mathbb{R}$.

- $x_i$: where the sum starts, i.e., some given point on the $x$ axis.

- $x_f$: where the sum stops.

- $A(x_i,x_f)$: Exact value of the area under the curve $f(x)$ from $x=x_i$ to $x=x_f$.

- $S_n(x_i,x_f)$: An approximation to the area $A$ in terms of $n$ rectangles.

- $s_k$: Area of $k$-th rectangle when counting from the left.

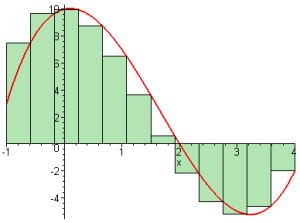

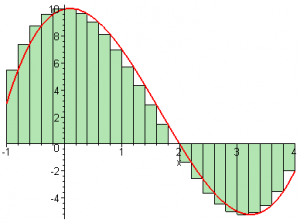

In the picture on the right, we are approximating the area under the function $f(x)=x^3-5x^2+x+10$ between $x_i=-1$ and $x_f=4$ using $n=12$ rectangles.

The sum of the areas of the 12 rectangles is what we call $S_{12}(-1,4)$.

We say that $S_{12}(-1,4) \approx A(-1,4)$.


Formulas

The main formula you need to know is that the combined area approximation is given by the sum of the areas of the little rectangles: \[ S_n = \sum_{k=1}^{n} s_k. \]

Each of the little rectangles has an area $s_k$ given by its height multiplied by its width. The height of each rectangle will vary, but the width is constant. Why constant? Riemann figured that having each rectangle with a constant width $\Delta x$ would make it very easy to calculate the approximation. The total length of the interval from $x_i$ to $x_f$ is $(x_f-x_i)$. If we divide this length into $n$ equally spaced segments, each segment will have a width $\Delta x$ given by: \[ \Delta x = \frac{x_f - x_i}{n}. \]

OK, we have the formula for the width figured out, let's see what the height will be for the $k$-th rectangle, where $k$ is our counter from left to right in the sequence of rectangles. The height of the function varies as we move along the $x$ axis. For the rectangles, we pick isolated “samples” of $f(x)$ for the following values \[ x_k = x_i + k\Delta x, \textrm{ for } k \in \{ 1, 2, 3, \ldots, n \}, \] all of them equally spaced $\Delta x$ apart.

The area of each rectangle is height times width: \[ s_k = f(x_i + k\Delta x)\Delta x. \]

Now, my dear students, I want you to stare at the above equation and do some simple calculations to check that you understand. There is no point in continuing if you are just taking my word for it. Verify that when $k=1$, the formula gives the area of the first little rectangle. Verify also that when $k=n$, the formula for $x_n$ gives the right value ($x_f$).
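Here is one way the check might go, using only the definitions of $\Delta x$ and $x_k$ given above. For $k=1$, the sample point is one step to the right of $x_i$, so \[ s_1 = f(x_i + \Delta x)\Delta x, \] which is the height $f(x_1)$ times the width $\Delta x$ of the leftmost rectangle. For $k=n$, we get \[ x_n = x_i + n\Delta x = x_i + n\,\frac{x_f - x_i}{n} = x_f. \] In the example from the picture, $\Delta x = \frac{4-(-1)}{12} = \frac{5}{12}$, so $x_1 = -1 + \frac{5}{12} = -\frac{7}{12}$ and $x_{12} = -1 + 12\cdot\frac{5}{12} = 4$.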

OK, let's put our formula for $s_k$ in the sum where it belongs. The Riemann sum approximation using $n$ rectangles is given by \[ S_n = \sum_{k=1}^{n} f(x_i + k\Delta x)\Delta x, \] where $\Delta x =\frac{x_f - x_i}{n}$.

Let us get back to the picture where we try to approximate the area under the curve $f(x)=x^3-5x^2+x+10$ by using 12 pieces.

For this scenario, the 12-rectangle approximation to the area under the curve is

\[

S_{12} = \sum_{k=1}^{12} f(x_i + k\Delta x)\Delta x = 11.802662.

\]

You shouldn't just trust me, though. Always check for yourself using live.sympy.org by typing in the following expressions:

>>> n=12.0; xk = -1 + k*5/n; sk = (xk**3-5*xk**2+xk+10)*(5/n);

>>> summation( sk, (k,1,n) )

11.802662...
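If you would rather not rely on live.sympy.org, the same sum can be computed in plain Python. This is a minimal sketch of the formula for $S_n$, not a definitive implementation; the function name `riemann_sum` and its parameters are names made up here for illustration.

```python
def riemann_sum(f, x_i, x_f, n):
    """Right-endpoint Riemann sum S_n of f from x_i to x_f using n rectangles."""
    dx = (x_f - x_i) / n          # width of each rectangle
    total = 0.0
    for k in range(1, n + 1):     # k = 1, 2, ..., n
        x_k = x_i + k * dx        # sample point for the k-th rectangle
        total += f(x_k) * dx      # s_k = height times width
    return total


def f(x):
    return x**3 - 5*x**2 + x + 10


S12 = riemann_sum(f, -1, 4, 12)
print(round(S12, 6))
```

The loop follows the formula term by term: each pass adds the area $s_k$ of one rectangle.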

More is better

Who cares though? This is such a crappy approximation! You can clearly see that some rectangles lie outside of the curve (overestimates), and some are too far inside (underestimates). You might be wondering why I wasted so much of your time to achieve such a lousy approximation. We have not been wasting our time. You see, the Riemann sum formula $S_n$ gets better and better as you cut the region into smaller and smaller rectangles.

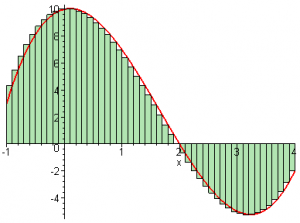

With $n=25$, we get a more fine-grained approximation in which the sum of the rectangles is given by:

\[

S_{25} = \sum_{k=1}^{25} f(x_i + k\Delta x)\Delta x = 12.4.

\]

Then for $n=50$ we get:

\[

S_{50} = 12.6625.

\]


For $n=100$ the sum of the rectangles' areas is starting to look pretttttty much like the area under the function.

The calculation gives us $S_{100} = 12.790625$.


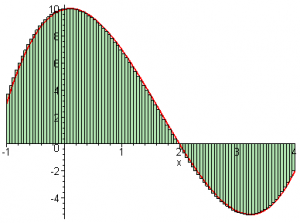

For $n=1000$ we get $S_{1000} = 12.9041562$ which is very close to the actual value of the area under the curve: \[ A(-1,4) = 12.91666\ldots \]

You see, in the long run, when $n$ gets really large, the rectangle approximation (Riemann sum) can be made arbitrarily good. Imagine you cut the region into $n=10000$ rectangles: wouldn't $S_{10000}(-1,4)$ be a pretty accurate approximation of the actual area $A(-1,4)$?
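To watch the approximation improve, you can tabulate $S_n$ and the remaining error for increasing $n$. The sketch below is plain Python; the helper `riemann_sum` is a hypothetical name, and the exact area is written as the fraction $\frac{155}{12} = 12.91666\ldots$, which is the value of $A(-1,4)$ quoted above.

```python
def riemann_sum(f, x_i, x_f, n):
    """Right-endpoint Riemann sum of f from x_i to x_f using n rectangles."""
    dx = (x_f - x_i) / n
    return sum(f(x_i + k * dx) * dx for k in range(1, n + 1))


def f(x):
    return x**3 - 5*x**2 + x + 10


A = 155 / 12   # exact area A(-1,4) = 12.91666...

errors = {}
for n in [12, 25, 50, 100, 1000, 10000]:
    S_n = riemann_sum(f, -1, 4, n)
    errors[n] = A - S_n                     # how far S_n still is from A
    print(f"n={n:6d}  S_n={S_n:.7f}  error={errors[n]:.7f}")
```

For this particular function, the error column shrinks roughly in proportion to $1/n$: ten times more rectangles buys about one more correct digit.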

Integral

The fact that you can approximate the area under the curve with a bunch of rectangles is what integral calculus is all about. Instead of mucking about with bigger and bigger values of $n$, mathematicians go right away for the kill and make $n$ go to infinity.

In the limit of $n \to \infty$, you can get arbitrarily close approximations to the area under the curve. All this time, that which we were calling $A(-1,4)$ was actually the “integral” of $f(x)$ between $x=-1$ and $x=4$, or written mathematically: \[ A(-1,4) \equiv \int_{-1}^4 f(x)\;dx \equiv \lim_{n \to \infty} S_{n} = \lim_{n \to \infty} \sum_{k=1}^{n} f(x_i + k\Delta x)\Delta x. \]
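The limit in this definition can also be taken symbolically. The following is a sketch assuming SymPy is installed locally: it keeps $n$ as a symbol, asks SymPy for a closed-form expression for $S_n$, and then lets $n \to \infty$. The variable names are chosen here for illustration.

```python
from sympy import symbols, summation, limit, oo, expand, Rational

k, n = symbols('k n', integer=True, positive=True)

dx = 5 / n                                   # Delta x = (x_f - x_i)/n with x_i=-1, x_f=4
xk = -1 + k*dx                               # sample point x_k = x_i + k*Delta x
sk = expand((xk**3 - 5*xk**2 + xk + 10)*dx)  # area s_k of the k-th rectangle

S_n = summation(sk, (k, 1, n))               # closed form of S_n in terms of n
A = limit(S_n, n, oo)                        # let the number of rectangles go to infinity

print(S_n)
print(A)
```

The value printed for the limit should agree with $A(-1,4) = 12.91666\ldots$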

While it is not computationally practical to make $n \to \infty$, we can convince ourselves that the approximation becomes better and better as $n$ becomes larger. For example, the approximation using $n=1\,000\,000$ rectangles is accurate to the fourth decimal place, as can be verified using the following commands on live.sympy.org:

>>> n=1000000.0; xk = -1 + k*5/n; sk = (xk**3-5*xk**2+xk+10)*(5/n);

>>> summation( sk, (k,1,n) )

12.9166541666563

>>> integrate( x**3-5*x**2+x+10, (x,-1,4) ).evalf()

12.9166666666667

In practice, when we want to compute the area under the curve, we don't use Riemann sums. There are formulas for directly calculating the integrals of functions. In fact, you already know the integration formulas: they are simply the derivative formulas used in the opposite direction. In the next section we will discuss the derivative-integral inverse relationship in more detail.

Links

[ Riemann sum wizard ]

http://mathworld.wolfram.com/RiemannSum.html

Fundamental theorem of calculus

Though it may not be apparent at first, the study of derivatives (Calculus I) and the study of integrals (Calculus II) are intimately related. Differentiation and integration are inverse operations.

You have previously studied the inverse relationship for functions. Recall that for any bijective function $f$ (a one-to-one relationship) there exists an inverse function $f^{-1}$ which undoes the effects of $f$: \[ (f^{-1}\!\circ f) (x) \equiv f^{-1}(f(x)) = x, \] and \[ (f \circ f^{-1}) (y) \equiv f(f^{-1}(y)) = y. \] The circle $\circ$ stands for composition of functions, i.e., first you apply one function and then you apply the second function. When you apply a function followed by its inverse to some input you get back the original input.
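As a quick refresher, here is a small sketch of the two composition identities in Python. The function $f(x)=2x+1$ and its inverse are examples picked here for illustration; they do not come from the text above.

```python
def f(x):
    return 2*x + 1          # an example bijective function on the reals


def f_inv(y):
    return (y - 1) / 2      # its inverse: undo the +1, then undo the *2


for value in [-3, 0, 2.5, 10]:
    assert f_inv(f(value)) == value   # (f_inv o f)(x) = x
    assert f(f_inv(value)) == value   # (f o f_inv)(y) = y

print("both compositions return the original input")
```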

The integral is the “inverse operation” to the derivative. If you perform the integral operation followed by the derivative operation on some function, you will get back the same function. This is stated more formally as the Fundamental Theorem of Calculus.

Statement

Let $f(x)$ be a continuous function and let $F(x)$ be its antiderivative on the interval $[a,b]$: \[ F(x) = \int_a^x f(t) \; dt, \] then, the derivative of $F(x)$ is equal to $f(x)$: \[ F'(x) = f(x), \] for any $x \in (a,b)$.

Thus, we see that differentiation is the inverse operation of integration. We obtained $F(x)$ by integrating $f(x)$. If we then take the derivative of $F(x)$ we get back to $f(x)$. It works the other way too. If you take the derivative of a function and then integrate the result, you get back to the original function (up to a constant). Differential calculus and integral calculus are two sides of the same coin. If you understand this fact, then you understand something very deep about calculus.

Note that $F(x)$ is not a unique anti-derivative. We can add an arbitrary constant $C$ to $F(x)$ and it will still satisfy the above conditions since the derivative of a constant is zero.

Formulas

If you are given some function $f(x)$, take its integral, and then take the derivative of the result, you will get back the same function: \[ \left(\frac{d}{dx} \circ \int dx \right) f(x) \equiv \frac{d}{dx} \int_a^x f(t) dt = f(x). \] Alternatively, you can first take the derivative, and then take the integral, and you will get back the function (up to a constant): \[ \left( \int dx \circ \frac{d}{dx}\right) f(x) \equiv \int_a^x f'(t) dt = f(x) - f(a). \]
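Both formulas can be checked on a concrete function with SymPy. The sketch below assumes a local SymPy installation and uses the cubic polynomial from the Riemann sum section as a test case; any other well-behaved function would do.

```python
from sympy import symbols, integrate, diff, simplify

x, t, a = symbols('x t a')


def f(u):
    return u**3 - 5*u**2 + u + 10


# Integrate first, then differentiate: should give back f(x)
F = integrate(f(t), (t, a, x))
int_then_diff = diff(F, x)

# Differentiate first, then integrate: should give f(x) - f(a)
f_prime = diff(f(t), t)
diff_then_int = integrate(f_prime, (t, a, x))

print(simplify(int_then_diff - f(x)))            # 0 means the two sides agree
print(simplify(diff_then_int - (f(x) - f(a))))   # 0 means the two sides agree
```

Note how the dummy variable `t` is used inside the integrals while `x` appears only as the upper limit.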

Note that we had to use a dummy variable $t$ inside the integral since $x$ is used in the limit. Indeed, all integrals are functions of their limits and the inner variable is not important: we could write $\int_a^x f(y)\;dy$ or $\int_a^x f(z)\;dz$ or even $\int_a^x f(\xi)\;d\xi$ and the answer for all of these will be $F(x)-F(a)$.

Discussion

As a consequence of the Fundamental theorem, you can reuse all your knowledge of differential calculus to solve integrals.

Example: Reverse engineering

Suppose you are asked to find this integral: \[ \int x^2 dx. \] Using the Fundamental theorem, we can rephrase this question as the search for some function $F(x)$ such that \[ F'(x) = x^2. \] Now since you remember your derivative formulas well, you will guess right away that $F(x)$ must contain an $x^3$ term. This is because you get back a quadratic term when you take the derivative of a cubic term. So we must have $F(x)=cx^3$, for some constant $c$. We must pick the constant that makes this work out: \[ F'(x) = 3cx^2 = x^2, \] therefore $c=\frac{1}{3}$ and the integral is: \[ \int x^2 dx = \frac{1}{3}x^3 + C. \] Did you see what just happened? We were able to take an integral using only derivative formulas and “reverse engineering”. You can check that, indeed, $\frac{d}{dx}\left[\frac{1}{3}x^3\right] = x^2$.
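The same reverse engineering can be handed to a computer. Here is a minimal sketch, assuming SymPy is available: it writes down the guess $F(x)=cx^3$, imposes the condition $F'(x)=x^2$, and solves for the unknown constant. The names `condition` and `solutions` are chosen here for illustration.

```python
from sympy import symbols, diff, solve, Eq

x, c = symbols('x c')

F = c * x**3                      # the guess: a cubic term with unknown constant c
condition = Eq(diff(F, x), x**2)  # require F'(x) = x^2
solutions = solve(condition, c)   # solve for the constant c

print(solutions)
```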

You can also use the Fundamental theorem to check your answers.

Example: Integral verification

Suppose a friend tells you that

\[

\int \ln(x) dx = x\ln(x) - x + C,

\]

but he is a shady character and you don't trust him. How can you check his answer? If you have a smartphone handy, you can check on live.sympy.org, but what if you just have pen and paper? If $x\ln(x) - x$ is really the antiderivative of $\ln(x)$, then by the Fundamental theorem of calculus, if we take the derivative we should get back $\ln(x)$. Let's check:

\[

\frac{d}{dx}\!\left[ x\ln(x) - x \right] = \underbrace{\frac{d}{dx}\!\left[x\right]\ln(x)+ x \left[\frac{d}{dx} \ln(x) \right]}_{\text{product rule} } - \frac{d}{dx}\left[ x \right] = 1\ln(x) + x\frac{1}{x} - 1 = \ln(x).

\]

OK, so your friend is correct.
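For completeness, this is roughly what the computer version of the check could look like. It is a sketch assuming SymPy; note that SymPy writes the natural logarithm as `log`, and that the symbol is declared positive here because $\ln(x)$ is only defined for $x>0$.

```python
from sympy import symbols, log, diff, simplify

x = symbols('x', positive=True)   # ln(x) is only defined for x > 0

candidate = x*log(x) - x          # the friend's proposed antiderivative
derivative = diff(candidate, x)   # differentiate it, as the theorem suggests

print(derivative)
print(simplify(derivative - log(x)))   # 0 means the friend's answer checks out
```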

Proof of the Fundamental theorem

There exists an unspoken rule in mathematics which states that if the word theorem appears in your writing, it has to be followed by the word proof. We therefore have to look into the proof of the Fundamental Theorem of Calculus (FTC). It is not that important that you understand the details of the proof, but I still recommend that you read this section for your general math culture. If you are in a rush though, feel free to skip it.

Before we get to the proof of the FTC, let me first introduce the squeezing principle, which will be used in the proof. Suppose you have three functions $f, \ell$, and $u$, such that: \[ \ell(x) \leq f(x) \leq u(x) \qquad \text{ for all } x. \] We say that $\ell(x)$ is a lower bound on $f(x)$ since its graph is always below that of $f(x)$. Similarly $u(x)$ is an upper bound on $f(x)$. Whatever the value of $f(x)$ is, we know that it is in between that of $\ell(x)$ and $u(x)$.