<texit info> author=Ivan Savov title=Minireference backgroundtext=off </texit>

MATH and PHYSICS

The MATH and PHYSICS minireference is a comprehensive course on Calculus, Mechanics, Linear algebra, Electricity and Magnetism. All the important topics are explained in an intuitive and concise manner so that you, the student, can learn more efficiently. When there are only a couple of days left before the exam, what would you rather read: 400 pages of a regular textbook or 50 pages of the minireference?


Math fundamentals

As soon as the keyword “math” comes up in a conversation, people start to feel uneasy. There are a number of common strategies that people use to escape this subject of conversation. The most common approach is to say something like “I always hated math” or “I am terrible at math”, which is a clear social cue that a change of subject is requested. Another approach is to be sympathetic to the idea of mathematics, so long as it appears in the third person: “she solved the equation” is fine, but “I solved the equation” is unthinkable. The usual justification for this “mathematics pour les autres” (mathematics for others) approach is that math is highly specialized knowledge with no real value for the general audience. A variant of the above is the belief that a special kind of brain is required in order to do math.

Mathematical knowledge is actually really cool. Knowing math is like having analytic superpowers. You can use the power of abstraction to see the math behind any real-world situation. And once in the math world, you can jot down some numbers and functions on a piece of paper and calculate the answer. Unfortunately, this is not the image that most people have of mathematics. Math is usually taught with a lot of focus placed on the mechanical steps. Mindless number crunching and following steps without understanding what they mean is not cool. If this is how you learned the basic ideas of math, I can't blame you for hating it, as it is kind of boring.

Often, my students ask me to review some basic notion from high school math that they need for a more advanced topic. This chapter is a collection of short review articles that cover many useful topics from high school math.

Topics math

This chapter should help you learn most of the useful concepts from the high school math curriculum, and in particular all the prerequisite topics for University-level math and physics courses.

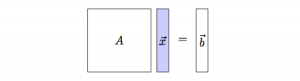

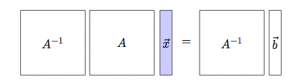

Solving equations

Most math skills boil down to being able to manipulate and solve equations. To solve an equation means to find the value of the unknown in the equation.

Check this shit out: \[ x^2-4=45. \]

To solve the above equation is to answer the question “What is $x$?” More precisely, we want to find the number which can take the place of $x$ in the equation so that the equality holds. In other words, we are asking \[ \text{"Which number times itself minus four gives 45?"} \]

That is quite a mouthful, don't you think? To remedy this verbosity, mathematicians often use specialized mathematical symbols. The problem is that these specialized symbols confuse people. Sometimes even the simplest concepts are inaccessible if you don't know what the symbols mean.

What are your feelings about math, dear reader? Are you afraid of it? Do you have anxiety attacks because you think it will be too difficult for you? Chill! Relax my brothers and sisters. There is nothing to it. Nobody can magically guess what the solution is immediately. You have to break the problem down into simpler steps.

To find $x$, we can manipulate the original equation until we transform it into a different equation (as true as the first) that looks like this: \[ x = \text{just some numbers}. \]

That's what it means to solve. The equation is solved because you could type the numbers on the right-hand side of the equation into a calculator and get the exact value of $x$.

To get $x$, all you have to do is make the right manipulations on the original equation to get it to the final form. The only requirement is that the manipulations you make transform one true equation into another true equation.

Before we continue our discussion, let us take the time to clarify what the equality symbol $=$ means. It means that everything to the left of $=$ is equal to everything to the right of $=$. To keep this equality statement true, whatever you do to the left side, you must also do to the right side.

In our example from earlier, the first simplifying step will be to add the number four to both sides of the equation: \[ x^2-4 +4 =45 +4, \] which simplifies to \[ x^2 =49. \] You must agree that the expression looks simpler now. How did I know to do this operation? I was trying to “undo” the effects of the operation $-4$. We undo an operation by applying its inverse. In the case where the operation is subtraction of some amount, the inverse operation is the addition of the same amount.

Now we are getting closer to our goal, namely to isolate $x$ on one side of the equation and have just numbers on the other side. What is the next step? Well, if you know about functions and their inverses, then you know that the inverse of squaring ($x^2$) is taking the square root $\sqrt{\ }$, like this: \[ \sqrt{x^2} = \sqrt{49}. \] Notice that I applied the inverse operation on both sides of the equation. If we didn't do the same thing on both sides, we would be breaking the equality!

We are done now, since we have isolated $x$ with just numbers on the other side: \[ x = \pm 7. \]

What is up with the $\pm$ symbol? It means that both $x=7$ and $x=-7$ satisfy the above equation. Seven squared is 49, and so is $(-7)^2 = 49$ because two negatives cancel out.
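The two manipulations above (add four, then undo the square) can be replayed numerically. The short Python sketch below is only an illustration: it mirrors each step and then substitutes both candidate values back into the original equation to confirm that the equality holds.

```python
import math

# Original equation: x**2 - 4 = 45
rhs = 45

# Step 1: undo "- 4" by adding 4 to both sides, giving x**2 = 49
rhs = rhs + 4

# Step 2: undo the square by taking the square root of both sides.
# math.sqrt returns only the nonnegative root, so we add the negative one by hand.
root = math.sqrt(rhs)
solutions = [root, -root]

# Check: substitute each candidate back into the original equation.
for x in solutions:
    print(x, x**2 - 4 == 45)
```

Note that a calculator's (or Python's) square root button only ever gives the positive root; the $\pm$ has to be supplied by you.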

If you feel comfortable with the notions of high school math and could have solved the equation $x^2-4=45$ on your own, then you should consider skipping ahead to Chapter 2. If, on the other hand, you are wondering how the squiggle killed the power two, then this chapter is for you! In the next sections we will review all the essential concepts from high school math that you will need for the rest of the book. First, let me tell you about the different kinds of numbers.

Numbers

We will start the exposition like a philosophy paper and define precisely what we are going to be talking about. At the beginning of all matters we have to define the players in the world of math: numbers.

Definitions

Numbers are the basic objects which you can type into a calculator and which you use to calculate things. Mathematicians like to classify the different kinds of number-like objects into sets:

- The Naturals: $\mathbb{N} = \{0,1,2,3,4,5,6,7, \ldots \}$,

- The Integers: $\mathbb{Z} = \{\ldots, -3,-2,-1,0,1,2,3 , \ldots \}$,

- The Rationals: $\mathbb{Q} = \{-1,0,0.125,1,1.5, \frac{5}{3}, \frac{22}{7}, \ldots \} $,

- The Reals: $\mathbb{R} = \{-1,0,1,e,\pi, -1.539..,\ 4.94.., \ \ldots \}$,

- The Complex numbers: $\mathbb{C} = \{ -1, 0, 1, i, 1+i, 2+3i, \ldots \}$.

These categories of numbers should be somewhat familiar to you. Think of them as neat classification labels for everything that you would normally call a number. Each item in the above list is a set. A set is a collection of items of the same kind. Each collection has a name and a precise definition. We don't need to go into the details of sets and set notation for our purposes, but you have to be aware of the different categories. Note also that each of the sets in the above list contains all the sets above it.

Why do you need so many different sets of numbers? The answer is partly historical and partly mathematical. Each set of numbers is associated with more and more advanced mathematical problems.

The simplest kind of numbers are the natural numbers $\mathbb{N}$, which are sufficient for all your math needs if all you are going to do is count things. How many goats? Five goats here and six goats there so the total is 11. The sum of any two natural numbers is also a natural number.

However, as soon as you start to use subtraction (the inverse operation of addition), you start to run into negative numbers, which are numbers outside of the set of natural numbers. If the only mathematical operations you will ever use are addition and subtraction then the set of integers $\mathbb{Z} = \{ \ldots, -2, -1, 0, 1, 2, \ldots \}$ would be sufficient. Think about it. Any integer plus or minus any other integer is still an integer.

You can do a lot of interesting math with integers. There is an entire field in math called number theory which deals with integers. However, if you restrict yourself to integers you would be limiting yourself somewhat. You can't use the notion of 2.5 goats for example. You would get totally confused by the menu at Rotisserie Romados which offers $\frac{1}{4}$ of a chicken.

If you want to use division in your mathematical calculations then you will need the rationals $\mathbb{Q}$. The rationals are the set of quotients of two integers: \[ \mathbb{Q} = \{ \text{ all } z \text{ such that } z=\frac{x}{y}, x \text{ is in } \mathbb{Z}, y \text{ is in } \mathbb{N}, y \neq 0 \}. \] You can add, subtract, multiply and divide rational numbers and the result will always be a rational number. However even rationals are not enough for all of math!
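Python's standard-library `fractions.Fraction` type stores a number exactly as a quotient of two integers, so it is a convenient way to see this closure property in action. This is a minimal sketch; the two fractions are arbitrary choices taken from the list of rationals above.

```python
from fractions import Fraction

a = Fraction(5, 3)
b = Fraction(22, 7)

# Each result is again an exact quotient of two integers (a rational number).
results = {
    "a + b": a + b,
    "a - b": a - b,
    "a * b": a * b,
    "a / b": a / b,
}
for name, value in results.items():
    print(name, "=", value)
```

The printed values are $\frac{101}{21}$, $-\frac{31}{21}$, $\frac{110}{21}$ and $\frac{35}{66}$: no operation ever leaves the rationals (except division by zero, which `Fraction` refuses).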

In geometry, we can obtain quantities like $\sqrt{2}$ (the diagonal of a square with side 1) and $\pi$ (the ratio between a circle's circumference and its diameter), which are irrational. There are no integers $x$ and $y$ such that $\sqrt{2}=\frac{x}{y}$; therefore, $\sqrt{2}$ is not part of $\mathbb{Q}$. We say that $\sqrt{2}$ is irrational. An irrational number has an infinitely long decimal expansion that never settles into a repeating pattern. For example, $\pi = 3.14159265358979..$, where the dots indicate that the decimal expansion of $\pi$ continues all the way to infinity.

If you add the irrational numbers to the rationals you get all the useful numbers, which we call the set of real numbers $\mathbb{R}$. The set $\mathbb{R}$ contains the integers, the fractions $\mathbb{Q}$, as well as irrational numbers like $\sqrt{2}=1.4142135..$. You will see that using the reals you can compute pretty much anything you want. From here on in the text, if I say number I will mean an element of the set of real numbers $\mathbb{R}$.

The only thing you can't do with the reals is take the square root of a negative number—you need the complex numbers for that. We defer the discussion on $\mathbb{C}$ until Chapter 3.

Operations on numbers

Addition

You can add and subtract numbers. I will assume you are familiar with this kind of stuff. \[ 2+5=7,\ 45+56=101,\ 65-66=-1,\ 9999 + 1 = 10000,\ \ldots \]

The visual way to think of addition is the number line. Adding numbers is like adding sticks together: the resulting stick has length equal to the sum of the two constituent sticks.

Addition is commutative, which means that $a+b=b+a$. It is also associative, which means that if you have a long summation like $a+b+c$ you can compute it in any order $(a+b)+c$ or $a+(b+c)$ and you will get the same answer.

Subtraction is the inverse operation of addition.

Multiplication

You can also multiply numbers together. \[ ab = \underbrace{a+a+\cdots+a}_{b \ times}=\underbrace{b+b+\cdots+b}_{a \ times}. \] Note that multiplication can be defined in terms of repeated addition.
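To make the repeated-addition idea concrete, here is a small sketch. The function name `multiply_by_repeated_addition` is hypothetical, and it assumes the second argument is a natural number (you can't add something to itself 2.5 times with a loop).

```python
def multiply_by_repeated_addition(a, b):
    """Multiply a by a natural number b by adding a to itself b times."""
    total = 0
    for _ in range(b):
        total += a
    return total

# Both readings of the formula give the same product.
print(multiply_by_repeated_addition(4, 3))  # 4 + 4 + 4
print(multiply_by_repeated_addition(3, 4))  # 3 + 3 + 3 + 3
```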

The visual way to think about multiplication is through the concept of area. The area of a rectangle of base $a$ and height $b$ is equal to $ab$. A rectangle whose height is equal to its base is a square, which is why we call $aa=a^2$ “$a$ squared.”

Multiplication of numbers is also commutative $ab=ba$, and associative $abc=(ab)c=a(bc)$. In modern notation, no special symbol is used to denote multiplication; we simply put the two factors next to each other and say that the multiplication is implicit. Some other ways to denote multiplication are $a\cdot b$, $a\times b$ and, on computer systems, $a*b$.

Division

Division is the inverse of multiplication. \[ a/b = \frac{a}{b} = \text{ one } b^{th} \text{ of } a. \] Whatever $a$ is, you need to divide it into $b$ equal pieces and take one such piece. Some texts denote division by $a\div b$.

Note that you cannot divide by $0$. Try it on your calculator or computer. It will say “error: divide by zero”, because it simply doesn't make sense. What would it mean to divide something into zero equal pieces?
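Computers refuse too. In Python, dividing by zero raises a `ZeroDivisionError`. The helper below, `safe_divide`, is a hypothetical name used only for illustration; it catches that error and returns `None` instead of a number.

```python
def safe_divide(a, b):
    """Return a / b, or None when b is zero (division by zero is undefined)."""
    try:
        return a / b
    except ZeroDivisionError as err:
        print("error:", err)
        return None

print(safe_divide(10, 4))  # 2.5
print(safe_divide(10, 0))  # prints an error message, then None
```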

Exponentiation

Very often you have to multiply things together many times. We call that exponentiation and denote that with a superscript: \[ a^b = \underbrace{aaa\cdots a}_{b\ times}. \]

We can also have negative exponents. The negative in the exponent does not mean “subtract”, but rather “divide by”: \[ a^{-b}=\frac{1}{a^b}=\frac{1}{\underbrace{aaa\cdots a}_{b\ times}}. \]

An exponent which is a fraction means that it is some sort of square-root-like operation: \[ a^{\frac{1}{2}} \equiv \sqrt{a} \equiv \sqrt[2]{a}, \qquad a^{\frac{1}{3}} \equiv \sqrt[3]{a}, \qquad a^{\frac{1}{4}} \equiv \sqrt[4]{a} = a^{\frac{1}{2}\frac{1}{2}}=\left(a^{\frac{1}{2}}\right)^{\frac{1}{2}} = \sqrt{\sqrt{a}}. \] Square root $\sqrt{x}$ is the inverse operation of $x^2$. Similarly, for any $n$ we define the function $\sqrt[n]{x}$ (the $n$th root of $x$) to be the inverse function of $x^n$.

It is worth clarifying what “taking the $n$th root” means and what this operation can be used for. The $n$th root of $a$ is a number which, when multiplied together $n$ times, will give $a$. So for example a cube root satisfies \[ \sqrt[3]{a} \sqrt[3]{a} \sqrt[3]{a} = \left( \sqrt[3]{a} \right)^3 = a = \sqrt[3]{a^3}. \] Do you see now why $\sqrt[3]{x}$ and $x^3$ are inverse operations?

The fractional exponent notation makes the meaning of roots much more explicit: \[ \sqrt[n]{a} \equiv a^{\frac{1}{n}}, \] which means that the $n$th root is one $n$th of a number with respect to multiplication. Thus, if we want the whole number, we have to multiply the number $a^{\frac{1}{n}}$ by itself $n$ times: \[ \underbrace{a^{\frac{1}{n}}a^{\frac{1}{n}}a^{\frac{1}{n}}a^{\frac{1}{n}} \cdots a^{\frac{1}{n}}a^{\frac{1}{n}}}_{n\ times} = \left(a^{\frac{1}{n}}\right)^n = a^{\frac{n}{n}} = a^1 = a. \] Multiplying $a^{\frac{1}{n}}$ by itself $n$ times produces the number with exponent one; therefore, the inverse operation of $\sqrt[n]{x}$ is $x^n$.

The commutative law of multiplication $ab=ba$ implies that we can see any fraction $\frac{a}{b}$ in two different ways $\frac{a}{b}=a\frac{1}{b}=\frac{1}{b}a$. First we multiply by $a$ and then divide the result by $b$, or first we divide by $b$ and then we multiply the result by $a$. This means that when we have a fraction in the exponent, we can write the answer in two equivalent ways: \[ a^{\frac{2}{3} }=\sqrt[3]{a^2} = (\sqrt[3]{a})^2, \qquad a^{-\frac{1}{2}}=\frac{1}{a^{\frac{1}{2}}} = \frac{1}{\sqrt{a}}, \qquad a^{\frac{m}{n}} = \left(\sqrt[n]{a}\right)^m = \sqrt[n]{a^m}. \]

Make sure the above notation makes sense to you. As an exercise, try to compute $5^{\frac{4}{3}}$ on your calculator and check that you get around 8.54987973.. as an answer.
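If you don't have a calculator handy, Python's `**` operator works the same way. This sketch computes $5^{\frac{4}{3}}$ in the three equivalent ways described above; because of floating-point rounding, the three results may differ in the last few digits, so they are compared with `math.isclose` rather than `==`.

```python
import math

a = 5
direct = a ** (4 / 3)                  # a^(4/3)
root_then_power = (a ** (1 / 3)) ** 4  # (cube root of a) to the 4th
power_then_root = (a ** 4) ** (1 / 3)  # cube root of (a to the 4th)

agree = math.isclose(direct, root_then_power) and math.isclose(direct, power_then_root)
print(direct, root_then_power, power_then_root, agree)
```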

Operator precedence

There is a standard convention for the order in which mathematical operations have to be performed. The three basic operations have the following precedence:

- Exponents and roots.

- Products and divisions.

- Additions and subtractions.

This means that the expression $5\times3^2+13$ is interpreted as “first take the square of $3$, then multiply by $5$ and then add $13$.” If you want the operations to be carried out in a different order, say you wanted to multiply $5$ times $3$ first and then take the square you should use parentheses: $(5\times 3)^2 + 13$, which now shows that the square acts on $(5 \times 3)$ as a whole and not on $3$ alone.
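Programming languages use the same convention. In Python, `**` (exponent) binds tighter than `*`, which binds tighter than `+`, so the two expressions above evaluate as follows:

```python
default_order = 5 * 3**2 + 13   # 5 * 9 + 13
forced_order = (5 * 3)**2 + 13  # 15**2 + 13
print(default_order, forced_order)  # 58 238
```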

Other operations

We can define all kinds of operations on numbers. The above three are special since they have a very simple intuitive feel to them, but we can define arbitrary transformations on numbers. We call those functions. Before we learn about functions, let us talk about variables first.

Functions

Your function vocabulary determines how well you will be able to express yourself mathematically in the same way that your English vocabulary determines how well you can express yourself in English.

The purpose of the following pages is to embiggen your vocabulary a bit so you won't be caught with your pants down when the teacher tries to pull some trick on you at the final. I give you the minimum necessary, but I recommend you explore these functions on your own via Wikipedia and by plotting their graphs on Wolfram Alpha.

To “know” a function, you have to understand and connect several different aspects of the function. First, you have to know its mathematical properties (what does it do, what is its inverse) and, at the same time, have a good idea of its graph, i.e., what it looks like if you plot $x$ versus $f(x)$ in the Cartesian plane. It is also a really good idea to remember the function values for some important inputs.

Definition

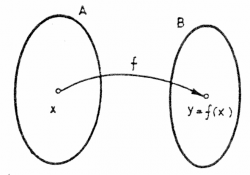

A function is a mathematical object that takes inputs and gives outputs. We use the notation \[ f \colon X \to Y, \] to denote a function from the set $X$ to the set $Y$. In this book, we will study mostly functions which take real numbers as inputs and give real numbers as outputs: $f\colon\mathbb{R} \to \mathbb{R}$.

We now define some technical terms used to describe the input and output sets.

- The domain of a function is the set of allowed input values.

- The image or range of the function $f$ is the set of all possible output values of the function.

- The codomain of a function is the set in which its outputs live, i.e., the type of outputs that the function has.

To illustrate the subtle difference between the image of a function and its codomain, let us consider the function $f(x)=x^2$. The quadratic function is of the form $f\colon\mathbb{R} \to \mathbb{R}$. The domain is $\mathbb{R}$ (it takes real numbers as inputs) and the codomain is $\mathbb{R}$ (the outputs are real numbers too); however, not all outputs are possible. Indeed, the image of the function $f$ consists only of the nonnegative numbers $\mathbb{R}_+ = \{ y \in \mathbb{R} \mid y \geq 0 \}$. Note that the word “range” is also sometimes used to refer to the function codomain.

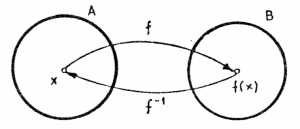

A function is not a number; it is a mapping from numbers to numbers. For a given input $x$, we denote by $f(x)$ the output value of $f$ for that input. Here is a graphical representation of a function with domain $A$ and codomain $B$.

The function corresponds to the arrow in the above picture.

We say that “$f$ maps $x$ to $y=f(x)$” and use the following terminology to classify the type of mapping that a function performs:

- A function is one-to-one or injective if it maps different inputs to different outputs.

- A function is onto or surjective if it covers the entire output set, i.e., if the image of the function is equal to the function codomain.

- A function is bijective if it is both injective and surjective. In this case $f$ is a one-to-one correspondence between the input set and the output set: for each of the possible outputs $y \in Y$ there exists (surjective part) exactly one input $x \in X$ such that $f(x)=y$ (injective part).

The term injective is a 1940s allusion inviting us to think of injective functions as some form of fluid flow. Since fluids cannot be compressed, the output space must be at least as large as the input space. A modern synonym for injective functions is to say that they are two-to-two. If you imagine two specks of paint inserted somewhere in the “input fluid”, then an injective function will lead to two distinct specks of paint in the “output fluid.” In contrast, functions which are not injective could map several different inputs to the same output. For example $f(x)=x^2$ is not injective since the inputs $2$ and $-2$ both get mapped to output value $4$.
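You can hunt for collisions like $f(2)=f(-2)$ with a short program. The sketch below uses a hypothetical helper `is_injective_on` that checks only a finite list of inputs, so it can prove that a function is not injective (by finding two inputs with the same output) but passing the check on a sample does not prove injectivity on all of $\mathbb{R}$.

```python
def is_injective_on(f, inputs):
    """Return True if f maps the given distinct inputs to distinct outputs."""
    outputs = [f(x) for x in inputs]
    return len(set(outputs)) == len(set(inputs))

sample = [-3, -2, -1, 0, 1, 2, 3]
print(is_injective_on(lambda x: x**2, sample))  # False: 2 and -2 both give 4
print(is_injective_on(lambda x: 2**x, sample))  # True on this sample
```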

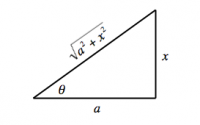

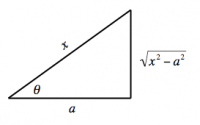

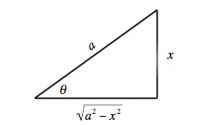

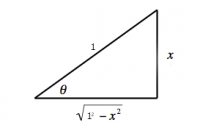

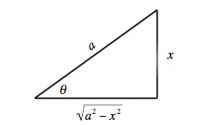

Function names

Mathematicians have defined the symbols $+$, $-$, $\times$ (usually omitted) and $\div$ (usually denoted as a fraction) for the most important functions used in everyday life. We also use the weird surd notation to denote the $n$th root $\sqrt[n]{\ }$ and the superscript notation to denote exponents. All other functions are identified and used by their name. If I want to compute the cosine of the angle $60^\circ$ (a function which describes the ratio between the length of the side adjacent to the angle in a right-angle triangle and the length of the hypotenuse), then I would write $\cos(60^\circ)$, which means that we want the value of the $\cos$ function for the input $60^\circ$.

Incidentally, for that specific angle the function $\cos$ has a nice value: $\cos(60^\circ)=\frac{1}{2}$. This means that seeing $\cos(60^\circ)$ somewhere in an equation is the same as seeing $0.5$ there. For other values of the function like say $\cos(33.13^\circ)$, you will need to use a calculator. A scientific calculator will have a $\cos$ button on it for that purpose.
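Programming languages provide the same function, with one catch worth knowing: Python's `math.cos` expects its input in radians, not degrees, so the angle has to be converted first with `math.radians`. This is a small sketch of both evaluations from the paragraph above.

```python
import math

# math.cos expects radians, so convert the angles from degrees first.
cos60 = math.cos(math.radians(60))     # about 0.5 (up to floating-point rounding)
cos33 = math.cos(math.radians(33.13))  # no "nice" value; the computer just evaluates it
print(cos60, cos33)
```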

Handles on functions

When you learn about functions you learn about different “handles” onto these mathematical objects. Most often you will have the function equation, which is a precise way to calculate the output when you know the input. This is an important handle, especially when you will be doing arithmetic, but it is much more important to “feel” the function.

How do you get a feel for some function?

One way is to look at a list of input-output pairs $\{ \{ \text{input}=x_1, \text{output}=f(x_1) \},$ $\{ \text{input}=x_2,$ $\text{output}=f(x_2) \},$ $\{ \text{input}=x_3, \text{output}=f(x_3) \}, \ldots \}$. A more compact notation for the input-output pairs is $\{ (x_1,f(x_1)),$ $(x_2,f(x_2)),$ $(x_3,f(x_3)), \ldots \}$. You can make a little table of values for yourself: pick some random inputs and record the output of the function in the second column: \[ \begin{align*} \textrm{input}=x \qquad &\rightarrow \qquad f(x)=\textrm{output} \nl 0 \qquad &\rightarrow \qquad f(0) \nl 1 \qquad &\rightarrow \qquad f(1) \nl 55 \qquad &\rightarrow \qquad f(55) \nl x_4 \qquad &\rightarrow \qquad f(x_4) \end{align*} \]

Apart from random numbers it is also generally a good idea to check the value of the function at $x=0$, $x=1$, $x=100$, $x=-1$ and any other important looking $x$ value.
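A table of values is easy to generate by computer. In this sketch the function $f(x)=x^2$ is an arbitrary example chosen for illustration; replace the body of `f` with any function you want to explore.

```python
def f(x):
    # Example function chosen for illustration; substitute any f you want to explore.
    return x**2

inputs = [0, 1, 55, 100, -1]
table = [(x, f(x)) for x in inputs]
for x, y in table:
    print(f"{x:>5} -> {y}")
```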

One of the best ways to feel a function is to look at its graph.

A graph is a curve on a piece of paper that passes through all the input-output pairs of the function.

What? What curve? What points?

OK, let's backtrack a little.

Imagine that you have a piece of paper on which you have drawn a coordinate system.

The horizontal axis, also called the abscissa, will be used to measure $x$. The vertical axis will be used to measure $f(x)$, but because writing out $f(x)$ all the time is long and tedious, we will invent a short single-letter alias to denote the output value of $f$ as follows: \[ y \equiv f(x) = \text{output}. \]

Now you can take each of the input-output pairs for the function $f$ and think of them as points $(x,y)$ in the coordinate system. Thus the graph of a function is a graphical representation of everything the function does. If you understand the simple “drawing” on this page, you will basically understand everything there is to know about the function.

Another way to feel functions is through the properties of the function: either the way it is defined, or its relation to other functions. This boils down to memorizing facts about the function and its relations to other functions. An example of a mathematical fact is $\sin(30^\circ)=\frac{1}{2}$. An example of a mathematical relation is the equation $\sin^2 x + \cos^2 x =1$, which is a link between the $\sin$ and the $\cos$ functions.

The last part may sound contrary to my initial promise that I would not make you memorize stuff for nothing. Well, this is not for nothing. The more you know about any function, the more “paths” you have in your brain that connect to that function. Real math knowledge is not memorization but the establishment of a graph of associations between different areas of knowledge in your brain. Each concept is a node in this graph, and each fact you know about this concept is an edge in the graph. Analytical thought is the usage of this graph to produce calculations and mathematical arguments (proofs). For example, knowing the fact $\sin(30^\circ)=\frac{1}{2}$ about $\sin$ and the relationship $\sin^2 x + \cos^2 x = 1$ between $\sin$ and $\cos$, you could show that $\cos(30^\circ)=\frac{\sqrt{3}}{2}$. Note that the notation $\sin^2(x)$ means $(\sin(x))^2$.
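Spelled out, that argument takes two lines. We solve the identity for $\cos^2(30^\circ)$, then take the square root, keeping the positive one because the cosine of an angle between $0^\circ$ and $90^\circ$ is positive:

\[ \begin{align*} \cos^2(30^\circ) &= 1 - \sin^2(30^\circ) = 1 - \left(\frac{1}{2}\right)^2 = \frac{3}{4}, \nl \cos(30^\circ) &= +\sqrt{\frac{3}{4}} = \frac{\sqrt{3}}{2}. \end{align*} \]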

To develop mathematical skills, it is therefore important to practice this path-building between related concepts by solving exercises and reading and writing mathematical proofs. My textbook can only show you the paths between the concepts, it is up to you to practice the exercises in the back of each chapter to develop the actual skills.

Example: Quadratic function

Consider the function from the real numbers ($\mathbb{R}$) to the real numbers ($\mathbb{R}$) \[ f \colon \mathbb{R} \to \mathbb{R} \] given by \[ f(x)=x^2+2x+3. \] The value of $f$ when $x=1$ is $f(1)=1^2+2(1)+3=1+2+3=6$. When $x=2$, we have $f(2)=2^2+2(2)+3=4+4+3=11$. What is the value of $f$ when $x=0$?
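If you want to double-check the arithmetic, the same function can be written in a couple of lines of Python. This sketch reproduces the two values computed above; evaluating `f(0)` is left to you.

```python
def f(x):
    """The quadratic function f(x) = x^2 + 2x + 3 from the example."""
    return x**2 + 2*x + 3

print(f(1))  # 6
print(f(2))  # 11
```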

Example: Exponential function

Consider the exponential function with base two: \[ f(x) = 2^x. \] This function is of crucial importance in computer systems. When $x=1$, $f(1)=2^1=2$. When $x$ is 2, we have $f(2)=2^2=4$. The function is therefore described by the following input-output pairs: $(0,1)$, $(1,2)$, $(2,4)$, $(3,8)$, $(4,16)$, $(5,32)$, $(6,64)$, $(7,128)$, $(8,256)$, $(9,512)$, $(10,1024)$, $(11, 2048)$, $(12,4096)$, etc. (RAM memory chips come in powers of two because the memory space is exponential in the number of “address lines” on the chip.) Some important input-output pairs for the exponential function are $(0,1)$, because by definition any nonzero number to the power 0 is equal to 1, and $(-1,\frac{1}{2^1}=\frac{1}{2})$, $(-2,\frac{1}{2^2}=\frac{1}{4})$, because a negative exponent tells you to divide by the base that many times instead of multiplying.
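The list of input-output pairs can be generated by a short program. This sketch uses Python's standard `fractions.Fraction` so that the negative exponents come out as exact fractions like $\frac{1}{4}$ instead of decimals; the helper name `two_to_the` is hypothetical.

```python
from fractions import Fraction

def two_to_the(x):
    """2**x as an exact Fraction, so negative exponents give exact 1/2, 1/4, ..."""
    return Fraction(2) ** x

pairs = [(x, two_to_the(x)) for x in range(-2, 13)]
for x, y in pairs:
    print(f"({x}, {y})")  # (-2, 1/4), (-1, 1/2), (0, 1), (1, 2), ..., (12, 4096)
```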

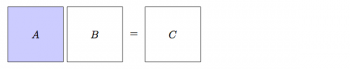

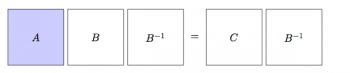

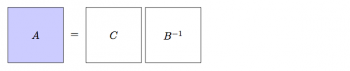

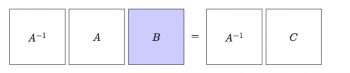

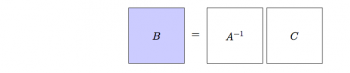

Function inverse

Recall that a bijective function is a one-to-one correspondence between the set of inputs and the set of output values. If $f$ is a bijective function, then there exists an inverse function $f^{-1}$, which performs the inverse mapping of $f$. Thus, if you start from some $x$, apply $f$ and then apply $f^{-1}$, you will get back to the original input $x$: \[ x = f^{-1}\!\left( \; f(x) \; \right). \]

This is represented graphically in the diagram on the right.


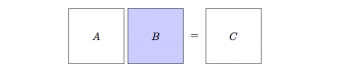

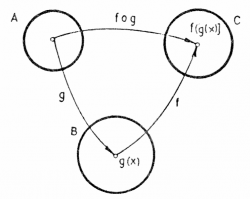

Function composition

We can combine two simple functions to build a more complicated function by chaining them together. The resulting function is denoted \[ z = f\!\circ\!g \, (x) \equiv f\!\left( \: g(x) \: \right). \]


The diagram on the left shows a function $g:A\to B$ acting on some input $x$ to produce an intermediary value $y \in B$, which is then input to the function $f:B \to C$ to produce the final output value $z = f(y) = f(g(x))$.

The composition of applying $g$ first followed by $f$ is a function of the form $f\circ g: A \to C$, defined through the equation $f\circ g(x) = f(g(x))$. Note that “first” in the context of function composition means the first function to touch the input.
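In a programming language, composition is just a function that builds a new function. The sketch below (the names `compose`, `add_one` and `double` are hypothetical) shows why the order matters: $f\circ g$ and $g \circ f$ are generally different functions.

```python
def compose(f, g):
    """Return the composite function x -> f(g(x)): g touches the input first."""
    return lambda x: f(g(x))

def add_one(x):
    return x + 1

def double(x):
    return 2 * x

f_after_g = compose(add_one, double)  # x -> 2x + 1
g_after_f = compose(double, add_one)  # x -> 2(x + 1)
print(f_after_g(3), g_after_f(3))     # 7 8
```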

Discussion

In the next sections, we will look into the different functions that you will be dealing with. What we present here is far from an exhaustive list, but if you get a handle on these, you will be able to solve any problem a teacher can throw at you.

Links

[ Tank game where you specify the function of the projectile trajectory ]

http://www.graphwar.com/play.html


[ Gallery of function graphs ]

http://mpmath.googlecode.com/svn/gallery/gallery.html

Functions and their inverses

As we saw in the section on solving equations, the ability to “undo” functions is a key skill to have when solving equations.

Example

Suppose you have to solve for $x$ in the equation \[ f(x) = c, \] where $f$ is some function and $c$ is some constant. Our goal is to isolate $x$ on one side of the equation, but there is the function $f$ standing in our way.

The way to get rid of $f$ is to apply the inverse function (denoted $f^{-1}$) which will “undo” the effects of $f$. We find that: \[ f^{-1}\!\left( f(x) \right) = x = f^{-1}\left( c \right). \] By definition the inverse function $f^{-1}$ does the opposite of what the function $f$ does so together they cancel each other out. We have $f^{-1}(f(x))=x$ for any number $x$.

Provided everything is kosher (the function $f^{-1}$ must be defined for the input $c$), the manipulation we made above was valid and we have obtained the answer $x=f^{-1}( c)$.


Note the new notation $f^{-1}$ for the function inverse that we introduced in the above example. This notation is borrowed from the notion of an “inverse number”: multiplication by the number $d^{-1}$ is the inverse operation of multiplication by the number $d$, since $d^{-1}dx=1x=x$. In the case of functions, however, the negative one exponent does not mean the inverse number $\frac{1}{f(x)}=(f(x))^{-1}$ but the function inverse, i.e., the number $f^{-1}(y)$ is equal to the number $x$ such that $f(x)=y$.

You have to be careful because sometimes applying the inverse leads to multiple solutions. For example, the function $f(x)=x^2$ maps two input values ($x$ and $-x$) to the same output value $x^2=f(x)=f(-x)$. The inverse function of $f(x)=x^2$ is $f^{-1}(x)=\sqrt{x}$, but both $x=+\sqrt{c}$ and $x=-\sqrt{c}$ would be solutions to the equation $x^2=c$. A shorthand notation to indicate the solutions for this equation is $x=\pm\sqrt{c}$.
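A careful solver has to handle all three cases: two solutions, one solution ($c=0$), or no real solutions ($c<0$). Here is a minimal sketch; `solve_square` is a hypothetical helper, and it relies on `math.sqrt`, which returns only the nonnegative root.

```python
import math

def solve_square(c):
    """Return all real solutions of x**2 = c, as a sorted list."""
    if c < 0:
        return []          # no real number squared is negative
    if c == 0:
        return [0.0]       # +0 and -0 are the same solution
    root = math.sqrt(c)    # the nonnegative root only
    return [-root, root]   # the +/- pair

print(solve_square(49))  # [-7.0, 7.0]
print(solve_square(2))
print(solve_square(-4))  # []
```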

Formulas

Here is a list of common functions and their inverses:

\[ \begin{align*} \textrm{function } f(x) & \ \Leftrightarrow \ \ \textrm{inverse } f^{-1}(x) \nl x+2 & \ \Leftrightarrow \ \ x-2 \nl 2x & \ \Leftrightarrow \ \ \frac{1}{2}x \nl -x & \ \Leftrightarrow \ \ -x \nl x^2 & \ \Leftrightarrow \ \ \pm\sqrt{x} \nl 2^x & \ \Leftrightarrow \ \ \log_{2}(x) \nl 3x+5 & \ \Leftrightarrow \ \ \frac{1}{3}(x-5) \nl a^x & \ \Leftrightarrow \ \ \log_a(x) \nl \exp(x)=e^x & \ \Leftrightarrow \ \ \ln(x)=\log_e(x) \nl \sin(x) & \ \Leftrightarrow \ \ \arcsin(x)=\sin^{-1}(x) \nl \cos(x) & \ \Leftrightarrow \ \ \arccos(x)=\cos^{-1}(x) \end{align*} \]

The function-inverse relationship is symmetric: if $g$ is the inverse of $f$, then $f$ is the inverse of $g$. This means that if you see a function on one side of the above table (no matter which), then its inverse is on the opposite side.
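You can spot-check the table numerically: applying a function and then its inverse should return the original input. This sketch tests each row at the single point $x=0.5$, which lies inside the ranges where $\sqrt{\ }$, $\arcsin$ and $\arccos$ undo their partners (these inverses only return the positive root or an angle in their principal range). The row for $a^x$ uses $a=3$ as an arbitrary choice.

```python
import math

# (function, inverse) pairs from the table above.
inverse_pairs = {
    "x+2":  (lambda x: x + 2,     lambda y: y - 2),
    "2x":   (lambda x: 2 * x,     lambda y: y / 2),
    "-x":   (lambda x: -x,        lambda y: -y),
    "x^2":  (lambda x: x**2,      math.sqrt),               # positive root only
    "2^x":  (lambda x: 2**x,      math.log2),
    "3x+5": (lambda x: 3 * x + 5, lambda y: (y - 5) / 3),
    "3^x":  (lambda x: 3**x,      lambda y: math.log(y, 3)),  # a^x with a = 3
    "e^x":  (math.exp,            math.log),
    "sin":  (math.sin,            math.asin),
    "cos":  (math.cos,            math.acos),
}

x = 0.5
for name, (f, f_inv) in inverse_pairs.items():
    print(name, math.isclose(f_inv(f(x)), x))
```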

Example

Let's say your teacher doesn't like you and, right away on the first day of classes, gives you a serious equation and wants you to find $x$: \[ \log_5\left(3 + \sqrt{6\sqrt{x}-7} \right) = 34+\sin(5.5)-\Psi(1). \] Do you see now what I meant when I said that the teacher doesn't like you?

First note that it doesn't matter what $\Psi$ is, since $x$ is on the other side of the equation. We can just keep copying $\Psi(1)$ from line to line and throw the ball back to the teacher in the end: “My answer is in terms of your variables, dude. You have to figure out what the hell $\Psi$ is since you brought it up in the first place.” The same goes for $\sin(5.5)$. If you don't have a calculator, don't worry about it. We will just keep the expression $\sin(5.5)$ instead of trying to find its numerical value. In general, you should try to work with variables as much as possible and leave the numerical computations for the last step.

OK, enough beating about the bush. Let's just find $x$ and get it over with! On the right side of the equation, we have the sum of a bunch of terms with no $x$ in them, so we will just leave them as they are. On the left-hand side, the outermost function is a logarithm base $5$. Cool. No problem. Looking in the table of inverse functions, we find that the exponential function is the inverse of the logarithm: $a^x \Leftrightarrow \log_a(x)$. To get rid of the $\log_5$, we must apply the exponential function base five to both sides: \[ 5^{ \log_5\left(3 + \sqrt{6\sqrt{x}-7} \right) } = 5^{ 34+\sin(5.5)-\Psi(1) }, \] which simplifies to \[ 3 + \sqrt{6\sqrt{x}-7} = 5^{ 34+\sin(5.5)-\Psi(1) }, \] since $5^x$ canceled the $\log_5 x$.

From here on, it is going to be like Bruce Lee walking into a place with lots of bad guys. Addition of $3$ is undone by subtracting $3$ on both sides: \[ \sqrt{6\sqrt{x}-7} = 5^{ 34+\sin(5.5)-\Psi(1) } - 3. \] To undo a square root, you take the square: \[ 6\sqrt{x}-7 = \left(5^{ 34+\sin(5.5)-\Psi(1) } - 3\right)^2. \] Add $7$ to both sides: \[ 6\sqrt{x} = \left(5^{ 34+\sin(5.5)-\Psi(1) } - 3\right)^2+7. \] Divide by $6$: \[ \sqrt{x} = \frac{1}{6}\left(\left(5^{ 34+\sin(5.5)-\Psi(1) } - 3\right)^2+7\right), \] and then square again to get the final answer: \[ \begin{align*} x &= \left[\frac{1}{6}\left(\left(5^{ 34+\sin(5.5)-\Psi(1) } - 3\right)^2+7\right) \right]^2. \end{align*} \]
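The same "digging towards $x$" can be written as a short function that applies the inverse operations in the order used above. We don't know what $\Psi(1)$ is, so the sketch treats the whole right-hand side as a single number, rhs. The function name dig_for_x and the test value rhs = 2.0 are hypothetical, chosen only for illustration. The last line plugs the answer back into the left-hand side as a check.

```python
import math

def lhs(x):
    """Left-hand side of the equation: log_5(3 + sqrt(6*sqrt(x) - 7))."""
    return math.log(3 + math.sqrt(6 * math.sqrt(x) - 7), 5)

def dig_for_x(rhs):
    """Undo each function on the left-hand side, outermost first.

    Assumes every quantity we square is non-negative, so that
    squaring really undoes the square root.
    """
    value = 5 ** rhs      # undo log_5 with the exponential base 5
    value = value - 3     # undo "3 +"
    value = value ** 2    # undo the outer square root
    value = value + 7     # undo "- 7"
    value = value / 6     # undo "6 *"
    return value ** 2     # undo the inner square root

rhs = 2.0  # hypothetical stand-in for 34 + sin(5.5) - Psi(1)
x = dig_for_x(rhs)
print(x, lhs(x))  # lhs(x) should reproduce rhs
```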

Did you see what I was doing in each step? Next time a function stands in your way, hit it with its inverse, so that it knows not to ever challenge you again.

Discussion

The recipe I have outlined above is not universal. Sometimes $x$ isn't alone on one side. Sometimes $x$ appears in several places in the same equation, so you can't just work your way towards $x$ as shown above. You need other techniques for solving equations like that.

The bad news is that there is no general formula for solving complicated equations. The good news is that the above technique of “digging towards $x$” is sufficient for 80% of what you are going to be doing. You can get another 15% if you learn how to solve the quadratic equation: \[ ax^2 +bx + c = 0. \]

Solving third order equations $ax^3+bx^2+cx+d=0$ with pen and paper is also possible, but at this point you really might as well start using a computer to solve for the unknown(s).

There are all kinds of other equations which you can learn how to solve: equations with multiple variables, equations with logarithms, equations with exponentials, and equations with trigonometric functions. The principle of digging towards the unknown and applying the function inverse is very important so be sure to practice it.

Basic rules of algebra

It's important for you to know the general rules for manipulating numbers and variables (algebra) so we will do a little refresher on these concepts to make sure you feel comfortable on that front. We will also review some important algebra tricks like factoring and completing the square which are useful when solving equations.

When an expression contains multiple things added together, we call those things terms. Furthermore, terms are usually composed of many things multiplied together. If we can write a number $x$ as $x=abc$, we say that $x$ factors into $a$, $b$ and $c$. We call $a$, $b$ and $c$ the factors of $x$.

Given any four numbers $a,b,c$ and $d$, we can use the following algebra properties:

- Associative property: $a+b+c=(a+b)+c=a+(b+c)$ and $abc=(ab)c=a(bc)$.

- Commutative property: $a+b=b+a$ and $ab=ba$.

- Distributive property: $a(b+c)=ab+ac$.

We use the distributive property every time we expand a bracket. For example, $a(b+c+d)=ab + ac + ad$. The opposite operation of expanding is called factoring and consists of taking out the common parts of an expression to the front of a bracket: $ab+ac = a(b+c)$. We will discuss both of these operations in this section and illustrate what they are used for.

Expanding brackets

The distributive property is useful when you are dealing with polynomials: \[ (x+3)(x+2)=x(x+2) + 3(x+2)= x^2 +x2 +3x + 6. \] We can now use the commutative property on the second term $x2=2x$, and then combine the two $x$ terms into a single one to obtain \[ (x+3)(x+2)= x^2 + 5x + 6. \]

This calculation happens so often that it is a good idea to see it in a more abstract form: \[ (x+a)(x+b) = x(x+b) + a(x+b) = x^2 + (a+b)x + ab. \] The product of two linear terms (expressions of the form $x+?$) is equal to a quadratic expression. Furthermore, observe that the middle term on the right-hand side contains the sum of the two constants on the left-hand side, while the third term contains their product.

It is very common for people to get this wrong and write down false equations like $(x+a)(x+b)=x^2+ab$ or $(x+a)(x+b)=x^2+a+b$ or some variation of the above. You will never make such a mistake if you keep in mind the distributive property and expand the expression using a step-by-step approach. As a second example, consider the slightly more complicated algebraic expression and its expansion: \[ \begin{align*} (x+a)(bx^2+cx+d) &= x(bx^2+cx+d) + a(bx^2+cx+d) \nl &= bx^3+cx^2+dx + abx^2 +acx +ad \nl &= bx^3+ (c+ab)x^2+(d+ac)x +ad. \end{align*} \] Note how we grouped together all the terms which contain $x^2$ in one term and all the terms which contain $x$ in a second term. This is a common pattern when dealing with expressions which contain different powers of $x$.
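The step-by-step expansion can be mechanised. Store a polynomial as the list of its coefficients, lowest power first, so $x^2+5x+6$ becomes [6, 5, 1]. Multiplying two polynomials then means multiplying every term of the first by every term of the second (the distributive property) and adding up the products that land on the same power of $x$. The list representation and the name poly_mul are choices made for this sketch.

```python
def poly_mul(p, q):
    """Multiply polynomials given as coefficient lists, lowest power first."""
    result = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):        # term a*x^i of the first factor
        for j, b in enumerate(q):    # term b*x^j of the second factor
            result[i + j] += a * b   # contributes a*b*x^(i+j)
    return result

# (x+3)(x+2) = x^2 + 5x + 6
print(poly_mul([3, 1], [2, 1]))     # [6, 5, 1]

# (x+a)(bx^2+cx+d) with a=2, b=1, c=3, d=4 should give
# bx^3 + (c+ab)x^2 + (d+ac)x + ad = x^3 + 5x^2 + 10x + 8
print(poly_mul([2, 1], [4, 3, 1]))  # [8, 10, 5, 1]
```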

Example

Suppose we are asked to solve for $t$ in the following equation \[ 7(3 + 4t) = 11(6t - 4). \] The unknown $t$ appears on both sides of the equation so it is not immediately obvious how to proceed.

To solve for $t$ in the above equation, we have to bring all the $t$ terms to one side and all the constant terms to the other side. The first step towards this goal is to expand the two brackets to obtain \[ 21 + 28t = 66t - 44. \] Now we move things around to get all the $t$s on the right-hand side and all the constants on the left-hand side \[ 21 + 44 = 66t - 28t. \] We see that $t$ is contained in both terms on the right-hand side so we can rewrite the equation as \[ 21 + 44 = (66 - 28)t. \] The answer is now obvious $t = \frac{21 + 44}{66 - 28} = \frac{65}{38}$.
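Once both sides are expanded into the form $pt + q = rt + s$, the same moves always give $q - s = (r-p)t$. Here is a minimal sketch of that recipe using Python's exact Fraction type. The function name solve_linear is hypothetical.

```python
from fractions import Fraction

def solve_linear(p, q, r, s):
    """Solve p*t + q = r*t + s for t (requires p != r).

    Moving the t terms to the right and the constants to the left
    gives q - s = (r - p) * t.
    """
    if p == r:
        raise ValueError("no unique solution: the t terms cancel out")
    return Fraction(q - s, r - p)

# 7(3 + 4t) = 11(6t - 4) expands to 28t + 21 = 66t - 44
t = solve_linear(28, 21, 66, -44)
print(t)  # 65/38
```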

Factoring

Factoring means taking out some common part of a complicated expression so as to make it more compact. Suppose you are given the expression $6x^2y + 15x$ and you are asked to simplify it by “taking out” common factors. The expression has two terms, and when we split each term into its constituent factors we obtain \[ 6x^2y + 15x = (3)(2)(x)(x)y + (5)(3)x. \] We see that the factors $x$ and $3$ appear in both terms. This means we can factor them out to the front like this: \[ 6x^2y + 15x = 3x(2xy+5). \] The expression on the right is easier to read than the expression on the left, since it shows that the $3x$ part is common to both terms.

Here is another example of where factoring can help us simplify an expression: \[ 2x^2y + 2x + 4x = 2x(xy+1+2) = 2x(xy+3). \]
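Finding the common factor of a sum of monomials can also be done systematically. The numeric part is the greatest common divisor of the coefficients, and each variable appears with the smallest exponent it has in any term. The sketch below represents a term like $6x^2y$ as (6, {"x": 2, "y": 1}). This representation and the function name common_factor are assumptions made for illustration, and the sketch only handles integer coefficients.

```python
from functools import reduce
from math import gcd

def common_factor(terms):
    """Return (coefficient, exponents) of the largest common monomial factor.

    terms: list of (integer coefficient, {variable: exponent}) pairs.
    """
    coef = reduce(gcd, (c for c, _ in terms))
    variables = set().union(*(exps.keys() for _, exps in terms))
    exps = {}
    for v in sorted(variables):
        smallest = min(e.get(v, 0) for _, e in terms)  # absent variable = exponent 0
        if smallest > 0:
            exps[v] = smallest
    return coef, exps

# 6x^2y + 15x = 3x(2xy + 5)
print(common_factor([(6, {"x": 2, "y": 1}), (15, {"x": 1})]))  # (3, {'x': 1})
# 2x^2y + 2x + 4x = 2x(xy + 3)
print(common_factor([(2, {"x": 2, "y": 1}), (2, {"x": 1}), (4, {"x": 1})]))  # (2, {'x': 1})
```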

Quadratic factoring

When dealing with a quadratic function, it is often useful to rewrite it as a product of two factors. Suppose you are given the quadratic function $f(x)=x^2-5x+6$ and asked to describe its properties. What are the roots of this function, i.e., for what values of $x$ is this function equal to zero? For which values of $x$ is the function positive and for which values is it negative?

When looking at the expression $f(x)=x^2-5x+6$, the properties of the function are not immediately apparent. However, if we factor the expression $x^2-5x+6$, we will be able to see its properties more clearly. To factor a quadratic expression is to express it as a product of two factors: \[ f(x) = x^2-5x+6 = (x-2)(x-3). \] We can now see immediately that its roots are at $x_1=2$ and $x_2=3$. You can also see that, for $x>3$, the function is positive since both factors will be positive. For $x<2$ both factors will be negative, but a negative times a negative gives a positive, so the function will be positive overall. For values of $x$ such that $2<x<3$, the first factor will be positive and the second negative, so the overall function will be negative.
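You can spot-check both the factorization and the sign analysis by evaluating the two forms at a few points. The sample points below are arbitrary, with one chosen from each interval.

```python
def f(x):
    return x**2 - 5*x + 6

def f_factored(x):
    return (x - 2) * (x - 3)

# The two forms agree (checked here on a few sample points).
for x in [-1, 0, 2, 2.5, 3, 4, 10]:
    assert f(x) == f_factored(x)

# Sign of f on each of the three intervals x < 2, 2 < x < 3, x > 3.
for x in [0, 2.5, 4]:
    print(x, "positive" if f(x) > 0 else "negative" if f(x) < 0 else "zero")
```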

For some simple quadratics like the above one you can simply guess what the factors will be. For more complicated quadratic expressions, you need to use the quadratic formula. This will be the subject of the next section. For now let us continue with more algebra tricks.

Completing the square

Any quadratic expression $Ax^2+Bx+C$ can be written in the form $A(x-h)^2+k$. This is because all quadratic functions with the same quadratic coefficient are essentially shifted versions of each other. By completing the square we are making these shifts explicit. The value of $h$ is how much the function is shifted to the right and the value $k$ is the vertical shift.

Let's try to find the values of $A$, $h$ and $k$ for the quadratic expression $x^2+5x+6$: \[ x^2+5x+6 = A(x-h)^2+k = A(x^2-2hx + h^2) + k = Ax^2 - 2Ahx + Ah^2 + k. \]

By focussing on the quadratic terms on both sides of the equation we see that $A=1$, so we have \[ x^2+\underline{5x}+6 = x^2 \underline{-2hx} + h^2 + k. \] Next we look at the terms multiplying $x$ (underlined), and we see that $h=-2.5$, so we obtain \[ x^2+5x+\underline{6} = x^2 - 2(-2.5)x + \underline{(-2.5)^2 + k}. \] Finally, we pick a value of $k$ which would make the constant terms (underlined again) match \[ k = 6 - (-2.5)^2 = 6 - (2.5)^2 = 6 - \left(\frac{5}{2}\right)^2 = 6\times\frac{4}{4} - \frac{25}{4} = \frac{24 - 25}{4} = \frac{-1}{4}. \] This is how we complete the square, to obtain: \[ x^2+5x+6 = (x+2.5)^2 - \frac{1}{4}. \] The right-hand side in the above expression tells us that our function is equivalent to the basic function $x^2$, shifted $2.5$ units to the left, and $\frac{1}{4}$ units downwards. This would be really useful information if you ever had to draw this function, since it is easy to plot the basic graph of $x^2$ and then shift it appropriately.

It is important that you become comfortable with the procedure for completing the square outlined above. It is not very difficult, but it requires you to think carefully about the unknowns $h$ and $k$ and to choose their values appropriately. There is a simple rule you can remember for completing the square in an expression of the form $x^2+bx+c=(x-h)^2+k$: you have to use half of the coefficient of the $x$ term inside the bracket, i.e., $h=-\frac{b}{2}$. You can then work out both sides of the equation and choose $k$ so that the constant terms match. Take out a pen and a piece of paper now and verify that you can correctly complete the square in the following expressions $x^{2} - 6 x + 13=(x-3)^2 + 4$ and $x^{2} + 4 x + 1=(x + 2)^2 -3$.
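The matching procedure can be sketched in a few lines. It works for a general quadratic coefficient $A$: matching the $x$ terms gives $-2Ah = B$, and matching the constants gives $Ah^2 + k = C$. The sketch uses exact fractions so the answers come out as in the text. The function name complete_square is hypothetical.

```python
from fractions import Fraction

def complete_square(A, B, C):
    """Return (h, k) such that A*x^2 + B*x + C == A*(x - h)^2 + k."""
    A, B, C = Fraction(A), Fraction(B), Fraction(C)
    h = -B / (2 * A)      # from matching the x terms: -2*A*h = B
    k = C - A * h * h     # from matching the constants: A*h^2 + k = C
    return h, k

print(complete_square(1, 5, 6))    # (Fraction(-5, 2), Fraction(-1, 4))
print(complete_square(1, -6, 13))  # (Fraction(3, 1), Fraction(4, 1))
print(complete_square(1, 4, 1))    # (Fraction(-2, 1), Fraction(-3, 1))
```

Since $x-h$ appears in the bracket, $h=-\frac{5}{2}$ corresponds to $(x+2.5)^2$.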

Solving quadratic equations

What would you do if you were asked to find $x$ in the equation $x^2 = 45x + 23$? This is called a quadratic equation since it contains the unknown variable $x$ squared. The name comes from the Latin quadratus, which means square. Quadratic equations come up very often, so mathematicians came up with a general formula for solving these equations. We will learn about this formula in this section.

Before we can apply the formula, we need to rewrite the equation in the form \[ ax^2 + bx + c = 0, \] where we moved all the numbers and $x$s to one side and left only $0$ on the other side. This is called the standard form of the quadratic equation. For example, to get the expression $x^2 = 45x + 23$ into the standard form, we can subtract $45x+23$ from both sides of the equation to obtain $x^2 - 45x - 23 = 0$. What are the values of $x$ that satisfy this equation?

Claim

The solutions to the equation \[ ax^2 + bx + c = 0, \] are \[ x_1 = \frac{-b + \sqrt{b^2-4ac} }{2a} \ \ \text{ and } \ \ x_2 = \frac{-b - \sqrt{b^2-4ac} }{2a}. \]

Let us now see how this formula is used to solve the equation $x^2 - 45x - 23 = 0$. Finding the two solutions is a simple mechanical task of identifying $a$, $b$ and $c$ and plugging these numbers into the formula: \[ x_1 = \frac{45 + \sqrt{45^2-4(1)(-23)} }{2} = 45.5054\ldots, \] \[ x_2 = \frac{45 - \sqrt{45^2-4(1)(-23)} }{2} = -0.5054\ldots. \]
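The "plug $a$, $b$ and $c$ into the formula" step is easy to hand to a computer. Here is a minimal sketch that returns the two real solutions and refuses to continue when $b^2-4ac<0$. The function name quadratic_roots is hypothetical.

```python
import math

def quadratic_roots(a, b, c):
    """Real solutions (x1, x2) of a*x^2 + b*x + c = 0 via the quadratic formula."""
    disc = b * b - 4 * a * c
    if disc < 0:
        raise ValueError("b^2 - 4ac < 0: no real solutions")
    root = math.sqrt(disc)
    return (-b + root) / (2 * a), (-b - root) / (2 * a)

x1, x2 = quadratic_roots(1, -45, -23)
print(x1, x2)  # approximately 45.5054 and -0.5054
```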

Proof of claim

This is an important proof. You should know how to derive the quadratic formula in case your younger brother asks you one day to derive the formula from first principles. To derive this formula, we will use the completing-the-square technique which we saw in the previous section. Don't bail out on me now; the proof is only two pages.

Starting from the equation $ax^2 + bx + c = 0$, our first step will be to move $c$ to the other side of the equation \[ ax^2 + bx = -c, \] and then to divide by $a$ on both sides \[ x^2 + \frac{b}{a}x = -\frac{c}{a}. \]

Now we must complete the square on the left-hand side, which is to say we ask the question: what are the values of $h$ and $k$ for this equation to hold \[ (x-h)^2 + k = x^2 + \frac{b}{a}x = -\frac{c}{a}? \] To find the values for $h$ and $k$, we will expand the left-hand side to obtain $(x-h)^2 + k= x^2 -2hx +h^2+k$. We can now identify $h$ by looking at the coefficients in front of $x$ on both sides of the equation. We have $-2h=\frac{b}{a}$ and hence $h=-\frac{b}{2a}$.

So what do we have so far: \[ \left(x + \frac{b}{2a} \right)^2 = \left(x + \frac{b}{2a} \right)\!\!\left(x + \frac{b}{2a} \right) = x^2 + \frac{b}{2a}x + x\frac{b}{2a} + \frac{b^2}{4a^2} = x^2 + \frac{b}{a}x + \frac{b^2}{4a^2}. \] If we want to figure out what $k$ is, we just have to move that last term to the other side: \[ \left(x + \frac{b}{2a} \right)^2 - \frac{b^2}{4a^2} = x^2 + \frac{b}{a}x. \]

We can now continue with the proof where we left off \[ x^2 + \frac{b}{a}x = -\frac{c}{a}. \] We replace the left-hand side by the complete-the-square expression and obtain \[ \left(x + \frac{b}{2a} \right)^2 - \frac{b^2}{4a^2} = -\frac{c}{a}. \] From here on, we can use the standard procedure for solving equations. We put all the constants on the right-hand side \[ \left(x + \frac{b}{2a} \right)^2 = -\frac{c}{a} + \frac{b^2}{4a^2}. \] Next we take the square root of both sides. Since the square function maps both positive and negative numbers to the same value, this step will give us two solutions: \[ x + \frac{b}{2a} = \pm \sqrt{ -\frac{c}{a} + \frac{b^2}{4a^2} }. \] Let's take a moment to clean up the mess on the right-hand side a bit: \[ \sqrt{ -\frac{c}{a} + \frac{b^2}{4a^2} } = \sqrt{ -\frac{(4a)c}{(4a)a} + \frac{b^2}{4a^2} } = \sqrt{ \frac{- 4ac + b^2}{4a^2} } = \frac{\sqrt{b^2 -4ac} }{ 2a }. \]

Thus we have: \[ x + \frac{b}{2a} = \pm \frac{\sqrt{b^2 -4ac} }{ 2a }, \] which is just one step away from the final answer \[ x = \frac{-b}{2a} \pm \frac{\sqrt{b^2 -4ac} }{ 2a } = \frac{-b \pm \sqrt{b^2 -4ac} }{ 2a }. \] This completes the proof.

Alternative proof of claim

To have a proof we don't necessarily need to show the derivation of the formula as we did. The claim was that $x_1$ and $x_2$ are solutions. To prove the claim we could have simply plugged $x_1$ and $x_2$ into the quadratic equation and verified that we get zero. Verify on your own.
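One way to carry out that verification is sketched below. Instead of substituting each solution into the equation directly, it computes the sum and the product of the two claimed solutions: \[ x_1 + x_2 = \frac{-2b}{2a} = -\frac{b}{a}, \qquad x_1x_2 = \frac{b^2-(b^2-4ac)}{4a^2} = \frac{c}{a}. \] Therefore \[ a(x-x_1)(x-x_2) = a\left[x^2-(x_1+x_2)x+x_1x_2\right] = ax^2+bx+c, \] and the left-hand side is clearly zero when $x=x_1$ or $x=x_2$.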

Applications

The Golden Ratio

The golden ratio, usually denoted $\varphi=\frac{1+\sqrt{5}}{2}=1.6180339\ldots$ is a very important proportion in geometry, art, aesthetics, biology and mysticism. It comes about from the solution to the quadratic equation \[ x^2 -x -1 = 0. \]

Using the quadratic formula we get the two solutions: \[ x_1 = \frac{1+\sqrt{5}}{2} = \varphi, \qquad x_2 = \frac{1-\sqrt{5}}{2} = - \frac{1}{\varphi}. \]
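A quick numerical check confirms that $\varphi$ really satisfies $x^2-x-1=0$ and that the second solution equals $-\frac{1}{\varphi}$. The values agree up to floating-point rounding.

```python
import math

phi = (1 + math.sqrt(5)) / 2
x2 = (1 - math.sqrt(5)) / 2

print(phi)               # 1.618033988749895
print(phi**2 - phi - 1)  # essentially 0 (rounding error only)
print(x2, -1 / phi)      # the two numbers agree
```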

You can learn more about the various contexts in which the golden ratio appears from the excellent Wikipedia article on the subject. We will also see the golden ratio come up again several times in the remainder of the book.

Explanations

Multiple solutions

Often, we are interested in only one of the two solutions to the quadratic equation. It will usually be obvious from the context of the problem which of the two solutions should be kept and which should be discarded. For example, the time of flight of a ball thrown in the air from a height of $3$ meters with an initial velocity of $12$ meters per second is obtained by solving the quadratic equation $0=(-4.9)t^2+12t+3$. The two solutions of the quadratic equation are $t_1=-0.229$ and $t_2=2.678$. The first answer $t_1$ corresponds to a time in the past, so it must be rejected as invalid. The correct answer is $t_2$: the ball will hit the ground after $t=2.678$ seconds.
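Here is the ball example as a sketch. It solves $0=-\frac{g}{2}t^2+v_0t+h_0$ with the quadratic formula and keeps only the non-negative time. It assumes $g = 9.8$ (so that $\frac{g}{2}=4.9$ as in the text) and ignores air resistance. The function name time_of_flight is hypothetical.

```python
import math

def time_of_flight(h0, v0, g=9.8):
    """Landing time: the larger root of 0 = -(g/2) t^2 + v0 t + h0 (assumes h0 >= 0)."""
    a, b, c = -g / 2, v0, h0
    root = math.sqrt(b * b - 4 * a * c)
    t1 = (-b + root) / (2 * a)
    t2 = (-b - root) / (2 * a)
    return max(t1, t2)  # discard the negative time, which lies in the past

print(round(time_of_flight(3, 12), 3))  # 2.678
```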

Relation to factoring

In the previous section we discussed the quadratic factoring operation by which we could rewrite a quadratic function as the product of two terms, $f(x)=ax^2+bx+c=a(x-x_1)(x-x_2)$. The two numbers $x_1$ and $x_2$ are called the roots of the function: these are the points where the graph of $f(x)$ crosses or touches the $x$ axis.

Using the quadratic formula, you now have the ability to factor any quadratic expression that has real roots. Just use the quadratic formula to find the two solutions $x_1$ and $x_2$, and then rewrite the expression as $a(x-x_1)(x-x_2)$.

Some quadratic expressions cannot be factored, however. These correspond to quadratic functions whose graphs do not touch the $x$ axis. They have no real solutions (no real roots). There is a quick test you can use to check if a quadratic function $f(x)=ax^2+bx+c$ has roots (touches or crosses the $x$ axis) or doesn't have roots (never touches the $x$ axis). If $b^2-4ac>0$, then the function $f$ has two roots. If $b^2-4ac=0$, the function has only one root. This corresponds to the special case when the function touches the $x$ axis only at one point. If $b^2-4ac<0$, the function has no real roots. If you try to use the formula for finding the solutions, you will fail, because you cannot take the square root of a negative number within the real numbers. Think about it: how could you square a real number and obtain a negative number?
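The discriminant test and the factoring recipe fit together in one small function. It classifies $ax^2+bx+c$ by the sign of $b^2-4ac$ and, when real roots exist, returns them so that the expression can be written as $a(x-x_1)(x-x_2)$. The function name describe_quadratic is hypothetical.

```python
import math

def describe_quadratic(a, b, c):
    """Classify a*x^2 + b*x + c by its discriminant; return (kind, roots)."""
    disc = b * b - 4 * a * c
    if disc < 0:
        return "no real roots", ()
    x1 = (-b + math.sqrt(disc)) / (2 * a)
    x2 = (-b - math.sqrt(disc)) / (2 * a)
    kind = "two roots" if disc > 0 else "one (double) root"
    return kind, (x1, x2)

kind, (x1, x2) = describe_quadratic(2, -10, 12)
print(kind, f"2(x - {x1})(x - {x2})")   # two roots 2(x - 3.0)(x - 2.0)
print(describe_quadratic(1, -4, 4))     # ('one (double) root', (2.0, 2.0))
print(describe_quadratic(1, 0, 1))      # ('no real roots', ())
```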

Polynomials

The polynomials are a very simple and useful family of functions. For example, quadratic polynomials of the form $f(x) = ax^2 + bx +c$ often arise in the description of physical phenomena.

Definitions

- $x$: the variable

- $f(x)$: the polynomial. We sometimes denote polynomials $P(x)$ to distinguish them from a generic function $f(x)$.

- degree of $f(x)$: the largest power of $x$ that appears in the polynomial

- roots of $f(x)$: the values of $x$ for which $f(x)=0$

Polynomials

The most general polynomial of the first degree is a line $f(x) = mx + b$, where $m$ and $b$ are arbitrary constants.

The most general polynomial of second degree is $f(x) = a_2 x^2 + a_1 x + a_0$, where again $a_0$, $a_1$ and $a_2$ are arbitrary constants. We call $a_k$ the coefficient of $x^k$ since this is the number that appears in front of it.

By now you should be able to guess that a third degree polynomial will look like $f(x) = a_3 x^3 + a_2 x^2 + a_1 x + a_0$.

In general, a polynomial of degree $n$ has the equation \[ f(x) = a_n x^n + a_{n-1}x^{n-1} + \cdots + a_2 x^2 + a_1 x + a_0, \] or, if you want to use the summation notation, we can write it as \[ f(x) = \sum_{k=0}^n a_kx^k, \] where $\Sigma$ (the capital Greek letter sigma) stands for summation.
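The summation formula translates directly into code if we store the coefficients in a list with coeffs[k] $= a_k$. The sketch also shows Horner's rule, a standard rearrangement $a_0 + x(a_1 + x(a_2 + \cdots))$ that gives the same value with fewer multiplications. Horner's rule is not discussed in the text; it is included only for comparison.

```python
def poly_eval(coeffs, x):
    """Evaluate sum_{k=0}^{n} a_k x^k, where coeffs[k] = a_k."""
    return sum(a_k * x**k for k, a_k in enumerate(coeffs))

def poly_eval_horner(coeffs, x):
    """Same value via Horner's rule: a_0 + x(a_1 + x(a_2 + ... + x a_n))."""
    result = 0
    for a_k in reversed(coeffs):
        result = result * x + a_k
    return result

coeffs = [6, -5, 1]  # f(x) = x^2 - 5x + 6, degree len(coeffs) - 1 = 2
print(poly_eval(coeffs, 4), poly_eval_horner(coeffs, 4))  # 2 2
```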

Solving polynomial equations

Very often you will have to solve polynomial equations of the form \[ A(x) = B(x), \] where $A(x)$ and $B(x)$ are both polynomials. Remember that solving means finding the values of $x$ which make the equality true.

For example, say the revenue of your company, as a function of the number of products sold $x$, is given by $R(x)=2x^2 + 2x$, and the costs you incur to produce $x$ objects are $C(x)=x^2+5x+10$. A very natural question to ask is how much product you need to produce to break even, i.e., to make your revenue equal your costs, $R(x)=C(x)$. To find the break-even $x$, you will have to solve the following equation: \[ 2x^2 + 2x = x^2+5x+10. \]

This may seem complicated since there are $x$s all over the place, and it is not clear how to find the value of $x$ that makes this equation true. No worries though: we can turn this equation into the “standard form” and then use the quadratic formula. To do this, we will move all the terms to one side until we have just zero on the other side: \[ \begin{align} 2x^2 + 2x \ \ \ -x^2 &= x^2+5x+10 \ \ \ -x^2 \nl x^2 + 2x \ \ \ -5x &= 5x+10 \ \ \ -5x \nl x^2 - 3x \ \ \ -10 &= 10 \ \ \ -10 \nl x^2 - 3x -10 &= 0. \end{align} \]

Remember that if we do the same thing on both sides of the equation, it remains true. Therefore, the values of $x$ that satisfy \[ x^2 - 3x -10 = 0, \] namely $x=-2$ and $x=5$, will also satisfy \[ 2x^2 + 2x = x^2+5x+10, \] which was the original problem that we were trying to solve.

This “shuffling of terms” approach will work for any polynomial equation $A(x)=B(x)$. We can always rewrite it as some $C(x)=0$, where $C(x)$ is a new polynomial whose coefficients are the differences of the coefficients of $A$ and $B$. Don't worry about which side you move all the coefficients to, because $C(x)=0$ and $0=-C(x)$ have exactly the same solutions. Furthermore, the degree of the polynomial $C$ can be no greater than the larger of the degrees of $A$ and $B$.
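With polynomials stored as coefficient lists (lowest power first, an assumption of this sketch), the shuffling of terms is just a subtraction of lists. The sketch below reproduces the break-even example and then applies the quadratic formula to the standard form. Trailing zero coefficients are dropped, which is how the degree of $C$ can end up smaller than the degrees of $A$ and $B$. The name poly_sub is hypothetical.

```python
import math

def poly_sub(A, B):
    """Coefficients of A(x) - B(x), lowest power first."""
    n = max(len(A), len(B))
    A = A + [0] * (n - len(A))
    B = B + [0] * (n - len(B))
    diff = [a - b for a, b in zip(A, B)]
    while len(diff) > 1 and diff[-1] == 0:  # drop cancelled leading terms
        diff.pop()
    return diff

revenue = [0, 2, 2]    # R(x) = 2x^2 + 2x
cost = [10, 5, 1]      # C(x) = x^2 + 5x + 10
c0, c1, c2 = poly_sub(revenue, cost)   # x^2 - 3x - 10  ->  [-10, -3, 1]
disc = c1 * c1 - 4 * c2 * c0
roots = sorted([(-c1 - math.sqrt(disc)) / (2 * c2), (-c1 + math.sqrt(disc)) / (2 * c2)])
print(roots)  # [-2.0, 5.0]
```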

The form $C(x)=0$ is the standard form of a polynomial equation and, as you will see shortly, for polynomials of low degree there are formulas which you can use to find the solution(s).

Formulas

The formula for solving the polynomial equation $P(x)=0$ depends on the degree of the polynomial in question.

First

For first degree: \[ P_1(x) = mx + b = 0, \] the solution is $x=-b/m$. Just move $b$ to the other side and divide by $m$.

Second

For second degree: \[ P_2(x) = ax^2 + bx + c = 0, \] the solutions are $x_1=\frac{-b + \sqrt{ b^2 -4ac}}{2a}$ and $x_2=\frac{-b - \sqrt{b^2-4ac}}{2a}$.

Note that if $b^2-4ac < 0$, the solutions involve taking the square root of a negative number. In those cases, we say that no real solutions exist.

Third

The solutions to the cubic polynomial equation \[ P_3(x) = x^3 + ax^2 + bx + c = 0, \] are given by \[ x_1 = \sqrt[3]{ q + \sqrt{p} } \ \ + \ \sqrt[3]{ q - \sqrt{p} } \ -\ \frac{a}{3}, \] and \[ x_{2,3} = \left( \frac{ -1 \pm \sqrt{3}i }{2} \right)\sqrt[3]{ q + \sqrt{p} } \ \ + \ \left( \frac{ -1 \mp \sqrt{3}i }{2} \right) \sqrt[3]{ q - \sqrt{p} } \ - \ \frac{a}{3}, \] where $q \equiv \frac{-a^3}{27}+ \frac{ab}{6} - \frac{c}{2}$ and $p \equiv q^2 + \left(\frac{b}{3}-\frac{a^2}{9}\right)^3$, and the two cube roots are chosen so that their product equals $\frac{a^2}{9}-\frac{b}{3}$.

Note that, in my entire career as an engineer, physicist and computer scientist, I have never used the cubic equation to solve a problem by hand. In math homework problems and exams you will not be asked to solve equations of higher than second degree, so don't bother memorizing the solutions of the cubic equation. I included the formula here just for completeness.
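If you are curious to see the cubic formula in action without doing the arithmetic by hand, here is a sketch using Python's standard cmath module for complex numbers. It uses the $q$ and $p$ defined above. The other two roots pair $\frac{-1+\sqrt{3}i}{2}$ with one cube root and $\frac{-1-\sqrt{3}i}{2}$ with the other. One detail is worth flagging: taking the two principal cube roots independently can pick an inconsistent pair. So this sketch computes the first cube root u and then sets v = -r/u, where r $= \frac{b}{3}-\frac{a^2}{9}$. This is the standard Cardano bookkeeping; the names u, v, r and cubic_roots are introduced only for the sketch.

```python
import cmath

def cubic_roots(a, b, c):
    """Roots of x^3 + a x^2 + b x + c = 0 via the cubic formula (Cardano)."""
    q = -a**3 / 27 + a * b / 6 - c / 2
    r = b / 3 - a**2 / 9
    p = q**2 + r**3
    sqrt_p = cmath.sqrt(p)               # complex if p < 0
    s = q + sqrt_p
    if abs(s) < 1e-14:                   # avoid dividing by zero below
        s = q - sqrt_p
    u = s ** (1 / 3) if s != 0 else 0    # principal complex cube root
    v = -r / u if u != 0 else 0          # makes u*v = -r, so v^3 = q - sqrt(p)
    omega = complex(-1, 3**0.5) / 2      # (-1 + sqrt(3) i) / 2
    omega_bar = omega.conjugate()        # (-1 - sqrt(3) i) / 2
    shift = a / 3
    x1 = u + v - shift
    x2 = omega * u + omega_bar * v - shift
    x3 = omega_bar * u + omega * v - shift
    return x1, x2, x3

# x^3 - 6x^2 + 11x - 6 = (x - 1)(x - 2)(x - 3)
for z in cubic_roots(-6, 11, -6):
    print(z)
```

Even when all three roots are real, the intermediate values are complex. The printed roots are complex numbers whose imaginary parts are zero up to rounding.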

Higher degrees

There is also a formula for polynomials of degree $4$, but it is complicated. For polynomials of degree $5$ or higher, there is no general formula that expresses the solutions using only arithmetic operations and roots (radicals).
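No general formula does not mean no answer. A computer can still approximate a real root numerically. One of the simplest methods is bisection, which is not covered in this section and is swapped in here purely for illustration. Given two points where the polynomial has opposite signs, it repeatedly halves the interval that contains the sign change. The example polynomial $x^5-x-1$ and the function name bisect_root are hypothetical choices.

```python
def bisect_root(f, lo, hi, tol=1e-12):
    """Approximate a root of f in [lo, hi] by repeated halving.

    Assumes f is continuous and f(lo), f(hi) have opposite signs.
    """
    f_lo = f(lo)
    if f_lo * f(hi) > 0:
        raise ValueError("f(lo) and f(hi) must have opposite signs")
    while hi - lo > tol:
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if f_lo * f_mid <= 0:
            hi = mid                # the sign change is in the left half
        else:
            lo, f_lo = mid, f_mid   # the sign change is in the right half
    return (lo + hi) / 2

root = bisect_root(lambda x: x**5 - x - 1, 1, 2)  # f(1) = -1 < 0 < 29 = f(2)
print(root)  # approximately 1.1673
```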

Using a computer

When solving real-world problems, you will often run into much more complicated equations. For anything more complicated than the quadratic equation, I recommend that you use a computer algebra system like sympy to find the solutions.

Go to http://live.sympy.org, type in the following, and press Shift+Enter:

```python
>>> solve(x**2 - 3*x + 2, x)
[1, 2]
```

Indeed $x^2-3x+2=(x-1)(x-2)$ so $x=1$ and $x=2$ are the two solutions.

Substitution trick

Sometimes you can solve fourth-degree polynomial equations by using the quadratic formula. Say you are asked to solve for $x$ in \[ g(x) = x^4 - 3x^2 -10 = 0. \] Imagine this comes up on your exam. You can't just type it into a computer, since you are not allowed to use one, yet the teacher expects you to solve it. The trick is to substitute $y=x^2$ and rewrite the same equation as \[ g(y) = y^2 - 3y -10 = 0, \] which you can now solve with the quadratic formula. If you obtain the solutions $y=\alpha$ and $y=\beta$, then the solutions to the original fourth-degree equation are $x=\pm\sqrt{\alpha}$ and $x=\pm\sqrt{\beta}$, since $y=x^2$.

Of course, I am not on an exam, so I am allowed to use a computer:

```python
>>> solve(y**2 - 3*y - 10, y)
[-2, 5]
>>> solve(x**4 - 3*x**2 - 10, x)
[-sqrt(5), sqrt(5), -sqrt(2)*I, sqrt(2)*I]
```

Note how a second-degree polynomial has two roots and a fourth-degree polynomial has four roots, two of which are imaginary, since we had to take the square root of a negative number to obtain them. We write $i=\sqrt{-1}$ (sympy prints it as I). If this were asked on an exam, though, you should probably just report the two real solutions, $\sqrt{5}$ and $-\sqrt{5}$, and not talk about the imaginary solutions, since you are not supposed to know about them yet. If you feel impatient and want to know about the complex numbers right now, you can skip ahead to the section on complex numbers.
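The substitution trick can also be sketched without sympy. First solve the quadratic in $y=x^2$, then take both square roots of each $y$. Python's standard cmath.sqrt returns an imaginary result when $y$ is negative, which produces the two imaginary solutions mentioned above. The function name solve_biquadratic is hypothetical.

```python
import cmath

def solve_biquadratic(a, b, c):
    """All four solutions of a*x^4 + b*x^2 + c = 0 using the substitution y = x^2."""
    disc = cmath.sqrt(b * b - 4 * a * c)
    ys = [(-b + disc) / (2 * a), (-b - disc) / (2 * a)]  # quadratic formula in y
    xs = []
    for y in ys:
        r = cmath.sqrt(y)     # imaginary when y < 0
        xs.extend([r, -r])    # x = +sqrt(y) and x = -sqrt(y)
    return xs

for x in solve_biquadratic(1, -3, -10):
    print(x)
```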

Logarithms

The word “logarithm” makes most people think about some mythical mathematical beast. Surely logarithms are many-headed, breathe fire and are extremely difficult to understand. Nonsense! Logarithms are simple. It will take you at most a couple of pages to get used to manipulating them, and that is a good thing because logarithms are used all over the place.

For example, the strength of your sound system is measured in logarithmic units called decibels $[\textrm{dB}]$. This is because your ear perceives loudness roughly in terms of ratios of sound intensity rather than differences. Logarithms allow us to compare very large numbers and very small numbers on the same scale. If we were measuring sound in linear units instead of logarithmic units, then your sound system volume control would have to go from $1$ to $1048576$. That would be weird, no? This is why we use the logarithmic scale for the volume notches. Using a logarithmic scale, we can go from sound intensity level $1$ to sound intensity level $1048576$ in 20 “progressive” steps. Assume each notch doubles the sound intensity instead of increasing it by a fixed amount. The first notch corresponds to $2$, the second notch is $4$ (still probably inaudible), but by the time you get to the sixth notch you are at $2^6=64$ sound intensity (audible music). The tenth notch corresponds to sound intensity $2^{10}=1024$ (medium-strength sound), and finally the twentieth notch will be max power $2^{20}=1048576$ (at this point the neighbours will come knocking to complain).
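Under the assumption from the paragraph above, that each notch doubles the intensity, the notch-to-intensity map is $2^n$. Going back from an intensity to a notch number is its inverse, $\log_2$. The function names are hypothetical.

```python
import math

def notch_intensity(n):
    """Sound intensity at notch n, assuming each notch doubles the intensity."""
    return 2 ** n

def notches_needed(intensity):
    """Inverse map: how many doubling notches reach this intensity (log base 2)."""
    return math.log2(intensity)

for n in (1, 2, 6, 10, 20):
    print(n, notch_intensity(n))
print(notches_needed(1048576))  # 20.0
```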

Definitions

You are probably familiar with these concepts already:

- $b^x$: the exponential function base $b$

- $\exp(x)=e^x$: the exponential function base $e$, Euler's number

- $2^x$: exponential function base $2$

- $f(x)$: the notion of a function $f:\mathbb{R}\to\mathbb{R}$

- $f^{-1}(x)$: the inverse function of $f(x)$. It is defined in terms of $f(x)$ such that the following holds: $f^{-1}(f(x))=x$, i.e., if you apply $f$ to some number $x$ and get the output $y$, and then you pass $y$ through $f^{-1}$, the output will be $x$ again. The inverse function $f^{-1}$ undoes the effects of the function $f$.

In this section we will play with the following new concepts:

- $\log_b(x)$: logarithm of $x$ base $b$. This is the inverse function of $b^x$

- $\ln(x)$: the “natural” logarithm base $e$. This is the inverse of $e^x$

- $\log_2(x)$: the logarithm base $2$ is the inverse of $2^x$

I say play, because there is nothing much new to learn here: logarithms are just a clever way to talk about the size of a number, i.e., how many digits the number has.

Formulas

The main thing to realize is that $\log$s don't really exist on their own. They are defined as the inverses of the corresponding exponential function. The following statements are equivalent: \[ \log_b(x)=m \ \ \ \ \ \Leftrightarrow \ \ \ \ \ b^m=x. \]

For logarithms with base $e$ one writes $\ln(x)$, from the French “logarithme naturel”, because $e$ is the “natural” base. Another special base is $10$, because we use the decimal system for our numbers. $\log_{10}(x)$ tells you roughly the size of the number $x$, i.e., how many digits the number has.

Example

When someone working for the system (say someone with a high-paying job in the financial sector) boasts about his or her “six-figure” salary, they are really talking about the $\log$ of how much money they make. The “number of figures” $N_S$ in your salary is calculated as one plus the logarithm base ten of your salary $S$. The formula is \[ N_S = 1 + \log_{10}(S). \] So a salary of $S=100\:000$ corresponds to $N_S=1+\log_{10}(100\:000)=1+5=6$ figures. What will be the smallest “seven-figure” salary? We have to solve for $S$ given $N_S=7$ in the formula. We get $7 = 1+\log_{10}(S)$, which means that $6=\log_{10}(S)$, and using the inverse relationship between logarithm base ten and exponentiation base ten we find that $S=10^6 = 1\:000\:000$. One million per year. Yes, for this kind of money I see how someone might want to work for the system. But I don't think most system pawns ever make it to the seven-figure level. Even at the higher ranks, the salaries are more in the $1+\log_{10}(250\:000) = 1+5.397=6.397$ digits range. There you have it. Some of the smartest people out there selling their brains out to the finance sector for some lousy $0.397$ extra digits. What wankers! And who said you need to have a six-digit salary in the first place? Why not make $1+\log_{10}(44\:000)=5.64$ digits as a teacher and do something with your life that actually matters?
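The salary calculations can be reproduced with math.log10. The text's formula $N_S = 1+\log_{10}(S)$ gives a fractional "number of figures". The digit count you would get by writing the number out is its integer part, which the sketch computes separately. All function names are hypothetical.

```python
import math

def figures(salary):
    """The text's 'number of figures': N_S = 1 + log10(S)."""
    return 1 + math.log10(salary)

def digit_count(salary):
    """Number of digits when the (positive) salary is written out: integer part."""
    return math.floor(figures(salary))

def smallest_salary_with(n_figures):
    """Invert N = 1 + log10(S) using exponentiation base ten: S = 10**(N - 1)."""
    return 10 ** (n_figures - 1)

print(figures(100_000))            # 6.0
print(smallest_salary_with(7))     # 1000000
print(round(figures(44_000), 2))   # 5.64
print(digit_count(250_000))        # 6
```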

Properties

Let us now discuss two important properties that you will need to use when dealing with logarithms. Pay attention, because the arithmetic rules for logarithms are very different from the usual rules for numbers. Intuitively, you can think of logarithms as a convenient way of referring to the exponents of numbers. The following properties are the logarithmic analogues of the properties of exponents.

Property 1

The first property states that the sum of two logarithms is equal to the logarithm of the product of the arguments: \[ \log(x)+\log(y)=\log(xy). \] From this property, we can derive two other useful ones: \[ \log(x^k)=k\log(x), \] and \[ \log(x)-\log(y)=\log\left(\frac{x}{y}\right). \]

Proof: For all three equations above we have to show that the expression on the left is equal to the expression on the right. We have only been acquainted with logarithms for a very short time, so we don't know each other that well. In fact, the only thing we know about $\log$s is the inverse relationship with the exponential function. So the only way to prove this property is to use this relationship.

The following statement is true for any base $b$: \[ b^m b^n = b^{m+n}, \] which follows from first principles. For whole-number exponents, exponentiation means multiplying the base by itself many times: $b^m$ is a product of $m$ copies of $b$. If you count the total number of $b$s on the left side, you will see that there is a total of $m+n$ of them, which is what we have on the right.

If you define some new variables $x$ and $y$ such that $b^m=x$ and $b^n=y$ then the above equation will read \[ xy = b^{m+n}, \] if you take the logarithm of both sides you get \[ \log_b(xy) = \log_b\left( b^{m+n} \right) = m + n = \log_b(x) + \log_b(y). \] In the last step we used the definition of the $\log$ function again which states that $b^m=x \ \ \Leftrightarrow \ \ m=\log_b(x)$ and $b^n=y \ \ \Leftrightarrow \ \ n=\log_b(y)$.
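A proof is what settles the question, but it is still reassuring to check the three logarithm rules numerically. The values x, y, k and the base b below are arbitrary choices; any positive x, y and any base b > 0 with b ≠ 1 would do.

```python
import math

x, y, k = 8.0, 2.5, 3   # arbitrary positive test values
b = 2                   # any base b > 0, b != 1

def log_b(v):
    return math.log(v, b)

print(log_b(x) + log_b(y), log_b(x * y))   # sum of logs = log of product
print(k * log_b(x), log_b(x ** k))         # log(x^k) = k log(x)
print(log_b(x) - log_b(y), log_b(x / y))   # difference of logs = log of quotient
```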

Property 2

We will now discuss the rule for changing from one base to another. Is there a relation between $\log_{10}(S)$ and $\log_2(S)$?

There is. We can express the logarithm in any base $B$ in terms of a ratio of logarithms in another base $b$. The general formula is: \[ \log_{B}(x) = \frac{\log_b(x)}{\log_b(B)}. \]

This means that: \[ \log_{10}(S) =\frac{\log_{10}(S)}{1} =\frac{\log_{10}(S)}{\log_{10}(10)} = \frac{\log_{2}(S)}{\log_{2}(10)}=\frac{\ln(S)}{\ln(10)}. \]

This property is useful when you want to compute $\log_{7}$ but your calculator only gives you $\log_{10}$. You can simulate $\log_7(x)$ by computing $\log_{10}(x)$ and dividing by $\log_{10}(7)$.
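Here is the "calculator with only $\log_{10}$" trick written out as a short Python sketch. The function name `log_via_log10` is a hypothetical helper; it relies only on the standard library's `math.log10`, and the result matches the ratio of natural logarithms up to rounding.

```python
import math


def log_via_log10(x, B):
    """Compute log_B(x) using only log10, via log_B(x) = log10(x) / log10(B)."""
    return math.log10(x) / math.log10(B)


print(log_via_log10(343, 7))        # approximately 3, since 7**3 = 343
print(math.log(343) / math.log(7))  # the same ratio computed with natural logs
```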

Geometry

Triangles

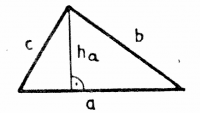

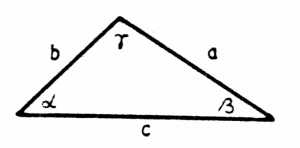

The area of a triangle is equal to $\frac{1}{2}$ times the length of the base times the height: \[ A = \frac{1}{2} a h_a. \] Note that $h_a$ is the height of the triangle relative to the side $a$.


The perimeter of the triangle is: \[ P = a + b + c. \]
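As a quick arithmetic check, the sketch below applies both formulas to a right triangle with legs 3 and 4 and hypotenuse 5 (an example chosen for illustration). In a right triangle one leg is the height relative to the other leg, so we can take $a=3$ and $h_a=4$. The function names are hypothetical.

```python
def triangle_area(a, h_a):
    """Area from a side a and the height h_a relative to that side."""
    return 0.5 * a * h_a


def triangle_perimeter(a, b, c):
    """Sum of the three side lengths."""
    return a + b + c


print(triangle_area(3, 4))          # 6.0
print(triangle_perimeter(3, 4, 5))  # 12
```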

Consider now a triangle with internal angles $\alpha$, $\beta$ and $\gamma$. The sum of the inner angles in any triangle is equal to two right angles: $\alpha+\beta+\gamma=180^\circ$.

The sine law is: \[ \frac{a}{\sin(\alpha)}=\frac{b}{\sin(\beta)}=\frac{c}{\sin(\gamma)}, \] where $\alpha$ is the angle opposite to $a$, $\beta$ is the angle opposite to $b$ and $\gamma$ is the angle opposite to $c$.


The cosine rules are: \[ \begin{aligned} a^2 & =b^2+c^2-2bc\cos(\alpha), \\ b^2 & =a^2+c^2-2ac\cos(\beta), \\ c^2 & =a^2+b^2-2ab\cos(\gamma). \end{aligned} \]
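The cosine rules let us recover all three angles when only the side lengths are known. The Python sketch below solves each rule for the cosine of its angle, then checks that the angles add up to $180^\circ$ and that the three sine-law ratios agree. It assumes the three lengths actually form a triangle; otherwise `math.acos` receives a value outside $[-1,1]$ and raises `ValueError`. The function names are hypothetical.

```python
import math


def angles_from_sides(a, b, c):
    """Angles (alpha, beta, gamma) in radians opposite to sides a, b, c,
    found by solving each cosine rule for the cosine of the angle."""
    alpha = math.acos((b**2 + c**2 - a**2) / (2 * b * c))
    beta = math.acos((a**2 + c**2 - b**2) / (2 * a * c))
    gamma = math.acos((a**2 + b**2 - c**2) / (2 * a * b))
    return alpha, beta, gamma


def sine_law_ratios(a, b, c):
    """The three ratios a/sin(alpha), b/sin(beta), c/sin(gamma)."""
    alpha, beta, gamma = angles_from_sides(a, b, c)
    return a / math.sin(alpha), b / math.sin(beta), c / math.sin(gamma)


angles_deg = [math.degrees(t) for t in angles_from_sides(3, 4, 5)]
print(angles_deg)                # about [36.87, 53.13, 90.0]
print(sum(angles_deg))           # about 180.0
print(sine_law_ratios(3, 4, 5))  # all three about 5.0
```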

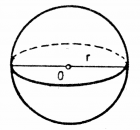

Sphere

A sphere is described by the equation \[ x^2 + y^2 + z^2 = r^2. \]

Surface area: \[ A = 4\pi r^2. \]

Volume: \[ V = \frac{4}{3}\pi r^3. \]
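The sphere formulas translate directly into code. In this Python sketch the radius $r=2$ and the test points are arbitrary, and `on_sphere` (a hypothetical helper) checks the equation $x^2+y^2+z^2=r^2$ up to a small rounding tolerance.

```python
import math


def sphere_area(r):
    """Surface area 4*pi*r^2."""
    return 4 * math.pi * r**2


def sphere_volume(r):
    """Volume (4/3)*pi*r^3."""
    return 4 / 3 * math.pi * r**3


def on_sphere(x, y, z, r, tol=1e-9):
    """True if (x, y, z) satisfies x^2 + y^2 + z^2 = r^2 (up to rounding)."""
    return abs(x**2 + y**2 + z**2 - r**2) <= tol


r = 2.0
print(sphere_area(r))    # 16*pi, about 50.27
print(sphere_volume(r))  # 32*pi/3, about 33.51
print(on_sphere(0.0, 0.0, 2.0, r), on_sphere(1.0, 1.0, 1.0, r))  # True False
```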

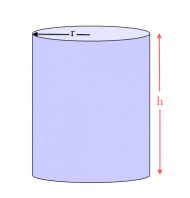

Cylinder

The surface area of a cylinder consists of the top and bottom circular surfaces plus the area of the side of the cylinder: \[ A = 2 \left( \pi r^2 \right) + (2\pi r) h. \]

The volume is given by the product of the area of the base and the height of the cylinder: \[ V = \left(\pi r^2 \right)h. \]

Example

You open the hood of your car and see 2.0L written on top of the engine. The 2[L] refers to the engine's displacement: the total volume swept by the four pistons as they move inside their cylindrical chambers. You look in the owner's manual and find out that the diameter of each cylinder (bore) is 87.5[mm] and the distance travelled by each piston (stroke) is 83.1[mm]. Verify that the total displacement of your engine is indeed 1998789[mm$^3$] $\approx 2$[L].
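The verification is a direct application of $V = \left(\pi r^2\right)h$ with $r$ equal to half the bore and $h$ equal to the stroke, multiplied by the four cylinders. A minimal Python sketch (the helper name `cylinder_volume` is hypothetical) is shown below; the conversion uses $1$[L] $= 10^6$[mm$^3$].

```python
import math


def cylinder_volume(diameter, height):
    """Volume of a cylinder: area of the circular base times the height."""
    r = diameter / 2
    return math.pi * r**2 * height


bore_mm = 87.5     # diameter of each cylinder
stroke_mm = 83.1   # distance travelled by each piston
n_cylinders = 4

total_mm3 = n_cylinders * cylinder_volume(bore_mm, stroke_mm)
print(round(total_mm3))  # 1998789
print(total_mm3 / 1e6)   # in litres, about 1.9988
```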

Links

A formula for calculating the distance between two points on a sphere: http://www.movable-type.co.uk/scripts/latlong.html

Definitions

Calculus is the study of functions $f(x)$ over the real numbers $\mathbb{R}$: \[ f: \mathbb{R} \to \mathbb{R}. \] The function $f$ takes as input some number, usually called $x$ and gives as output another number $f(x)=y$. You are familiar with many functions and have used them in many problems.

In this chapter we will learn about different operations that can be performed on functions. It is worth understanding these operations because of their numerous applications.

Differential calculus

Differential calculus is all about derivatives:

- $f'(x)$: the derivative of $f(x)$ is the rate of change of $f$ at $x$. The derivative is also a function of the form \[ f': \mathbb{R} \to \mathbb{R}. \] The output of $f'(x)$ represents the slope of the line tangent to $f$ at the point $(x,f(x))$.
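To make "rate of change" concrete, the slope of the tangent line can be approximated by the slope of a line through two nearby points on the graph. The Python sketch below uses the central difference $\frac{f(x+h)-f(x-h)}{2h}$ with a small but finite step $h$. This is a numerical stand-in for the exact derivative, not the definition itself; the step size $h=10^{-5}$ and the name `derivative` are arbitrary choices made for illustration.

```python
import math


def derivative(f, x, h=1e-5):
    """Approximate f'(x) with the central difference (f(x+h) - f(x-h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2 * h)


# The slope of the tangent to f(x) = x**2 at x = 3 is f'(3) = 2*3 = 6.
print(derivative(lambda x: x**2, 3.0))  # approximately 6
print(derivative(math.sin, 0.0))        # approximately cos(0) = 1
```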

Integral calculus

Integral calculus is all about integration:

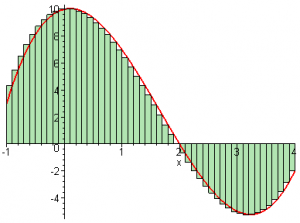

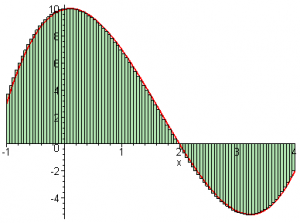

- $\int_a^b f(x)\:dx$: the integral of $f(x)$ from $x=a$ to $x=b$ corresponds to the area under $f(x)$ between $a$ and $b$: \[ A(a,b) = \int_a^b f(x) \: dx. \] The $\int$ sign is a mnemonic for sum: the integral is the "sum" of $f(x)$ over that interval.

- $F(x)=\int f(x)\:dx$: the anti-derivative of the function $f(x)$ contains the information about the area under the curve for all limits of integration. The area under $f(x)$ between $a$ and $b$ is computed as the difference between $F(b)$ and $F(a)$: \[ A(a,b) = \int_a^b f(x)\;dx = F(b)-F(a). \]
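The "sum" reading of the integral can be tried out directly. The Python sketch below approximates the area under $f(x)=x^2$ on $[0,3]$ by adding up the areas of $n$ thin rectangles (a midpoint Riemann sum, chosen here for illustration) and compares the result with $F(3)-F(0)$ for the anti-derivative $F(x)=\frac{x^3}{3}$. The example function, the interval and $n=1000$ are arbitrary choices.

```python
def riemann_sum(f, a, b, n=1000):
    """Approximate the area under f between a and b with n rectangles,
    each evaluated at the midpoint of its subinterval."""
    dx = (b - a) / n
    return sum(f(a + (i + 0.5) * dx) for i in range(n)) * dx


def f(x):
    return x**2


def F(x):
    """An anti-derivative of f."""
    return x**3 / 3


print(riemann_sum(f, 0.0, 3.0))  # approximately 9
print(F(3.0) - F(0.0))           # 9.0
```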

Sequences and series

Functions are usually defined for continuous inputs $x\in \mathbb{R}$, but there are also functions which are defined only for natural numbers $n \in \mathbb{N}$. Sequences are the discrete analogue of functions.

- $a_n$: sequence of numbers $\{ a_0, a_1, a_2, a_3, a_4, \ldots \}$. You can think about each sequence as a function \[ a: \mathbb{N} \to \mathbb{R}, \] where the input $n$ is a natural number (the index into the sequence) and the output is $a_n$, which could be any number.

The "integral" of a sequence is called a series.

- $\sum$: sum. The summation sign is the short way to express the sum of several objects: \[ a_3 + a_4 + a_5 + a_6 + a_7 \equiv \sum_{3 \leq i \leq 7} a_i \equiv \sum_{i=3}^{7} a_i. \] Note that summations can also go up to infinity.

- $\sum a_i$: the series corresponds to the running total of a sequence up to $n$: \[ S_n = \sum_{i=1}^{n} a_i = a_1 + a_2 + \cdots + a_{n-1} + a_n. \]

- $f(x)=\sum_{i=0}^\infty a_i x^i$: a power series is a series which contains powers of some variable $x$. Power series give us a way to express many functions $f(x)$ as infinitely long polynomials. For example, the power series of $\sin(x)$ is \[ \sin(x) = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \frac{x^7}{7!} + \frac{x^9}{9!}+ \ldots. \]
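The objects in the list above can be sketched in a few lines of Python. Here a sequence is represented as a function of its index, `partial_sum` computes the running total $S_n$, and `sin_series` adds up the first few terms of the power series of $\sin(x)$. The names, the example sequences and the number of terms are hypothetical choices for illustration; the power series is truncated, so it only approximates $\sin(x)$, although ten terms already match `math.sin` to double precision near $x=1$.

```python
import math


def partial_sum(a, n):
    """S_n = a(1) + a(2) + ... + a(n) for a sequence given as a function a(i)."""
    return sum(a(i) for i in range(1, n + 1))


def sin_series(x, n_terms=10):
    """Partial sum of the power series x - x^3/3! + x^5/5! - x^7/7! + ..."""
    return sum((-1)**k * x**(2 * k + 1) / math.factorial(2 * k + 1)
               for k in range(n_terms))


# For the sequence a_i = i, the sum from i=3 to i=7 is 3+4+5+6+7 = 25.
print(sum(range(3, 8)))                   # 25
print(partial_sum(lambda i: i, 10))       # 55
print(partial_sum(lambda i: 0.5**i, 20))  # 1 - 2**-20, just below 1
print(sin_series(1.0), math.sin(1.0))     # agree to many decimal places
```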

Don't worry if you don't understand all the notions and the new notation in the above paragraphs. I just wanted to present all the calculus actors in the first scene. We will talk about each of them in more detail in the following sections.

Limits

Actually, we have not mentioned the main actor yet: the limit. In calculus, we do a lot of limit arguments in which we take some positive number $\epsilon>0$ and we make it progressively smaller and smaller:

- $\displaystyle\lim_{\epsilon \to 0}$: the mathematically rigorous way of saying that the number $\epsilon$ becomes smaller and smaller.

We can also take limits to infinity, that is, we imagine some number $N$ and we make that number bigger and bigger:

- $\displaystyle\lim_{N \to \infty}$: the mathematical way of saying that the number $N$ will get larger and larger.

Indeed, it wouldn't be wrong to say that calculus is the study of the infinitely small and the infinitely many. Working with infinitely small quantities and infinitely large numbers can be tricky business, but it is extremely important that you become comfortable with the concept of a limit, which is the rigorous way of talking about infinity. Before we learn about derivatives, integrals and series, we will spend some time learning about limits.

Limits

To understand the ideas behind derivatives and integrals, you need to understand what a limit is and how to deal with the infinitely small, the infinitely large and the infinitely many. In practice, using calculus doesn't actually involve taking limits, since we will learn direct formulas and algebraic rules that are more convenient than computing limits. Do not skip this section just because it is “not on the exam”, though. If you do, you will not know what I mean when I write things like $0$, $\infty$ and $\lim$ in later sections.

Introduction in three acts

Zeno's paradox

The ancient Greek philosopher Zeno once came up with the following argument. Suppose an archer shoots an arrow and sends it flying towards a target. After some time it will have travelled half the distance, and then at some later time it will have travelled half of the remaining distance, and so on, always getting closer to the target. Zeno observed that no matter how little distance remains to the target, there will always be some later instant when the arrow will have travelled half of that distance. Thus, he reasoned, the arrow must keep getting closer and closer to the target, but never reaches it.

Zeno, my brothers and sisters, was making some sort of limit argument, but he didn't do it right. We have to commend him for thinking about such things centuries before calculus was invented (17th century), but we shouldn't repeat his mistake. We had better learn how to take limits, because limits are important. I mean, a wrong argument about limits could get you killed, for God's sake! Imagine if Zeno had tried to verify his theory about the arrow experimentally by placing himself in front of one such arrow!

Two monks

Two young monks were sitting in silence in a Zen garden one autumn afternoon.

“Can something be so small as to become nothing?” asked one of the monks, breaking the silence.

“No,” replied the second monk, “if it is something then it is not nothing.”

“Yes, but what if no matter how close you look you cannot see it, yet you know it is not nothing?”, asked the first monk, desiring to see his reasoning to the end.

The second monk didn't know what to say, but then he found a counterargument. “What if, though I cannot see it with my naked eye, I could see it using a magnifying glass?”

The first monk was happy to hear this question, because he had already prepared a response for it. “If I know that you will be looking with a magnifying glass, then I will make it so small that you cannot see it with your magnifying glass.”

“What if I use a microscope then?”

“I can make the thing so small that even with a microscope you cannot see it.”

“What about an electron microscope?”

“Even then, I can make it smaller, yet still not zero,” said the first monk victoriously, and then proceeded to add, “In fact, for any magnifying device you can come up with, you just tell me the resolution and I can make the thing smaller than can be seen.”

They went back to concentrating on their breathing.

Epsilon and delta

The monks had the right reasoning but didn't have the right language to express what they meant. Zeno had the right language, the wonderful Greek language with letters like $\epsilon$ and $\delta$, but he didn't have the right reasoning. We need to combine aspects of both of the above stories to understand limits.

Let's first analyze Zeno's paradox. The poor brother didn't know about physics and the uniform velocity equation of motion. If an object is moving with constant speed $v$ (we ignore the effects of air friction on the arrow), then its position $x$ as a function of time is given by \[ x(t) = vt+x_i, \] where $x_i$ is the initial location of the object at $t=0$. Suppose that the archer who fired the arrow was at the origin $x_i=0$ and that the target is at $x=L$ metres. The arrow will hit the target exactly at $t=L/v$ seconds. Shlook!

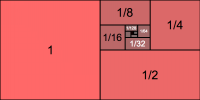

It is true that there are times when the arrow will be $\frac{1}{2}$, $\frac{1}{4}$, $\frac{1}{8}$, $\frac{1}{16}$, and so forth, of the original distance away from the target. In fact, there are infinitely many of those fractional time instants before the arrow hits, but that is beside the point. Zeno's misconception was that he thought these infinitely many instants couldn't all fit in the timeline since it is finite. No such problem exists, though. Any non-zero interval on the number line contains infinitely many numbers, whether we consider the rational numbers $\mathbb{Q}$ or the real numbers $\mathbb{R}$.
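We can list Zeno's instants explicitly. With $x(t)=vt$, the remaining distance $L-vt$ equals $\frac{L}{2^k}$ exactly when $t_k=\frac{L}{v}\left(1-\frac{1}{2^k}\right)$, and every one of these times is strictly smaller than the impact time $\frac{L}{v}$. The Python sketch below computes the first 40 instants for hypothetical values $L=100$[m] and $v=50$[m/s], and confirms that they all fit before the arrow hits at $t=2$[s].

```python
def zeno_times(L, v, n):
    """Times at which the remaining distance to the target is L/2, L/4, ..., L/2**n,
    for an arrow starting at x = 0 and moving with constant speed v."""
    return [(L / v) * (1 - 0.5**k) for k in range(1, n + 1)]


L, v = 100.0, 50.0  # hypothetical: target 100 m away, arrow speed 50 m/s
t_hit = L / v       # 2.0 seconds
times = zeno_times(L, v, 40)
print(times[:4])                      # [1.0, 1.5, 1.75, 1.875]
print(all(t < t_hit for t in times))  # True: all 40 instants come before t_hit
```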

Now let's get to the monks' conversation. The first monk was talking about the function $f(x)=\frac{1}{x}$. As $x$ grows, this function becomes smaller and smaller, but it never actually becomes zero: \[ \frac{1}{x} \neq 0, \textrm{ even for very large values of } x, \] which is what the monk told us.

Remember that the monk also claimed that the function $f(x)$ can be made arbitrarily small. He wants to show that, in the limit of large values of $x$, the function $f(x)$ goes to zero. Written in math this becomes \[ \lim_{x\to \infty}\frac{1}{x}=0. \]

To convince the second monk that he can really make $f(x)$ arbitrarily small, he invents the following game. The second monk announces a precision $\epsilon$ at which he will be convinced. The first monk then has to choose an $S_\epsilon$ such that for all $x > S_\epsilon$ we will have \[ \left| \frac{1}{x} - 0 \right| < \epsilon. \] The above expression indicates that $\frac{1}{x}\approx 0$ at least up to a precision of $\epsilon$. For example, the first monk can always choose $S_\epsilon = \frac{1}{\epsilon}$, since $x > \frac{1}{\epsilon}$ implies $0 < \frac{1}{x} < \epsilon$.

The second monk will have no choice but to agree that indeed $\frac{1}{x}$ goes to 0, since the argument can be repeated for any required precision $\epsilon >0$. By showing that the function $f(x)$ approaches $0$ arbitrarily closely for large values of $x$, we have proven that $\lim_{x\to \infty}f(x)=0$.
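The monks' game can be played in code. In the Python sketch below, the first monk answers each precision $\epsilon$ with the starting point $S_\epsilon=\frac{1}{\epsilon}$ (an assumed strategy: any larger value would also work), and we then check $\left|\frac{1}{x}-0\right|<\epsilon$ at sample points beyond $S_\epsilon$. A program can only test finitely many points; it is the inequality $x>\frac{1}{\epsilon} \Rightarrow \frac{1}{x}<\epsilon$ that covers all of them. The function names are hypothetical.

```python
def choose_start(eps):
    """First monk's move (assumed strategy) for f(x) = 1/x: take S = 1/eps."""
    return 1.0 / eps


def first_monk_wins(eps, n_checks=1000):
    """Check |1/x - 0| < eps at n_checks sample points x beyond S_eps.
    This tests finitely many points only; it is an illustration, not a proof."""
    s = choose_start(eps)
    sample_xs = (s * (1 + k) for k in range(1, n_checks + 1))
    return all(abs(1 / x - 0) < eps for x in sample_xs)


for eps in (0.1, 1e-3, 1e-9):
    print(eps, choose_start(eps), first_monk_wins(eps))
```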

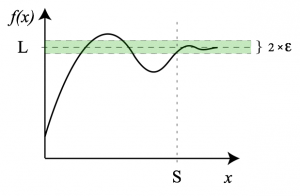

More generally, the function $f(x)$ can converge to any number $L$ as $x$ takes on larger and larger values: \[ \lim_{x \to \infty} f(x) = L. \]

The above expression means that, for any precision $\epsilon>0$, there exists a starting point $S_\epsilon$ after which $f(x)$ equals its limit $L$ to within $\epsilon$ precision: \[ \left|f(x) - L\right| <\epsilon, \qquad \forall x \geq S_\epsilon. \]


Example

You are asked to calculate $\lim_{x\to \infty} \frac{2x+1}{x}$, that is, you are given the function $f(x)=\frac{2x+1}{x}$ and you have to figure out what the function looks like for very large values of $x$. Note that we can rewrite the function as $\frac{2x+1}{x}=2+\frac{1}{x}$, which makes it easier to see what is going on: \[ \lim_{x\to \infty} \frac{2x+1}{x} = \lim_{x\to \infty}\left( 2 + \frac{1}{x} \right) = 2 + \lim_{x\to \infty}\left( \frac{1}{x} \right) = 2 + 0 = 2, \] since $\frac{1}{x}$ tends to zero for large values of $x$.
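A numerical check agrees with the algebra. Since $f(x)-2=\frac{1}{x}$, the gap between $f(x)$ and the limit $2$ shrinks exactly like $\frac{1}{x}$. The Python sketch below evaluates $f$ at a few large, arbitrarily chosen values of $x$. Evaluating at finitely many points only suggests the limit; the rewriting $2+\frac{1}{x}$ is what proves it.

```python
def f(x):
    return (2 * x + 1) / x


for x in (10, 1_000, 1_000_000):
    # The gap |f(x) - 2| equals 1/x, up to floating-point rounding.
    print(x, f(x), abs(f(x) - 2))
```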