The page you are reading is part of a draft (v2.0) of the "No bullshit guide to math and physics."

The text has since gone through many edits and is now available in print and electronic format. The current edition of the book is v4.0, which is a substantial improvement in terms of content and language (I hired a professional editor) from the draft version.

I'm leaving the old wiki content up for the time being, but I highly encourage you to check out the finished book. You can check out an extended preview here (PDF, 106 pages, 5MB).

<texit info> author=Ivan Savov title=MATH and PHYSICS Funamentals backgroundtext=off </texit>

Long division

When there are large numbers, we need to follow a procedure to divide them.

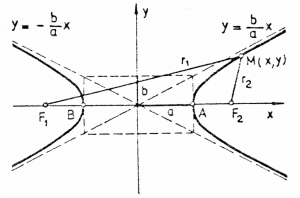

Hyperbolas

No, not the exaggerated figures of speech, but the mathematical shape.

Formulas

Applications

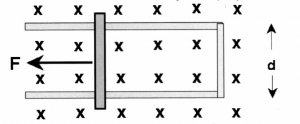

Repulsive collision of two particles.

Links

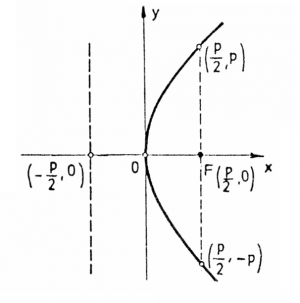

Parabola

Geometry

The shape of the quadratic function is called a parabola and obeys a very important rule. Say we have a sideways parabola: \[ x = f(y) = Ay^2. \]

Imagine rays (show pic!) of light coming from infinity from the right and striking the surface of the parabola. All the rays will be reflected towards the point $F=(\frac{p}{2},0)$ where $p = \frac{1}{2A}$.
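The focusing property can be checked numerically. The sketch below is only an illustration under stated assumptions: the function name `reflect_to_axis` and the sample value $A=0.5$ are made up for this example. It sends a horizontal ray towards the parabola $x=Ay^2$, reflects it using the mirror rule (angle in equals angle out, applied with the surface normal), and reports where the reflected ray crosses the axis $y=0$.

```python
import math

def reflect_to_axis(A, y0):
    """Horizontal ray hits x = A*y^2 at height y0 (y0 != 0).
    Returns the x-coordinate where the reflected ray crosses y = 0."""
    x0 = A * y0**2
    # The curve is the level set x - A*y^2 = 0, so its normal is (1, -2*A*y0)
    nx, ny = 1.0, -2.0 * A * y0
    norm = math.hypot(nx, ny)
    nx, ny = nx / norm, ny / norm
    # Incoming ray travels from the right, i.e. in the -x direction
    dx, dy = -1.0, 0.0
    # Mirror reflection: r = d - 2 (d . n) n
    dot = dx * nx + dy * ny
    rx, ry = dx - 2 * dot * nx, dy - 2 * dot * ny
    # Follow the reflected ray until it reaches the axis y = 0
    t = -y0 / ry
    return x0 + t * rx

A = 0.5                      # assumed sample value, so p = 1/(2A) = 1
for y0 in (0.3, 1.0, 2.5):
    print(y0, reflect_to_axis(A, y0))   # each should be close to p/2 = 0.5
```

Every ray lands on the same point regardless of the height $y_0$ at which it hits the parabola, which is the claim made above.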

( probably need a better drawing.)

Satellite dishes

You put the receiver in the focus point, and you get all the power incident on the dish concentrated right on your receiver.

Base representation

other topics:

- working in base 2 and base 16
- discrete exponentiation
- Hamming distance (& friends)
- modular arithmetic
- primality testing
- basic stats (standard deviation & variance)

Number systems

Decimal system

Binary system

Hexadecimal system

Formulas

Discussion

maybe as a review – sequences/circuits/polynomials ?

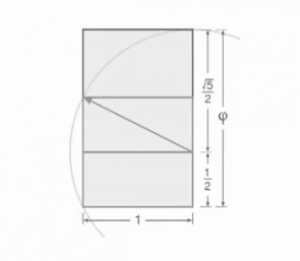

Golden ratio

The golden ratio, usually denoted $\phi\approx 1.6180339887$, is a very important proportion in geometry, art, aesthetics, biology and mysticism. Suppose you have a stick of length $1$m and you want to cut it into two pieces: one from $0$ (the left end) to $x$ and one from $x$ to $1$ (the right end). You have to pick the point $x$ closer to the right end, such that the ratio of the lengths of the short piece and the long piece is the same as the ratio of the long piece and the whole stick. Mathematically, this means: \[ \frac{l_{\text{remaining short}}} {l_{\text{long}}} \equiv \frac{1-x}{x} = \frac{x}{1m} \equiv \frac{l_{\text{long}}}{l_{\text{whole}}}. \]

To see how the quadratic equation comes about, just multiply both sides by $x$ to get: \[ 1-x = x^2, \] which after moving all the terms to one side becomes \[ x^2 +x -1 = 0. \]

Using the quadratic formula we get the two solutions, which are \[ x_1 = \frac{-1+\sqrt{5}}{2} = \frac{1}{\phi} \approx 0.618034, \qquad x_2 = \frac{-1-\sqrt{5}}{2} = -\phi \approx - 1.61803. \] The solution $x_2$ is a negative number, so it cannot be the $x$ we want: we wanted a ratio, i.e., $0 \leq x\leq 1$. The golden ratio then is \[ \begin{align*} \phi &= \frac{1}{x_1} = \frac{2}{\sqrt{5}-1} \nl &= \frac{2}{\sqrt{5}-1}\frac{\sqrt{5}+1}{\sqrt{5}+1} = \frac{2(\sqrt{5}+1)}{5-1} = \frac{\sqrt{5}+1}{2}. \end{align*} \]

Geometry

Trigonometric functions

\[ \cos \left( \frac {\pi} {5} \right) = \cos 36^\circ={\sqrt{5}+1 \over 4} = \frac{\varphi }{2} \]

\[ \sin \left( \frac {\pi} {10} \right) = \sin 18^\circ = {\sqrt{5}-1 \over 4} = {\varphi - 1 \over 2} = {1 \over 2\varphi} \]

Fibonacci connection

The Fibonacci sequence is defined by the recurrence relation $F_n=F_{n-1}+F_{n-2}$ with $F_1=1$ and $F_2=1$.

Binet's formula

The $n$th term in the Fibonacci sequence has a closed-form expression \[ F_n=\frac{\phi^n-(1-\phi)^n}{\sqrt{5}}, \] where $\phi=\frac{1+\sqrt{5}}{2}$ is the golden ratio.

In the limit of large $n$, the $\phi^n$ term will dominate the rate of growth of the Fibonacci sequence, because $|1-\phi|\approx 0.618 < 1$ and so $(1-\phi)^n$ shrinks to zero. Consider the ratio between $F_n$ and $F_{n-1}$, i.e., at what rate is the sequence growing? \[ \frac{F_n}{F_{n-1}} = \frac{\phi^n-(1-\phi)^n}{\phi^{n-1} - (1- \phi)^{n-1} } \to \phi \quad \text{as } n \to \infty. \]
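To see the connection between the Fibonacci numbers and the golden ratio in action, here is a small sketch that compares the recurrence relation with Binet's formula and prints the ratio of consecutive terms. The function names `fib` and `binet` are just labels chosen for this example.

```python
import math

PHI = (1 + math.sqrt(5)) / 2   # the golden ratio

def fib(n):
    """n-th Fibonacci number from the recurrence, with F_1 = F_2 = 1."""
    a, b = 1, 1
    for _ in range(n - 1):
        a, b = b, a + b
    return a

def binet(n):
    """n-th Fibonacci number from Binet's closed-form expression."""
    return (PHI**n - (1 - PHI)**n) / math.sqrt(5)

for n in (5, 10, 20, 30):
    ratio = fib(n) / fib(n - 1)
    print(n, fib(n), round(binet(n)), ratio)
```

The last column gets closer and closer to $\phi\approx 1.618$ as $n$ grows. Note that `binet` uses floating point arithmetic, so for very large $n$ the rounded value will eventually drift away from the exact integer.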

http://en.wikipedia.org/wiki/Fibonacci_number#Closed-form_expression

Five-pointed star

The golden ratio also appears in the ratios of two lengths in the pentagram, or five-pointed star. I am choosing not to discuss this here, because the five-pointed star is a symbol associated with satanism, freemasonry and communism, and I want nothing to do with any of these.

Electronic circuits

Links

[ The golden ratio in web design ]

http://www.pearsonified.com/2011/12/golden-ratio-typography.php

FOR MECHANICS

TODO: leverage = mechanical advantage / force conversion

TODO: Bicycle Gears = torque converters -> need notion of work torque wrench?

TODO: Pulley problems in force diagrams

TODO: toilet paper now I think of Torque

Waves and optics

Polar coordinates

Definitions

Formulas

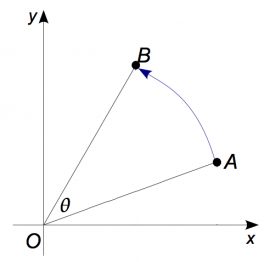

To convert the polar coordinates $r\angle\theta$ to components notation $(x,y)$ use: \[ x=r\cos\theta\qquad\qquad y=r\sin\theta. \]

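To convert from the cartesian coordinates $(x,y)$ to polar coordinates $r\angle\theta$ use \[ r=\sqrt{x^2+y^2}\qquad\qquad \theta=\tan^{-1}\left(\frac{y}{x}\right). \] The formula for $\theta$ as written is valid when $x>0$; for points with $x<0$, add $180^\circ$ to the result.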
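Here is a minimal sketch of the two conversions. The function names are made up for this example. One design choice worth flagging: instead of computing $\tan^{-1}(y/x)$ directly, the code uses Python's `math.atan2(y, x)`, which looks at the signs of both coordinates and therefore returns the correct angle in all four quadrants (and does not divide by zero when $x=0$).

```python
import math

def polar_to_cartesian(r, theta):
    """Convert r∠theta (theta in radians) to components (x, y)."""
    return r * math.cos(theta), r * math.sin(theta)

def cartesian_to_polar(x, y):
    """Convert (x, y) to (r, theta), with theta in radians in (-pi, pi]."""
    return math.hypot(x, y), math.atan2(y, x)

# A point in the second quadrant, where plain atan(y/x) would give the wrong angle
r, theta = cartesian_to_polar(-1.0, 1.0)
print(r, math.degrees(theta))
print(polar_to_cartesian(r, theta))
```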

Explanations

Discussion

Examples

sin review travelling pulses

sound travelling waves standing waves

Optics

Introduction

A camera consists essentially of two parts: a detector and a lens construction. The detector is some surface that can record the light which hits it. Old-school cameras used film, which records light through a chemical reaction in light-sensitive silver salts, whereas modern cameras use electronic photo-detectors.

While the detector is important, that which really makes or breaks a camera is the lens. The lens' job is to take the light reflected off some object (that which you are taking a picture of) and redirect it in an optimal way so that a faithful image forms on the detection surface. The image has to form exactly at the right distance $d_i$ (so that it is in focus) and have exactly the right height $h_i$ (so it fits on the detector).

To understand how lenses transform light, there is just one equation you need to know: \[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}, \] where $d_o$ is the distance from the object to the lens, $d_i$ is the distance from the lens to the image and $f$ is called the focal length of the lens. This entire chapter is dedicated to this equation and its applications. It turns out that curved mirrors behave very similarly to lenses, and the same equation can be used to calculate the properties of the images formed by mirrors. Before we talk about curved mirrors and lenses, we will have to learn about the basic properties of light and the laws of reflection and refraction.

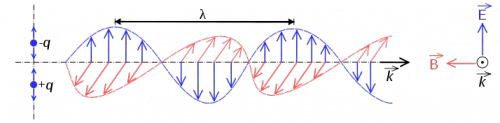

Light

Light is pure energy stored in the form of a travelling electromagnetic wave.

The energy of a light particle is stored in the electromagnetic oscillation. During one moment, light is a “pulse” of electric field in space, and during the next instant it is a “pulse” of pure magnetic energy. Think of sending a “wrinkle pulse” down a long rope – where the pulse of mechanical energy is traveling along the rope. Light is like that, but without the rope. Light is just an electro-magnetic pulse and such pulses happen even in empty space. Thus, unlike most other waves you may have seen until now, light does not need a medium to travel in: empty space will do just fine.

The understanding of light as a manifestation of electro-magnetic energy (electromagnetic radiation) is some deep stuff, which is not the subject of this section. We will get to this, after we cover the concept of electric and magnetic fields, electric and magnetic energy and Maxwell's equations. For the moment, when I say “oscillating energy”, I want you to think of a mechanical mass-spring system in which the energy oscillates between the potential energy of the spring and the kinetic energy of the mass. A photon is a similar oscillation between a “magnetic system” part and the “electric system” part, which travels through space at the speed of light.

In this section, we focus on light rays. The vector $\hat{k}$ in the figure describes the direction of travel of the light ray.

Oh light ray, light ray! Where art thou, on this winter day.

Definitions

Light is made up of “light particles” called photons:

- $p$: a photon.

- $E_p$: the energy of the photon.

- $\lambda$: the wavelength of the photon.

- $f$: the frequency of the photon. (Denoted $\nu$ in some texts.)

- $c$: the speed of light in vacuum. $c=2.9979\times 10^{8}$[m/s].

The speed of light depends on the material in which it travels:

- $v_x$: the speed of light in material $x$.

- $n_x$: the refractive index of material $x$, which tells you how much slower light is in that material relative to the speed of light in vacuum: $v_x=c/n_x$. Air is pretty much like vacuum, so $v_{air} \approx c$ and $n_{air}\approx 1$. There are different types of glass used in lens manufacturing, with $n$ values ranging from 1.4 to 1.7.

Equations

Like all travelling waves, the propagation speed of light is equal to the product of its frequency times its wavelength. In vacuum we have \[ c = \lambda f. \]

For example, red light of wavelength $\lambda=700$[nm] has frequency $f=428.27$[THz], since the speed of light is $c=2.9979\times 10^{8}$[m/s].

The energy of a beam of light is proportional to the intensity of the light (how many photons per second are being emitted) and the energy carried by each photon. The energy of a photon is proportional to its frequency: \[ E_p = h f, \] where $h=6.62607\times 10^{-34}$[J$\cdot$s] is Planck's constant. (Equivalently, $E_p=\hbar\omega$, where $\hbar=h/2\pi=1.05457\times 10^{-34}$[J$\cdot$s] and $\omega=2\pi f$ is the angular frequency.) The above equation is a big deal, since it applies not just to light but to all forms of electromagnetic radiation. The higher the frequency, the more energy per photon there is. Einstein got a Nobel prize for figuring out the photoelectric effect, which is a manifestation of the above equation.
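The two relations above, $c=\lambda f$ and the proportionality between photon energy and frequency, can be combined in a few lines of code. This is a minimal sketch: the function names are made up for this example, and it uses Planck's constant $h\approx 6.62607\times 10^{-34}$[J$\cdot$s] together with the ordinary frequency $f$, so that $E_p=hf$.

```python
C = 2.9979e8        # speed of light in vacuum [m/s]
H = 6.62607e-34     # Planck's constant [J*s]
EV = 1.602177e-19   # one electron-volt in joules

def frequency(wavelength_m):
    """Frequency [Hz] of light with the given vacuum wavelength [m]."""
    return C / wavelength_m

def photon_energy(wavelength_m):
    """Energy [J] of a single photon with the given vacuum wavelength [m]."""
    return H * frequency(wavelength_m)

red = 700e-9  # red light, 700 nm
print(frequency(red) / 1e12, "THz")
print(photon_energy(red), "J")
print(photon_energy(red) / EV, "eV")
```

For red light this gives roughly 428 THz and a photon energy a little under 2 eV; shorter wavelengths (blue, violet, UV) give proportionally more energy per photon.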

The speed of light in a material $x$ with refractive index $n_x$ is \[ v_x = \frac{c}{n_x}. \]

Here is a list of refractive indices for some common materials: $n_{vacuum}\equiv 1.00$, $n_{air} = 1.00029$, $n_{ice}=1.31$, $n_{water}=1.33$, $n_{fused\ quartz}=1.46$, $n_{NaCl}=1.54$, Crown glass 1.52-1.62, Flint glass 1.57-1.75, $n_{sapphire}=1.77$, and $n_{diamond}=2.417$.

Discussion

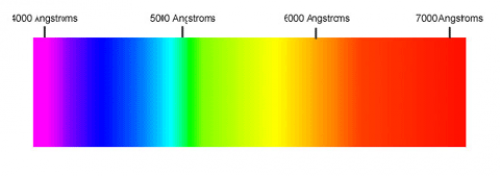

Visible light

Our eyes are able to distinguish certain wavelengths of light as different colours.

| Color | Wavelength (nm) |
|---|---|
| Red | 780 - 622 |
| Orange | 622 - 597 |
| Yellow | 597 - 577 |
| Green | 577 - 492 |
| Blue | 492 - 455 |
| Violet | 455 - 390 |

Note that the wavelengths of light are tiny, so they are measured in units like nanometers $1[\textrm{nm}]=10^{-9}[\textrm{m}]$ or ångströms $1[\textrm{Å}]=10^{-10}[\textrm{m}]$.

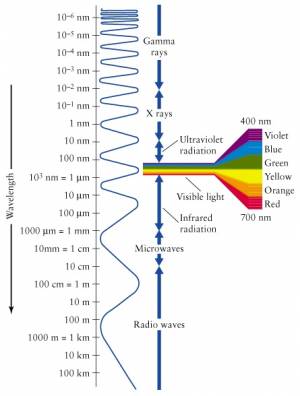

The electromagnetic spectrum

Visible light is only a small part of the electromagnetic spectrum. Waves with frequency higher than that of violet light are called ultraviolet (UV) radiation and cannot be seen by the human eye. Also, frequencies lower than that of red light (infrared) are not seen, but can sometimes be felt as heat.

The EM spectrum extends to all sorts of frequencies (and therefore wavelengths, by $c=\lambda f$). We have different names for the different parts of the EM spectrum. The highest energy particles (highest frequency $\to$ shortest wavelength) are called gamma rays ($\gamma$-rays). We are constantly bombarded by gamma rays coming from outer space with tremendous energy. These $\gamma$-rays are generated by nuclear reactions inside distant stars.

Particles with less energy than $\gamma$-rays are called X-rays. These are still energetic enough that they easily pass through most parts of your body like a warm knife through butter. Only your bones offer some resistance, which is kind of useful in medical imaging since all bone structure can be seen in contrast when taking an X-ray picture.

The frequencies below the visible range (wavelengths longer than that of visible light) are populated by radio waves. And when I say radio, I don't mean specifically radio, but any form of wireless communication. Starting from 4G (or whatever cell phones have gotten to these days), then the top GSM bands at 2.2-2.4GHz, the low GSM bands 800-900MHz, and then going into TV frequencies, FM frequencies (87–108MHz) and finally AM frequencies (153kHz–26.1MHz). It is all radio. It is all electromagnetic radiation emitted by antennas, travelling through space and being received by other antennas.

Light rays

In this section we will study how light rays get reflected off the surfaces of objects and what happens when light rays reach the boundary between two different materials.

Definitions

The speed of light depends on the material where it travels:

- $v_x$: the speed of light in material $x$.

- $n_x$: the refractive index of material $x$, which tells you how much slower light is in that material: $v_x=c/n_x$.

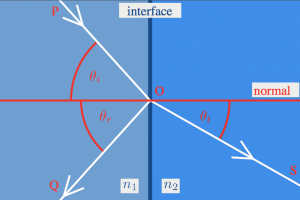

When an incoming ray of light comes to the surface of a transparent object, part of it will be reflected and part of it will be transmitted. We measure all angles with respect to the normal, which is the direction perpendicular to the interface.

- $\theta_{i}$: The incoming or incidence angle.

- $\theta_{r}$: The reflection angle.

- $\theta_{t}$: The transmission angle: the angle of the light that goes into the object.

Formulas

Reflection

Light that hits a reflective surface will bounce back at exactly the same angle at which it came in: \[ \theta_{i} = \theta_{r}. \]

Refraction

The transmission angle of light when it goes into a material with different refractive index can be calculated from Snell's law: \[ n_i \sin\theta_{i} = n_t \sin \theta_{t}. \]

Total internal reflection

Light coming in from a medium with low refractive index into a medium with high refractive index gets refracted towards the normal. If the light travels in the opposite direction (from high $n$ to low $n$), then it will get deflected away from the normal. In the latter case, an interesting phenomenon called total internal reflection occurs, whereby light rays incident at sufficiently large angles with the normal get trapped inside the material. The angle at which this phenomenon starts to kick in is called the critical angle $\theta_{crit}$.

Consider a light ray inside a material of refractive index $n_x$ surrounded by a material with smaller refractive index $n_y$, $n_x > n_y$. To make this more concrete, think of a trans-continental underground optical cable made of glass $n_x=1.7$ surrounded by some plastic with $n_y=1.3$. All light at an angle greater than: \[ \theta_{crit} = \sin^{-1}\left( \frac{n_y}{n_{x}} \underbrace{\sin(90^\circ)}_{=1} \right) = \sin^{-1}\!\left( \frac{n_y}{n_{x}} \right) = \sin^{-1}\!\left( \frac{1.3}{1.7} \right) = 49.88^\circ, \] will get reflected every time it reaches the surface of the optical cable. Thus, if you shine a laser pointer into one end of such a fibre-optic cable in California, the laser light stays trapped inside the cable all the way to Japan (ideally 100% of it comes out; in practice some light is absorbed by the glass along the way). Most high-capacity communication links around the world are based around this amazing property of light. In other words: no total internal reflection means no internet.

Examples

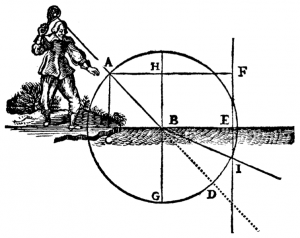

What is wrong in this picture?

Here is an illustration from one of René Descartes' books, which shows a man in funny pants with some sort of lantern which produces a light ray that goes into the water.

Q: What is wrong with the picture?

Hint: Recall that $n_{air}=1$ and $n_{water}=1.33$, so $n_i < n_t$.

Hint 2: What should happen to the angles of the light ray?

A: Suppose that the line $\overline{AB}$ is at a $45^\circ$ angle. Then, after entering the water at $B$, the ray should be deflected towards the normal, i.e., it should pass somewhere between $G$ and $D$. If we wanted to be precise and calculate the transmission angle, then we would use: \[ n_i \sin\theta_{i} = n_t \sin \theta_{t}, \] filled in with the values for air and water \[ 1 \sin(45^\circ) = 1.33 \sin( \theta_{t} ), \] and solving for $\theta_{t}$ (the refracted angle) we would get: \[ \theta_{t} = \sin^{-1}\left( \frac{\sqrt{2}}{2\times1.33} \right) = 32.1^\circ. \] The mistake apparently is due to Descartes' printer who got confused and measured angles with respect to the surface of the water. Don't make that mistake: remember to always measure angles with respect to the normal. The correct drawing should have the light ray going at an angle of $32.1^\circ$ with respect to the line $\overline{BG}$.
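The calculation in the answer above is easy to reproduce. Here is a minimal sketch of Snell's law as a function; the name `refraction_angle` is made up for this example, and all angles are in degrees, measured from the normal.

```python
import math

def refraction_angle(n_i, n_t, theta_i_deg):
    """Transmission angle (degrees from the normal) given by Snell's law.
    Returns None when Snell's law has no solution (no transmitted ray)."""
    s = n_i * math.sin(math.radians(theta_i_deg)) / n_t
    if s > 1.0:
        return None
    return math.degrees(math.asin(s))

# Air into water at 45 degrees, as in the Descartes picture
print(refraction_angle(1.0, 1.33, 45.0))
```

The result is about $32.1^\circ$, smaller than the incidence angle, so the ray bends towards the normal as expected when going from low $n$ to high $n$.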

Explanations

Refraction

To understand refraction you need to imagine “wave fronts” perpendicular to the light rays. Because light comes in at an angle, one part of the wave front will be in material $n_i$ and the other will be in material $n_t$. Suppose $n_i < n_t$; then the part of the wave front in the $n_t$ material will move slower, so the angles of the wave fronts will change. The precise relationship between the angles will depend on the refractive indices of the two materials:

\[ n_i \sin\theta_{i} = n_t \sin \theta_{t}. \]

Total internal reflection

Whenever $n_i > n_t$, we reach a certain point where the formula: \[ n_i \sin\theta_{i} = n_t \sin \theta_{t}, \] breaks down. Once the transmission angle $\theta_t$ reaches $90^\circ$ (the critical transmission angle), increasing $\theta_i$ any further would require $\sin\theta_t > 1$, which is impossible, so the light will not be transmitted at all. Instead, 100% of the light ray will get reflected back into the material.

To find the critical incident angle solve for $\theta_i$ in: \[ n_i \sin\theta_{i} = n_t \sin 90^\circ, \] \[ \theta_{crit} = \sin^{-1}\left( \frac{n_t}{n_{i}} \right). \]

The summary of the “what happens when a light ray comes to a boundary”-story is as follows:

- If $-\theta_{crit} < \theta_i < \theta_{crit}$, then some part of the light will be transmitted at an angle $\theta_t$ and some part will be reflected at an angle $\theta_r=\theta_i$.

- If $\theta_i \geq \theta_{crit}$, then all the light will get reflected at an angle $\theta_r=\theta_i$.

Note that when going from a low $n$ medium into a high $n$ medium, there is no critical angle – there will always be some part of the light that is transmitted.
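The summary above translates directly into code. This is a sketch only; the function names `critical_angle` and `boundary_behaviour` are made up for this example, and angles are in degrees measured from the normal.

```python
import math

def critical_angle(n_i, n_t):
    """Critical incidence angle in degrees, or None if there is none
    (which is the case when going from low n into high n)."""
    if n_i <= n_t:
        return None
    return math.degrees(math.asin(n_t / n_i))

def boundary_behaviour(n_i, n_t, theta_i_deg):
    """Describe what happens to a ray arriving at the boundary."""
    crit = critical_angle(n_i, n_t)
    if crit is not None and abs(theta_i_deg) >= crit:
        return "total internal reflection"
    return "partly transmitted, partly reflected"

# Glass fibre (n=1.7) surrounded by plastic (n=1.3)
print(critical_angle(1.7, 1.3))
print(boundary_behaviour(1.7, 1.3, 30.0))
print(boundary_behaviour(1.7, 1.3, 60.0))
# Air into water: no critical angle exists
print(critical_angle(1.0, 1.33))
```

For the glass and plastic values the critical angle comes out to about $49.88^\circ$, so the ray at $30^\circ$ partly escapes while the ray at $60^\circ$ stays trapped.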

Parabolic shapes

The parabolic curve has a special importance in optics. Consider for example a very weak radio signal coming from a satellite in orbit. If you use just a regular radio receiver, the signal will be so weak as to be indistinguishable from the background noise. However, if you use a parabolic satellite dish to collect the power from a large surface area and focus it on the receiver, then you will be able to detect the signal. This works because of the parabolic shape of the satellite dish: all radio waves coming in from far away will get reflected towards the same point, the focal point of the parabola. Thus, if you put your receiver at the focal point, it will have the signal power from the whole dish redirected right to it.

Depending on the shape of the parabola (which way it curves and how strong the curvature is) the focal point or focus will be at a different place. In the next two sections, we will study parabolic mirrors and lenses. We will use the “horizontal rays get reflected towards the focus”-fact to draw optics diagrams and calculate where images will be formed.

Mirrors

Definitions

To understand how curved mirrors work, we imagine some test object (usually drawn as an arrow, or a candle) and the test image it forms.

- $d_o$: The distance of the object from the mirror.

- $d_i$: The distance of the image from the mirror.

- $f$: The focal length of the mirror.

- $h_o$: The height of the object.

- $h_i$: The height of the image.

- $M$: The magnification $M=h_i/h_o$.

When drawing optics diagrams with mirrors, we can draw the following three rays:

- $R_\alpha$: A horizontal incoming ray which gets redirected towards the focus after it hits the mirror.

- $R_\beta$: A ray that passes through the focus and gets redirected horizontally after it hits the mirror.

- $R_\gamma$: A ray that hits the mirror right in the centre and bounces back at the same angle at which it came in.

Formulas

The following formula can be used to calculate where an image will be formed, given that you know the focal length of the mirror and the distance $d_o$ of the object: \[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}. \]

We follow the convention that distances measured from the reflective side of the mirror are positive, and distances behind the mirror are negative.

The magnification tells us how much bigger the image is compared to the object. It is defined as: \[ M = \frac{h_i}{h_o} = \frac{|d_i|}{|d_o|}. \]

Though it might sound confusing, we will talk about magnification even when the image is smaller than the object; in those cases we say we have fractional magnification.

Examples

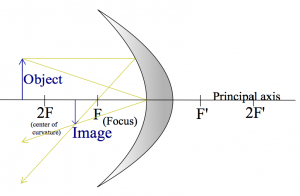

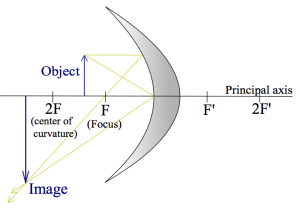

Visual examples

Mirrors reflect light, so it is usual to see an image formed on the same side as where it came from. This leads to the following convention:

- If the image forms on the usual side (in front of the mirror), then we say it has positive distance $d_i$.

- If the image forms behind the mirror, then it has negative $d_i$.

Let us first look at the kind of mirror that you see in metro tunnels: the convex mirror. These mirrors will give you a very broad view, and if someone is coming around the corner the hope is that your peripheral vision will be able to spot them in the mirror and you won't bump into each other.

I am going to draw $R_\alpha$ and $R_\gamma$:

Note that the image is “virtual”, since it appears to form inside the mirror.

Here is a drawing of a concave mirror instead, with the rays $R_\alpha$ and $R_\gamma$ drawn again.

Can you add the ray $R_\beta$ (through the focus)? As you can see, any two rays out of the three are sufficient to figure out where the image will be: just find the point where the rays meet.

Here are two more examples where the object is placed closer and closer to the mirror.

These are meant to illustrate that the same curved surface, and the same object can lead to very different images depending on where the object is placed relative to the focal point.

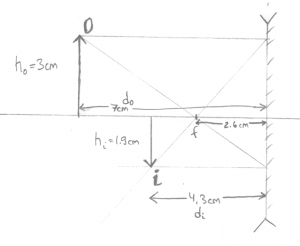

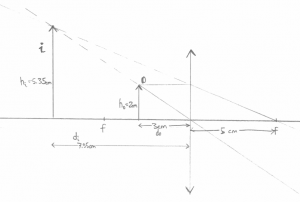

Numerical example 1

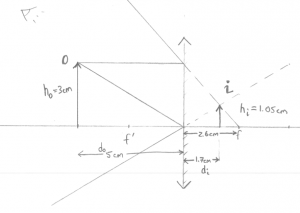

OK, let's do an exercise of the “can you draw straight lines using a ruler” type now. You will need a piece of white paper, a ruler and a pencil. Go get this stuff, I will be waiting right here.

Q: A convex mirror (like in the metro) with focal length $f=-2.6$[cm] is placed at the origin. An object of height 3[cm] is placed $x=5$[cm] away from the mirror. Where will the image be formed?

Geometric answer: Instead of trying to draw a curved mirror, we will draw a straight line. This is called the thin lens approximation (in this case, thin mirror) and it will make the drawing of lines much simpler. Take out the ruler and draw the two rays $R_\alpha$ and $R_\gamma$ as I did:

Then I can use the ruler to measure out $d_i\approx 1.7$[cm].

Formula Answer: Using the formula \[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}, \] with the appropriate values filled in \[ \frac{1}{5} + \frac{1}{d_i} = \frac{1}{-2.6}, \] or \[ d_i = 1.0/(-1.0/2.6 - 1.0/5) = -1.71 \text{[cm]}. \] Nice.

Observe that (1) I used a negative focal length for the mirror since in some sense the focal point is “behind” the mirror, and (2) the image is formed behind the mirror, which means that it is virtual: this is where the arrow will appear to the observing eye drawn in the top left corner.

Numerical example 2

Now we have a concave mirror with focal length $f=2.6$[cm] and we measure the distances the same way (positive to the left).

Q: The same object of height 3[cm] is placed at $d_o=7$[cm] from the mirror. Where will the image form? What is the height of the image?

Geometric answer: Taking out the ruler, you can choose to draw any of the three rays. I picked $R_\alpha$ and $R_\beta$ since they are the easiest to draw:

Then measuring with the ruler I find that $d_i \approx 4.3$[cm], and that the image is height $h_i\approx-1.9$[cm], where negative height means that the image is upside down.

Formula Answer: We start from \[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}, \] and fill in what we know \[ \frac{1}{7} + \frac{1}{d_i} = \frac{1}{2.6}, \] then solve for $d_i$: \[ d_i = 1.0/(1.0/2.6 - 1.0/7.0) = 4.136 \text{[cm]}. \] To find the height of the image we use \[ \frac{h_i}{h_o} = \frac{d_i}{d_o}, \] so \[ h_i = 3 \times \frac{4.136}{7.0} = 1.77 \text{[cm]}. \] You still need the drawing to figure out that the image is inverted though.

Generally, I would trust the numeric answers from the formula more, but read the signs of the answers from the drawing. Distances in front of the mirror are positive whereas images formed behind the mirror have negative distance.
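Both numerical examples can be reproduced with a few lines of code. This is a minimal sketch: the function names are made up for this example, and the sign convention is the one used above (negative $f$ for the convex mirror, negative $d_i$ for an image behind the mirror). As in the text, the height is computed from magnitudes only, so whether the image is upright or inverted still has to be read from the drawing.

```python
def image_distance(d_o, f):
    """Solve 1/d_o + 1/d_i = 1/f for d_i (same length units as the inputs)."""
    return 1.0 / (1.0 / f - 1.0 / d_o)

def image_height(h_o, d_o, d_i):
    """Size of the image from M = |d_i| / |d_o| (magnitude only)."""
    return h_o * abs(d_i) / abs(d_o)

# Example 1: convex mirror, f = -2.6 cm, object at 5 cm
d_i1 = image_distance(5.0, -2.6)
print(d_i1)                      # negative: virtual image behind the mirror

# Example 2: concave mirror, f = 2.6 cm, object of height 3 cm at 7 cm
d_i2 = image_distance(7.0, 2.6)
print(d_i2, image_height(3.0, 7.0, d_i2))
```

The printed values should match the formula answers above: about $-1.71$[cm] for the first mirror, and about $4.14$[cm] with an image height of about $1.77$[cm] for the second.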

Links

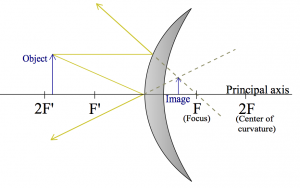

Lenses

Definitions

To understand how lenses work, we again imagine some test object (an arrow) and the test image it forms.

- $d_o$: The distance of the object from the lens.

- $d_i$: The distance of the image from the lens.

- $f$: The focal length of the lens.

- $h_o$: The height of the object.

- $h_i$: The height of the image.

- $M$: The magnification $M=h_i/h_o$.

When drawing lens diagrams, we use the following representative rays:

- $R_\alpha$: A horizontal incoming ray which gets redirected towards the focus after it passes through the lens.

- $R_\beta$: A ray that passes through the focus and gets redirected horizontally after the lens.

- $R_\gamma$: A ray that passes exactly through the centre of the lens and travels in a straight line.

Formulas

\[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f} \]

\[ M = \frac{h_i}{h_o} = \frac{|d_i|}{|d_o|} \]

Examples

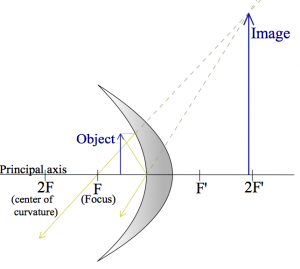

Visual

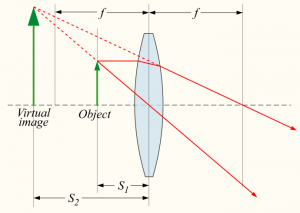

First consider the typical magnifying glass situation. You put the object close to the lens, and looking from the side, the object will appear magnified.

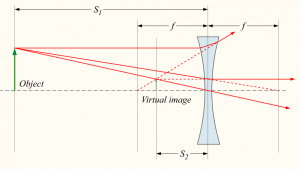

A similar setup with a diverging lens. This time the image will appear to the observer to be smaller than the object.

Note that in the above two examples, if you used the formula you would get a negative $d_i$ value since the image is not formed on the “right” side. We say the image is virtual.

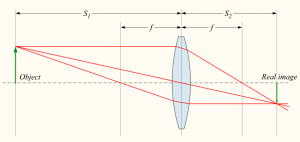

Now for an example where a real image is formed:

In this example all the quantities $f$, $d_o$ and $d_i$ are positive.

Numerical

An object is placed at a distance of 3[cm] from a magnifying glass of focal length 5[cm]. Where will the object appear to be?

You should really try this on your own. Just reading about light rays is kind of useless. Try drawing the above by yourself with the ruler. Draw the three kinds of rays: $R_\alpha$, $R_\beta$, and $R_\gamma$.

Here is my drawing.

Numerically we get \[ \frac{1}{d_o} + \frac{1}{d_i} = \frac{1}{f}, \] \[ \frac{1}{3.0} + \frac{1}{d_i} = \frac{1}{5.0}, \] \[ d_i = 1.0/(1.0/5.0 - 1.0/3.0) = -7.50 \text{[cm]}. \]

As you can see, drawings are not very accurate. Always trust the formula for the numeric answers to $d_o$, $d_i$ type of questions.
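To get a feel for how the image changes as the object moves, here is a small sketch that applies the lens formula for several object distances. The function names and the list of sample distances are made up for this example; only the first case ($d_o=3$[cm], $f=5$[cm]) comes from the question above.

```python
def image_distance(d_o, f):
    """Solve 1/d_o + 1/d_i = 1/f for d_i. Undefined when d_o == f."""
    return 1.0 / (1.0 / f - 1.0 / d_o)

def describe_image(d_o, f):
    """Return (d_i, kind, magnification) for an object at distance d_o."""
    d_i = image_distance(d_o, f)
    kind = "real" if d_i > 0 else "virtual"
    return d_i, kind, abs(d_i) / abs(d_o)

f = 5.0  # focal length of the magnifying glass [cm]
for d_o in (3.0, 4.0, 7.5, 10.0, 20.0):   # sample object distances [cm]
    d_i, kind, M = describe_image(d_o, f)
    print(f"d_o={d_o:5.1f}  d_i={d_i:7.2f}  {kind:7s}  M={M:.2f}")
```

Objects closer than the focal length give a negative $d_i$ (a magnified virtual image, the magnifying glass situation), while objects farther away than the focal length give a positive $d_i$ (a real image).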

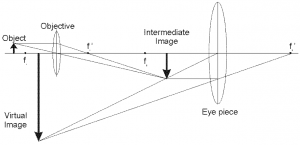

Multiple lenses

Imagine that the “output” image formed by the first lens is the “input” image to a second lens.

It may look complicated, but if you solve the problem in two steps, (1) how the object forms an intermediary image, and (2) how the intermediary image forms the final image, you will get things right.
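The two-step procedure can be sketched as follows. Everything specific here is an assumption made for illustration: the function names, the two focal lengths, the object distance and the separation between the lenses are hypothetical values, not taken from the figure. The key step is that the object distance for the second lens is the lens separation minus the image distance of the first lens.

```python
def image_distance(d_o, f):
    """Solve 1/d_o + 1/d_i = 1/f for d_i."""
    return 1.0 / (1.0 / f - 1.0 / d_o)

def two_lens_image(d_o, f1, f2, separation):
    """Image formed by two thin lenses placed `separation` apart.
    Returns (d_i1, d_i2, total magnification magnitude)."""
    # Step 1: the object forms an intermediary image through lens 1
    d_i1 = image_distance(d_o, f1)
    # Step 2: the intermediary image acts as the object for lens 2
    d_o2 = separation - d_i1
    d_i2 = image_distance(d_o2, f2)
    magnification = abs(d_i1 / d_o) * abs(d_i2 / d_o2)
    return d_i1, d_i2, magnification

# Hypothetical setup, all lengths in cm
print(two_lens_image(d_o=30.0, f1=10.0, f2=10.0, separation=35.0))
```

With these made-up numbers the intermediary image forms 15 cm behind the first lens, which is 20 cm in front of the second lens, and the final image forms 20 cm behind the second lens at half the size of the original object.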

You can also trace all the rays as they pass through the double-lens apparatus:

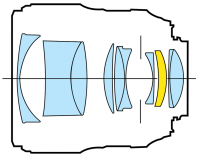

We started this chapter talking about real cameras, so I want to finish on that note too. To form a clear image, with variable focus and possibly zoom functionality, we have to use a whole series of lenses, not just one or two.

For each lens though, we can use the formula and calculate the effects of that lens on the light coming in.

Note that the real world is significantly more complicated than the simple ray picture which we have been using until now. For one, each frequency of light will have a slightly different refraction angle, and sometimes the lens shapes will not be perfect parabolas, so the light rays will not be perfectly redirected towards the focal point.

Discussion

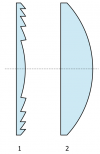

Fresnel lens

Thicker lenses are stronger. The reason is that the curvature of a thick lens is bigger and thus light will be refracted more when it hits the surface. The actual thickness of the lens, however, is of no importance: the way rays get deflected by a lens depends only on the angles of incidence at its surfaces. Indeed, we can cut out all the middle part of the lens and leave only the highly curved surface parts. This is called a Fresnel lens and it is used in car headlights.

interference, diffraction, double slit experiment, dispersion

Electricity and Magnetism

Electricity & magnetism

This course is about all things electrical and magnetic. Every object which has a mass $m$ will be affected by gravity. Similarly, every object which has a charge $q$ will feel the electric force $\vec{F}_e$. Furthermore, if a charge is moving then it will also be affected by the magnetic force $\vec{F}_b$. The formula for the electric force between objects is very similar to the formula for the gravitational force, but the magnetism stuff is totally new. Get ready for some mind expansion!

Understanding the laws of electricity and magnetism will make you very powerful. Have you heard of Nikola Tesla? He is a pretty cool guy, and he was a student of electricity and magnetism just like you:

This course requires a good understanding of vectors and basic calculus techniques. If you feel a little rusty on these subjects, I highly recommend that you review the general ideas of vectors and integration before you start reading the material.

Below is a short overview of the topics which we will discuss.

Electricity

We start off with a review of Newton's formula for the gravitational force ($F_g=\frac{GMm}{r^2}$), then learn about electrostatics ($F_e=\frac{kQq}{r^2}$) and discuss three related concepts: the electric potential energy ($U_e=\frac{kQq}{r}$), the electric field ($E=\frac{kQ}{r^2}$, $F_e=qE$) and the electric potential ($V=-\int E\;dx$, $E=-\frac{dV}{dx}$).
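Since the gravitational and electric force formulas have the same form, it is instructive to compare their sizes. The sketch below does this for a proton and an electron. The function names are made up for this example, the constants are rounded textbook values, and the separation $r$ is an assumed value of roughly the size of a hydrogen atom (the ratio of the two forces does not depend on it, since both fall off as $1/r^2$).

```python
K = 8.9876e9          # Coulomb constant [N m^2 / C^2]
G = 6.674e-11         # gravitational constant [N m^2 / kg^2]
E_CHARGE = 1.602e-19  # magnitude of the electron/proton charge [C]
M_P = 1.6726e-27      # proton mass [kg]
M_E = 9.109e-31       # electron mass [kg]

def electric_force(Q, q, r):
    """Magnitude of the Coulomb force between charges Q and q [N]."""
    return K * abs(Q * q) / r**2

def gravitational_force(M, m, r):
    """Magnitude of the gravitational force between masses M and m [N]."""
    return G * M * m / r**2

r = 5.3e-11  # assumed separation [m], about the size of a hydrogen atom
F_e = electric_force(E_CHARGE, E_CHARGE, r)
F_g = gravitational_force(M_P, M_E, r)
print(F_e, F_g, F_e / F_g)
```

The electric force comes out stronger than gravity by a factor of about $10^{39}$, which is why gravity can be ignored completely when studying atoms.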

Circuits

Electrostatic interactions between two points in space A and B take on a whole new nature if a charge-conducting wire is used to connect the two points. Charge will be able to flow from one point to the other along the wire. This flow of charge is called electric current. Current is denoted as $I$[A] and measured in Amperes.

The flow of current is an abstract way of describing moving charges. If you understand that well, you can start to visualize stuff and it will all be simple. The current $I$[A] is the total amount of charge (think “total number of electrons”) passing through the wire in one second.

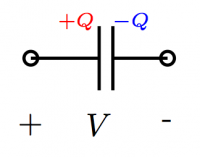

Electric charge can be “accumulated” in charge containers called capacitors.

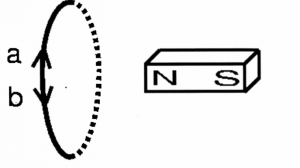

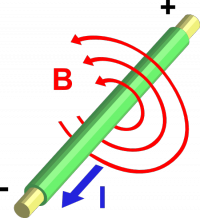

Magnetism

Understanding current is very important because each electron, by virtue of its motion through space, is creating a magnetic field around it. The strength of the magnetic field created by each electron is tiny; we could just ignore it.

However, if there is a current of 1[A] flowing through the wire, do you know how many electrons that makes? It means there is a flow of $6.242 \times 10^{18}$ electrons per second in that wire. This is something we can't ignore. The magnetic field created by this wire will be quite powerful. You can use the magnetic field to build electromagnets (to lift cars in junk yards, or for magnetic locks: when you are fighting the front doors of McConnell to get into Blues Pub after 9PM, you are fighting with the magnetic force). You can also have two magnets push on each other while turning an engine forward; this is called an electric motor (think electric cars).
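The electron count quoted above follows from dividing the current by the magnitude of the electron charge. Here is a quick sketch in Python; the function name `electrons_per_second` is made up for this illustration and the charge value is the rounded one used later in this text.

```python
# Magnitude of the electron charge in coulombs (rounded value used in this text).
ELECTRON_CHARGE = 1.602e-19  # [C]

def electrons_per_second(current_amperes):
    """Number of electrons passing a point in the wire each second."""
    # 1 [A] = 1 [C/s], so divide the charge per second by the charge per electron.
    return current_amperes / ELECTRON_CHARGE

print(f"{electrons_per_second(1.0):.3e} electrons per second")
```

Doubling the current doubles the count, which is why even a modest current adds up to a magnetic field we can't ignore.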

But hey, you don't have time to learn all of this now. Read a couple of pages and then go practice on the exams from previous years! If you have any problems come ask here: http://bit.ly/XYOhE1 (only on April 21st, 22nd, and 23rd)

Links

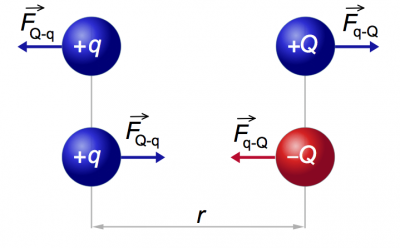

Electrostatics

Electrostatics is the study of charge and the electric forces that exist between charges. The same way that the force of gravity exists between any two objects with mass, the electric force (Coulomb force) exists between any two charged objects. We will see, however, that unlike gravity which is always attractive (tends to bring masses closer together), the electrostatic force can sometimes be repulsive (tends to push charges apart).

Electrostatics is a big deal. You are alive right now, because of the electric forces that exist between the amino acid chains (proteins) in your body. The attractive electric force that exists between protons and electrons helps to make atoms stable. The electric force is also an important factor in many chemical reactions.

The study of charged atoms and their chemistry can be kind of complicated. Each atom contains many charged particles: the positively charged protons and the negatively charged electrons. For example, a single iron atom has 26 positively charged particles (protons) in the nucleus and 26 negatively charged electrons in various energy shells surrounding the nucleus. To keep things simple, in this course we will study the electric force and potential energy of only a few charges at a time.

Example: Cathode ray tube

When I was growing up, television sets and computer monitors were bulky objects in which electrons were accelerated and crashed onto a phosphorescent surface to produce the image on the screen. A cathode ray tube (CRT) is a vacuum tube containing an electron gun (a source of electrons). What is the speed of the electrons which produce the image on an old-school TV?

Suppose the voltage used to drive the electron gun is $4000$[V]. Since voltage is energy per unit charge, this means that each electron that goes through the electron gun will lose the following amount of potential energy \[ U_e = q_e V = 1.602\times10^{-19} \ \times \ 4000 \qquad \text{[J]}. \] In fact the potential energy is not lost but converted to kinetic energy \[ U_e \to K_e = \frac{1}{2}m_e v^2 = \frac{1}{2}(9.109\times10^{-31})v^2, \] where we have used the formula for the kinetic energy of an object with mass $m_e = 9.109\times10^{-31}$ [kg]. Numerically we get: \[ 1.602\times10^{-19} \ \times \ 4000 = \frac{1}{2}(9.109\times10^{-31})v^2 \qquad \text{[J]}, \] where $v$, the speed of the electrons, is the only unknown in the equation. Solving for $v$ we find that the electrons inside the TV are flying at \[ v = \sqrt{\frac{2 q_e V}{m_e}} = \sqrt{\frac{2 \times 1.602\times10^{-19} \times 4000 }{9.109\times10^{-31}}} = 3.751\times 10^{7} \text{[m/s]}. \] This is pretty fast.
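If you want to try other voltages, the calculation above is easy to script. This is a minimal sketch that uses the same constants as the text and the same non-relativistic formula $q_eV=\frac{1}{2}m_ev^2$; the function name `electron_speed` is just a label chosen here.

```python
import math

Q_E = 1.602e-19   # magnitude of the electron charge [C]
M_E = 9.109e-31   # electron mass [kg]

def electron_speed(voltage):
    """Speed of an electron that starts from rest and converts q_e*V into kinetic energy."""
    return math.sqrt(2 * Q_E * voltage / M_E)

v = electron_speed(4000)
print(f"v = {v:.3e} m/s")
```

Because the speed depends on the square root of the voltage, you need four times the voltage to double the speed.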

Concepts

- $q$: Electric charge of some particle or object. It is measured in Coulombs $[C]$. If there are multiple charges in the problem we can call them $q,Q$ or $q_1, q_2, q_3$ to distinguish them.

- $\vec{r}$: The vector-distance between two charges.

- $r \equiv |\vec{r}|$: Distance between two charges, measured in meters $[m]$

- $\hat{r} \equiv \frac{ \vec{r} }{ |\vec{r}|}$: A direction vector (unit length vector) in the $\vec{r}$ direction.

- $\vec{F}_e$: Electric force strength and direction, measured in Newtons $[N]$

- $U_e$: The electric potential energy, measured in Joules $[J]=[N*m]$

- $\varepsilon_0=8.8542\ldots\times 10^{-12}$ $\left[\frac{\mathrm{F}}{\mathrm{m}}\right]$: The permittivity of free space, which is one of the fundamental constants of Nature.

- $k_e=8.987551\times 10^9$ $\left[\frac{\mathrm{Nm^2}}{\mathrm{C}^{2}}\right]$: The electric constant. It is related to the permittivity of free space by $k_e=\frac{1}{4 \pi \varepsilon_0}$.

Charge

One of the fundamental properties of matter is charge, which is measured in Coulombs [C]. An electron has the charge $q_e=-1.602\times10^{-19}$ [C]. The electric charge of the nucleus of a Helium atom is $q_{He}=2\times1.602\times10^{-19}$ [C], because it contains two protons and each proton has a charge of $1.602\times10^{-19}$ [C].

Unlike mass, of which there is only one kind, there are two kinds of charge: positive and negative. Using the sign (positive vs. negative) to denote the “type” of charge is nothing more than a convenient mathematical trick. We could have instead called the two types of charges “hot” and “cold”. The important thing is that there are two kinds with “opposite” properties in some sense. In what sense opposite? In the sense of their behaviour in physical experiments. If the two charges are of the same kind, then they try to push each other away, but if the two charges are of different kinds then they will attract each other.

Formulas

Coulomb's law

Two point charges $Q$ and $q$ placed at a distance $r$ meters apart will interact via the electric force. The magnitude of the electric force is given by the following formula \[ |\vec{F}_e({r})| = \frac{k_e|Qq|}{r^2} \qquad \text{[N]}, \] which is known as Coulomb's law.

If the charges are different (one positive and one negative) then the force will be attractive – it will tend to draw the two charges together. If the two charges are of the same sign then the force will be repulsive.

Electric potential energy

Every time you have a force, you can calculate the potential energy associated with that force, which represents the total effect (the integral) of the force over some distance. We now define the electric potential energy $U_e$, i.e., how much potential energy is stored in the configuration of two charges $Q$ and $q$ separated by a distance of $r$. The formula is \[ U_e({r}) = \frac{k_eQq}{r} \qquad \text{[J]}, \] which is very similar to the formula for $|\vec{F}_e({r})|$ above, but with a one-over-r relationship instead of a one-over-r-squared.

We learned in mechanics that often the most elegant way to solve problems in physics is not to calculate the forces involved directly, but to use the principle of conservation of energy. By simple accounting of the different types of energy: kinetic (K), potential (U) and the work done (W), we can often arrive at the answer.

In mechanics we studied the gravitational potential energy $U_g=mgh$ and the spring potential energy $U_s=\frac{1}{2}kx^2$ associated with the gravitational force and spring force respectively. Now you have a new kind of potential energy to account for: $U_e=\frac{k_eQq}{r}$, where $k_e$ is the electric constant (not the spring constant $k$).

Examples

Example 1

A charge $Q=20$[$\mu$C] is placed 2.2 [m] away from a second charge $q=3$[$\mu$C]. What will be the magnitude of the force between them? Is the force attractive or repulsive?
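One way to check your answer to Example 1 is to plug the numbers into Coulomb's law. The sketch below uses the value of $k_e$ from the concepts list; the helper names `coulomb_force_magnitude` and `interaction_type` are invented for this illustration, and both charges are assumed to be nonzero.

```python
K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def coulomb_force_magnitude(Q, q, r):
    """Magnitude of the electric force between point charges Q and q [C] at distance r [m]."""
    return K_E * abs(Q * q) / r**2

def interaction_type(Q, q):
    """Charges of the same sign repel, charges of opposite sign attract."""
    return "repulsive" if Q * q > 0 else "attractive"

# Example 1: Q = 20 microcoulombs, q = 3 microcoulombs, r = 2.2 m.
F = coulomb_force_magnitude(20e-6, 3e-6, 2.2)
print(f"|F_e| = {F:.4f} N, {interaction_type(20e-6, 3e-6)}")
```

The force comes out to roughly a tenth of a Newton, and it is repulsive because both charges are positive.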

Example 2

A charge $Q=6$[$\mu$C] is placed at the origin $(0,0)$ and a second charge $q=-5$[$\mu$C] is placed at $(3,0)$ [m]. What will be the force on $q$? Express your answer as a vector.

If the charge $q$ was placed instead at $(0,3)$[m], what would be the resulting electric force vector?

What if the charge $q$ is placed at $(2,4)$[m]? What will be the electric force on $q$ then? Express your answer both in terms of magnitude-and-direction and in component notation.
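The three parts of Example 2 differ only in where $q$ sits, so the vector form of Coulomb's law, $\vec{F}_e = \frac{k_eQq}{r^2}\hat{r}$, can be evaluated in a loop. This is a sketch for checking your own work; the function name `force_on_q` and the tuple representation of positions are choices made here, not notation from the text.

```python
import math

K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def force_on_q(Q, q, pos_Q, pos_q):
    """Electric force vector (Fx, Fy) on q due to Q, in Newtons."""
    rx, ry = pos_q[0] - pos_Q[0], pos_q[1] - pos_Q[1]  # vector from Q to q
    r = math.hypot(rx, ry)
    scale = K_E * Q * q / r**2   # negative when the force is attractive
    return (scale * rx / r, scale * ry / r)  # scale times the unit vector r_hat

Q, q = 6e-6, -5e-6
for position in [(3, 0), (0, 3), (2, 4)]:
    Fx, Fy = force_on_q(Q, q, (0, 0), position)
    angle = math.degrees(math.atan2(Fy, Fx))
    print(position, f"|F| = {math.hypot(Fx, Fy):.5f} N at {angle:.1f} degrees")
```

Since the charges have opposite signs, each force vector points from $q$ back towards the origin where $Q$ sits.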

Example 3

A fixed charge of $Q=3$[$\mu$C] and a movable charge $q=2$ [$\mu$C] are placed at a distance of 30 [cm] apart. If the charge $q$ is released it will fly off into the distance. How fast will it be going when it is $4$[m] away from $Q$?
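Example 3 is an energy-conservation problem: the charge starts at rest, so the kinetic energy it gains equals the drop in electric potential energy, $K_f = U_e(r_i) - U_e(r_f)$. The sketch below computes that energy. Turning it into a speed requires the mass of the moving charge, which the problem statement above does not give, so the mass in the last line is a made-up value used only to show the final step.

```python
import math

K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def potential_energy(Q, q, r):
    """Electric potential energy U_e = k_e*Q*q/r in Joules."""
    return K_E * Q * q / r

def kinetic_energy_gained(Q, q, r_start, r_end):
    """Energy conservation for a charge released from rest: K_f = U_i - U_f."""
    return potential_energy(Q, q, r_start) - potential_energy(Q, q, r_end)

def speed(kinetic_energy, mass):
    """Invert K = (1/2)*m*v^2; the mass has to be supplied by the problem."""
    return math.sqrt(2 * kinetic_energy / mass)

K_f = kinetic_energy_gained(3e-6, 2e-6, 0.30, 4.0)
print(f"K_f = {K_f:.4f} J")
# Hypothetical mass of 1 gram, NOT given in the example.
print(f"v = {speed(K_f, mass=1e-3):.2f} m/s for a hypothetical 1 g object")
```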

Explanations

Coulomb's law

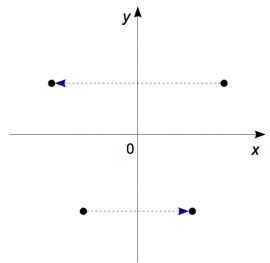

The electric force is a vector quantity so the real formula for the electric force must be written as a vector.

Let $\vec{r}$ be the vector distance from $Q$ to $q$. The electric force on the charge $q$ is \[ \vec{F}_e({r}) = \frac{k_eQq}{r^2}\hat{r} \qquad \text{[N]}, \] where $\hat{r}$ is a direction vector pointing away from $Q$. This formula will automatically take care of the direction of the vector in both the attractive and repulsive cases. If $Q$ and $q$ have the same sign, the force will be in the positive $\hat{r}$ direction (repulsive force), but if the charges have opposite signs, the force will be in the negative $\hat{r}$ direction (attractive force).

In general, it is easier to think of the magnitude of the electric force, and then add the vector part manually by thinking in terms of attractive/repulsive rather than to depend on the sign in the vector equation to figure out the direction for you.

From force to potential energy

The potential energy of a configuration of charges is defined as the negative of the work done by the electric force while the charges are brought into this configuration: $U_e = - W_{done}$. Equivalently, it is the work that an external agent has to do against the electric force in order to assemble the configuration.

To derive the potential energy formula for charges $Q$ and $q$ separated by a distance $R$ in meters, we can imagine that $Q$ is at the origin and the charge $q$ starts off infinitely far away on the $x$-axis and is brought to a distance of $R$ from the origin slowly. The electric potential energy is given by the following integral: \[ \Delta U_e = - W_{done} = - \int_{r=\infty}^{r=R} \vec{F}_{e}({r}) \cdot d\vec{s}. \] By bringing the charge $q$ from infinitely far away we make sure that the initial potential energy is going to be zero. Just like with all potentials, we need to specify a reference point with respect to which we will measure it. We define the potential energy at infinity to be zero, so that $\Delta U_e = U_e({R})-U_e(\infty) = U_e({R})-0= U_e({R})$.

OK, so the charge $q$ starts at $(\infty,0)$ and we sum up all the work done along the way to the coordinate $(R,0)$. Note that we need an integral to calculate the work, because the strength of the force changes during the process.

Before we do the integral, we have to think about the direction of the force and the direction of the integration steps. If we want to obtain the correct sign, we had better be clear about all the negative signs in the expression:

- The negative sign in front of the integral comes from the definition $U_e \equiv - W_{done}$.

- The electric force on the charge $q$ when it is a distance $x$ away will be $\vec{F}_e({x}) = \frac{k_eQq}{x^2}\hat{x}$. Therefore, if we want to move the charge $q$ towards $Q$, we have to apply an external force $\vec{F}_{ext}$ on the charge in the opposite direction. The external force needed to hold the charge in place (or to move it towards the origin at a constant speed) is given by $\vec{F}_{ext}({x}) = -\frac{k_eQq}{x^2}\hat{x}$. The work done by this external force is the negative of the work done by the electric force, which is why it ends up stored as potential energy.

- The displacement vector is $d\vec{s} = dx\; \hat{x}$. The steps point in the negative $x$-direction, since we start from $+\infty$ and move back towards the origin, but this is already taken care of by the limits of integration, which run from $x=\infty$ down to $x=R$. We must not add a second negative sign by hand.

The negative of the $W_{done}$ from $\infty$ to $R$ is given by the following integral: \[ \begin{align} \Delta U_e & = - W_{done} = - \int_{r=\infty}^{r=R} \vec{F}_{e}({r}) \cdot d\vec{s} \nl & = -\int_{x=\infty}^{x=R} \left( \frac{k_eQq}{x^2}\hat{x}\right) \cdot \left( \hat{x}\,dx\right) \nl & = - \int_{\infty}^{R} \frac{k_eQq}{x^2} \ (\hat{x}\cdot\hat{x}) \ dx \nl & = - k_eQq \int_{\infty}^{R} \frac{1}{x^2} \ 1 \ dx \nl & = - k_eQq \left[ \frac{-1}{x} \right]_{\infty}^{R} \nl & = k_eQq \left[ \frac{1}{R} - \frac{1}{\infty} \right] \nl & = \frac{k_eQq}{R}. \end{align} \]

So we have \[ \Delta U_e \equiv U_{ef} - U_{ei} = U_e({R}) - U_e(\infty), \] and since $U_e(\infty)=0$ we have derived that \[ U_e({R}) = \frac{k_eQq}{R}. \]

We say that the work done to bring the two charges together is stored in the electric potential energy $U_e({r})$ because if we were to let go of these charges they would fly away from each other, and give back all that energy as kinetic energy.

From potential to force

We can also use the relationship between force and potential energy in the other direction. If I were to tell you that the potential energy of two charges is \[ U({r}) = \frac{k_eQq}{r}, \] then, by definition, the force associated with that potential is given by \[ \vec{F}({r}) \equiv - \frac{dU({r}) }{dr}\hat{r} = \frac{k_eQq}{r^2} \hat{r}. \]
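The relationship between force and potential energy can be checked numerically by estimating the derivative $-\frac{dU}{dr}$ with a small finite difference and comparing it with Coulomb's law. The sketch below does this for the charges of Example 1; the function names and the step size `dr` are choices made for this illustration.

```python
K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def U(Q, q, r):
    """Electric potential energy of two point charges."""
    return K_E * Q * q / r

def force_from_potential(Q, q, r, dr=1e-6):
    """Radial force component F_r = -dU/dr, estimated with a central difference."""
    return -(U(Q, q, r + dr) - U(Q, q, r - dr)) / (2 * dr)

Q, q, r = 20e-6, 3e-6, 2.2
numeric = force_from_potential(Q, q, r)
coulomb = K_E * Q * q / r**2
print(f"-dU/dr = {numeric:.6f} N, Coulomb's law = {coulomb:.6f} N")
```

The two numbers agree to many decimal places, as expected from differentiating $\frac{1}{r}$ to get $-\frac{1}{r^2}$.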

Discussion

More intuition about charge

Opposite charges cancel out. If you have a sphere with $5$[$\mu$C] of charge on it, and you add some negative charge to it, say $-1$[$\mu$C], then the resulting charge on the sphere will be $4$[$\mu$C].

Charged particles will redistribute themselves between different objects brought into contact so as to minimize the repulsive force between them. This means that charge is always maximally spread out over the entire surface of the object. For example, if charge is placed on a metal ball made of conducting material the charge will all go to the surface of the body and will not penetrate into the interior.

As another example, consider two metal spheres of radii $R_1$ and $R_2$ that are placed far apart and connected by a conducting wire, with a total charge $Q$ placed on the system. Because charge is free to move along the wire, it will redistribute itself until both spheres are at the same electric potential, $\frac{k_eQ_1}{R_1} = \frac{k_eQ_2}{R_2}$ (the electric potential is discussed in the next section). The charge on each sphere will therefore be proportional to its radius: \[ Q_1 = \frac{R_1}{R_1+R_2} Q, \qquad Q_2 = \frac{R_2}{R_1+R_2} Q. \qquad \textrm{[C]} \] Note that $Q_1 + Q_2=Q$ as expected.

Links

Electric field

We will now discuss a new language for dealing with electrostatic problems.

So far we saw that the electric force, $\vec{F}_e$, exists between two charges $Q$ and $q$, and that the formula is given by Coulomb's law $\vec{F}_e=\frac{k_eQq}{r^2}\hat{r}$. How exactly this force is produced, we don't know. We just know from experience that it exists.

The electric field is an intuitive way to explain how the electric force works. We imagine that the charge $Q$ creates an electric field everywhere in space described by the formula $\vec{E} = \frac{k_eQ}{r^2}\hat{r}$ $[N/C]$. We further say that any charge placed in an electric field will feel an electric force proportional to the strength of the electric field. A charge $q$ placed in an electric field of strength $\vec{E}$ will feel an electric force $\vec{F}_e = q \vec{E}=\frac{k_eQq}{r^2}\hat{r}$.

This entire chapter is about this change of narrative when explaining electrostatic phenomena. There is no new physics. The electric field is just a nice way of thinking in terms of cause and effect. The charge $Q$ caused the electric field $\vec{E}$ and the electric field $\vec{E}$ caused the force $\vec{F}_e$ on the charge $q$.

You have to admit that this new narrative is nicer than just saying that somehow the electric force “happens”.

Concepts

Recall the concepts from electrostatics:

- $q,Q,q_1,q_2$: The electric charge of some particle or object. It is measured in Coulombs $[C]$.

- $\vec{F}$: Electric force strength and direction, measured in Newtons $[N]$

- $U$: Potential energy (electrical), measured in Joules $[J]=[N*m]$

- $\vec{r}$: The vector-distance between two charges.

- $r \equiv |\vec{r}|$: Distance between two charges, measured in meters $[m]$

- $\hat{r}$: A direction vector (unit length vector) in the $\vec{r}$ direction.

In this section we will introduce a new language to talk about the same ideas.

- $\vec{E}$: Electric field strength and direction, measured in $[N/C]$ or, equivalently, $[V/m]$

- $V$: Electric potential, measured in Volts $[V]$

Formulas

Electric field

The electric field caused by a charge $Q$ at a distance $r$ is given by \[ \vec{E}({r}) = \frac{kQ}{r^2}\hat{r} \qquad \text{[N/C]=[V/m]}. \]

Electric force

When asked to calculate the force between two particles we simply have to multiply the electric field times the charge \[ \vec{F}_e({r}) = q\vec{E}({r}) = q\frac{kQ}{r^2}\hat{r} = \frac{kQq}{r^2}\hat{r} \qquad \text{[N]}. \]

Electric potential

The electric potential $V$ (not to be confused with the electric potential energy $U_e$) of a charge $Q$ is given by \[ V({r})= \frac{kQ}{r} \qquad \text{[V]} \equiv \text{[J/C]} \]

Electric potential energy

The electric potential energy necessary to bring a charge $q$ to a point where an electric potential $V({r})$ exists is given by \[ U_e({r}) = q V({r}) = q\frac{kQ}{r} = \frac{kQq}{r} \qquad \text{[J]}. \]

Relations between the above four quantities

We can think of the electric field $\vec{E}$ as an electric force per unit charge. Indeed the dimensions of the electric field are $\text{[N/C]}$, so the electric field tells us the amount of force that a test charge of $q=1$[C] would feel at that point. Similarly, the electric potential $V$ is the electric potential energy per unit charge, as can be seen from the dimensions: $\text{[V]}=\text{[J/C]}$.

In the electrostatics chapter we saw that \[ U_e({R}) = - W_{done} = - \int_{\infty}^R \vec{F}_e({r}) \cdot d\vec{s}, \qquad \qquad \vec{F}_e({r}) = - \frac{dU_e({r}) }{dr}\hat{r}. \]

An analogous relation exists between the per unit charge quantities. \[ V({R}) = - \int_{\infty}^R \vec{E}({r}) \cdot d\vec{s}, \qquad \qquad \qquad \qquad \ \ \vec{E}({r}) = - \frac{dV({r}) }{dr}\hat{r}. \]
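The integral relation between $\vec{E}$ and $V$ can also be verified numerically for a point charge. The sketch below adds up $E\,dr$ from $R$ outwards and compares the result with $\frac{k_eQ}{R}$. Two simplifications are assumptions of this sketch: "infinity" is replaced by a distance of $10^6R$, and the steps grow geometrically because the field changes fastest near the charge.

```python
K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def field_strength(Q, r):
    """Radial electric field E(r) = k_e*Q/r^2 of a point charge."""
    return K_E * Q / r**2

def potential_by_integration(Q, R, far_factor=1e6, steps=20000):
    """Approximate V(R) = -int_inf^R E dr = int_R^inf E dr with the midpoint rule."""
    ratio = far_factor ** (1.0 / steps)   # each step is `ratio` times farther out
    total, r_left = 0.0, R
    for _ in range(steps):
        r_right = r_left * ratio
        r_mid = 0.5 * (r_left + r_right)
        total += field_strength(Q, r_mid) * (r_right - r_left)
        r_left = r_right
    return total

Q, R = 5e-6, 0.4
print(f"integral: {potential_by_integration(Q, R):.1f} V, formula: {K_E * Q / R:.1f} V")
```

The small remaining difference comes from cutting the integral off at a finite distance.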

Explanations

Electric potential

A major issue in understanding the ideas of electromagnetism is to get an intuitive understanding of the concept of electric potential $V$. First, there is the naming problem. There are at least four other terms for the concept: voltage, potential difference, electromotive force and even electromotance! Next, we have the possible source of confusion with the concept of electric potential energy, which doesn't help the situation. Perhaps the biggest problem with the concept of electric potential is that it doesn't exist in the real world: like the electric field to which it is related, it is simply a construct of the mind, which we use to solve problems and do calculations.

Despite the seemingly insurmountable difficulty of describing the nature of something which doesn't exist, I will persist in this endeavour. I want to give you a proper intuition about voltage, because this concept will play an extremely important role in circuits. While it is true that voltage doesn't exist, energy does exist and energy is just $U=qV$. Voltage, therefore, is electric potential energy per unit charge, and we can talk about the voltage in the language of energy.

Every time you need to think about some electric potential, just imagine what would happen to a unit test charge $q=1$[C], and then think in terms of energy. If the potential difference between point (a) and point (b) is $V_{ab}=16$[V], this means that a charge of $1$[C] that goes from (a) to (b) will gain $16$[J] of energy. If you have some circuit with a $3$[V] battery in it, then each Coulomb of charge that is pumped through the battery gains $3$[J] of energy. This is the kind of reasoning we used in the opening example in the beginning of electrostatics, in which we calculated the kinetic energy of the electrons inside an old-school TV.

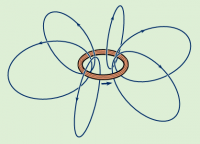

Field lines

We can visualize the electric field caused by some charge as electric field lines everywhere around it. For a positive charge ($Q>0$), the field lines will be leaving it in all directions towards negative charges or expanding to infinity. We say that a positive charge is a source of electric field lines and that a negative charge ($Q<0$) is a sink for electric field lines, i.e., it will have electric field lines going into it.

The diagram on the right illustrates the field lines for two isolated charges. If these charges were placed next to each other, then the field lines leaving the (+) charge would all curve around and go into the (-) charge.

Links

[ A guided tour of the electric field lines ]

http://web.mit.edu/8.02t/www/802TEAL3D/visualizations/electrostatics/index.htm

Electrostatic integrals

The electric field produced by a point charge $Q$ placed at the origin is given by $\vec{E}(\vec{r})=\frac{k_eQ}{r^2}\hat{r}$. What if the charge is not a point but some continuous object? It could be a line-charge, or some charged surface. How would you calculate the electric field produced by such an object $O$?

What you will do is cut up the object into little pieces $dO$, calculate the electric field produced by each piece, and then add up all the contributions. In other words you need to do an integral.

Concepts

- $Q$: the total charge. The units are Coulombs [C].

- $\lambda$: the linear charge density. The units are Coulombs per meter [C/m]. The charge density of a long wire of length $L$ is $\lambda = \frac{Q}{L}$.

- $\sigma$: the surface charge density. Units are [C/m$^2$]. The charge density of a disk with radius $R$ is $\sigma = \frac{Q}{\pi R^2}$. The charge on a sphere of radius $R$ made of conducting material will be concentrated on its surface and will have density $\sigma =\frac{Q}{4 \pi R^2}$.

- $\rho$: the volume charge density. Units are [C/m$^3$]. The charge density of a cube of uniform charge and side length $c$ is $\rho = \frac{Q}{c^3}$. The charge density of a solid sphere made of insulator with a uniform charge distribution will be $\rho = \frac{Q}{\frac{4}{3} \pi R^3}$.

One-over-r-squared quantities:

- $\vec{F}_e$: Electric force.

- $\vec{E}$: Electric field.

One-over-r quantities:

- $U$: electric potential energy.

- $V$: electric potential.

Integration techniques review

Both the formulas for electric force (field) and potential energy (electric potential) contain a denominator of the form $r\equiv |\vec{r}| = \sqrt{x^2 + y^2}$. As you can imagine, these kinds of integrals will be quite hairy to calculate if you don't know what you are doing.

But you know what you are doing! Well, you know if you remember your techniques of integration. Now I realize we saw this quite a long time ago so a little refresher is in order.

The reason why they make you practice all those trigonometric substitutions is that they will be useful right now. For example, how would you evaluate the integral \[ \int_{-\infty}^{\infty} \frac{1}{(1+x^2)^{\frac{3}{2}} } \ dx, \] if you were forced to – like on an exam question or something. Relax. You are not in an exam. I just said that to get your attention. The above integral may look complicated, but actually you will see that it is not too hard: we just have to use a trig substitution trick. You will see that all that time spent learning about integration techniques was not wasted.

Recall that the trigonometric substitution trick necessary to handle terms like $\sqrt{1 + x^2}$ is to use the identity: \[ 1 + \tan^2 \theta = \sec^2 \theta, \] which comes from $\cos^2 \theta + \sin^2 \theta = 1$ divided by $\cos^2 \theta$.

If we make the substitution $x=\tan\theta$, $dx=\sec^2\theta \ d\theta$ in the above integral we will get \[ 1 + x^2 = \sec^2 \theta. \] But we don't just have $1+x^2$, but $(1+x^2)^{\frac{3}{2}}$. So we need to take the $\frac{3}{2}$th power of the above equation, which is equivalent to taking the square root and then raising to the third power: \[ (1+x^2)^{\frac{3}{2}} = (\sec^2\theta)^{\frac{3}{2}} = \left( \sqrt{ \sec^2\theta} \right)^{3} = (\sec\theta)^{3} = \sec^3\theta. \] Next, we have to calculate the new limits of integration due to the change of variable $x=\tan\theta$. The upper limit $x_f=+\infty$ becomes $\theta_f = \tan^{-1}(+\infty)=\frac{\pi}{2}$ and the lower limit $x_i=-\infty$ becomes $\theta_i = \tan^{-1}(-\infty)=-\frac{\pi}{2}$.

Ok now let's see how all of this comes together: \[ \begin{align} \int_{x=-\infty}^{x=\infty} \frac{1}{(1+x^2)^{\frac{3}{2}} } \ dx &= \int_{ \theta=-\frac{\pi}{2} }^{ \theta=\frac{\pi}{2} } \frac{1}{(1+\tan^2\theta)^{\frac{3}{2}} } \sec^2 \theta \ d\theta \nl &= \int_{ -\frac{\pi}{2} }^{ \frac{\pi}{2} } \frac{1}{\sec^3\theta} \sec^2 \theta \ d\theta \nl &= \int_{ -\frac{\pi}{2} }^{ \frac{\pi}{2} } \cos \theta \ d\theta \nl &= \sin \theta \bigg|_{ -\frac{\pi}{2} }^{ \frac{\pi}{2} } = \sin\left( \frac{\pi}{2} \right) - \sin\left( - \frac{\pi}{2} \right) = 1 - (-1) = 2. \end{align} \]
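If you don't trust the algebra, you can check the result with a crude numerical integration. The sketch below evaluates the integral twice: once after the substitution, where the integrand is just $\cos\theta$, and once directly in $x$ over a large but finite interval $[-X,X]$. The midpoint rule and the cutoff $X=100$ are choices made for this illustration.

```python
import math

def midpoint_integral(f, a, b, steps=100000):
    """Approximate the integral of f from a to b with the midpoint rule."""
    h = (b - a) / steps
    return sum(f(a + (i + 0.5) * h) for i in range(steps)) * h

# After the substitution x = tan(theta) the integrand is cos(theta).
after_substitution = midpoint_integral(math.cos, -math.pi / 2, math.pi / 2)

# Direct integration in x, with the infinite limits truncated to [-X, X].
X = 100.0
direct = midpoint_integral(lambda x: (1 + x * x) ** -1.5, -X, X, steps=200000)

print(f"theta integral: {after_substitution:.6f}")
print(f"x integral on [-{X:g}, {X:g}]: {direct:.6f}")
```

Both values come out very close to $2$; the direct one is slightly smaller because the tails beyond $\pm X$ are left out.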

Exercise

Now I need you to put the book down for a moment and try to reproduce the above steps by practicing on the similar problem: \[ \int_{-\infty}^{\infty} \frac{a}{(a^2+x^2)^{\frac{3}{2}} } \ dx, \] where $a$ is some fixed constant. Hint: substitute $x = a \tan\theta$. This integral corresponds to the strength of the electric field at a distance $a$ from an infinitely long line charge. Ans: $\frac{2}{a}$. We will use this result in Example 1 below, so go take a piece of paper and do it.

The tan substitution is also useful when calculating the electric potential, but the denominator will be of the form $\frac{1}{(1+x^2)^{\frac{1}{2}} }$ instead of $\frac{1}{(1+x^2)^{\frac{3}{2}} }$. We show how to compute this integral in Example 3.

Formulas

Let $\vec{E} = ( E_x, E_y )=( \vec{E}\cdot \hat{x}, \vec{E}\cdot \hat{y} )$ be the electric field strength at some point $P$ due to the charge on some object $O$. We can calculate the total electric field by analyzing the individual contribution $dE$ due to each tiny part of the object $dO$.

The total field strength in the $\hat{x}$ direction is given by \[ E_x = \int dE_x = \int_O \vec{E}\cdot \hat{x}\ dO. \]

The above formula is too abstract to be useful. Think of it more as a general statement of the principle that the electric field due to the object as a whole is equal to the sum of the electric fields due to its parts.

Charge density

The linear charge density of an object of length $L$ with charge $Q$ on it is \[ \lambda = \frac{Q}{L}, \qquad \textrm{ [C/m] } \] where $\lambda$ is the Greek letter lambda which is also used to denote wavelength.

Similarly the surface charge density is defined as the total charge divided by the total area and the volume charge density as the total charge divided by the total volume: \[ \sigma = \frac{Q}{A} \ \ \ \left[ \frac{\textrm{C}} { \textrm{m}^2} \right], \qquad \rho = \frac{Q}{V} \ \ \ \left[ \frac{ \textrm{C} }{ \textrm{m}^3} \right], \] where $\sigma$ and $\rho$ are the Greek letters sigma and rho.

Examples

Example 1: Electric field of an infinite line charge

Consider a horizontal line charge of charge density $\lambda$ [C/m]. What is the strength of the electric field at a distance $a$ from the wire?

The wire has a line symmetry so we can choose any point along the wire, so long as it is $a$[m] away from it. Suppose we pick the point $P=(0,a)$ which lies on the $y$ axis. We want to calculate $\vec{E}({P}) = ( E_x, E_y )=( \vec{E}\cdot \hat{x}, \vec{E}\cdot \hat{y} )$.

Consider first the term $E_y$. It is given by the following integral: \[ \begin{align} E_y & = \int dE_y = \int d\vec{E} \cdot \hat{y} \nl & = \int_{x=-\infty}^{x=\infty} \vec{E}(dx) \cdot \hat{y} \nl & = \int_{x=-\infty}^{x=\infty} \frac{ k_e (\lambda dx)} { r^2} \hat{r} \cdot \hat{y} \nl & = \int_{x=-\infty}^{x=\infty} \frac{ k_e \lambda dx} { r^2} \hat{r} \cdot \hat{y} \nl & = \int_{-\infty}^{\infty} \frac{k_e \lambda}{(a^2+x^2)} ( \hat{r} \cdot \hat{y} ) \ dx \nl & = \int_{-\infty}^{\infty} \frac{k_e \lambda}{(a^2+x^2)} \left( \frac{ a }{ \sqrt{ a^2+x^2} } \right) \ dx \nl & = \int_{-\infty}^{\infty} \frac{k_e \lambda a}{(a^2+x^2)^{\frac{3}{2}} } \ dx. \end{align} \]

We showed how to compute this integral in the review section on integration techniques. If you did as I asked you, you will know that \[ \int_{-\infty}^{\infty} \frac{a}{(a^2+x^2)^{\frac{3}{2}} } \ dx \ = \ \frac{2}{a}. \]

The total electric field in the $y$ direction is therefore given by: \[ E_y = k_e \lambda \int_{-\infty}^{\infty} \frac{ a}{(a^2+x^2)^{\frac{3}{2}} } \ dx \ = \ \frac{ 2 k_e \lambda }{ a }. \]

By symmetry $E_x=0$, since there is an equal amount of charge to the left and to the right of the origin. Therefore, the electric field $\vec{E}({P})$ at the point $P$ at a distance $a$ from the line charge is given by $\vec{E}({P})=(E_x, E_y) = \left( 0, \frac{ 2 k_e \lambda }{ a } \right)$.
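The "cut the object into pieces and add up the contributions" idea of Example 1 translates directly into a loop. The sketch below chops a very long (but finite) wire into short pieces of charge $\lambda\,dx$, sums their fields at $P=(0,a)$, and compares the total with $\frac{2k_e\lambda}{a}$. The wire length, the number of pieces and the sample values of $\lambda$ and $a$ are assumptions made for this illustration.

```python
import math

K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def line_charge_field(lam, a, half_length=500.0, pieces=100000):
    """Field (Ex, Ey) at P=(0,a) from a wire along the x-axis with density lam [C/m]."""
    dx = 2 * half_length / pieces
    Ex = Ey = 0.0
    for i in range(pieces):
        x = -half_length + (i + 0.5) * dx   # position of this piece of the wire
        r2 = a * a + x * x                  # squared distance from the piece to P
        r = math.sqrt(r2)
        dE = K_E * lam * dx / r2            # field strength due to the charge lam*dx
        Ex += dE * (-x / r)                 # r_hat . x_hat
        Ey += dE * (a / r)                  # r_hat . y_hat
    return Ex, Ey

lam, a = 2e-9, 0.5
Ex, Ey = line_charge_field(lam, a)
print(f"Ex = {Ex:.3e}, Ey = {Ey:.4f}, formula: {2 * K_E * lam / a:.4f} [N/C]")
```

The $x$ components cancel in pairs, which is the symmetry argument made above, and $E_y$ matches the formula as long as the wire is much longer than $a$.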

Example 2: Charged disk

What is the electric field in the $z$ direction directly above a disk with charge density $\sigma$[C/m$^2$] and radius $R$ that lies in the $xy$-plane, centred at the origin?
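A possible way to attack Example 2 numerically is to cut the disk into thin rings. The sketch below assumes the field is evaluated at a height $z>0$ on the axis of the disk (the symbol $z$ for the height is introduced here, it is not given in the example). A ring of radius $s$ and width $ds$ carries charge $\sigma\,2\pi s\,ds$, and by symmetry only the $z$ component of its field survives. For comparison the code also evaluates the standard closed-form result $E_z = 2\pi k_e\sigma\left(1-\frac{z}{\sqrt{z^2+R^2}}\right)$, which is quoted here rather than derived.

```python
import math

K_E = 8.987551e9  # electric constant [N m^2 / C^2]

def disk_field_on_axis(sigma, R, z, rings=10000):
    """Sum the z-components of the fields of thin rings making up the disk."""
    ds = R / rings
    Ez = 0.0
    for i in range(rings):
        s = (i + 0.5) * ds                     # radius of this ring
        dQ = sigma * 2 * math.pi * s * ds      # charge on the ring
        Ez += K_E * dQ * z / (z * z + s * s) ** 1.5
    return Ez

def disk_field_closed_form(sigma, R, z):
    """Standard result for the on-axis field of a uniformly charged disk (z > 0)."""
    return 2 * math.pi * K_E * sigma * (1 - z / math.sqrt(z * z + R * R))

sigma, R, z = 1e-9, 1.0, 0.5
print(disk_field_on_axis(sigma, R, z), disk_field_closed_form(sigma, R, z))
```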

Example 3: Electric potential of a line charge of finite length

Consider a line charge of length $2L$ and linear charge density $\lambda$. The integral in that case will be \[ \begin{align} \int_{-L}^{L} \frac{1}{ \sqrt{ 1+x^2} } \ dx &= \int \frac{1}{ \sqrt{ 1+\tan^2\theta} } \sec^2 \theta \ d\theta \nl &= \int \frac{1}{\sec\theta} \sec^2 \theta \ d\theta \nl &= \int \sec \theta \ d\theta. \end{align} \] To proceed we need to remember a sneaky trick, which is to use the substitution $u = \tan\theta +\sec\theta$, $du=(\sec^2\theta + \tan\theta\sec\theta)\,d\theta$ and to multiply top and bottom by $\tan\theta +\sec\theta$. \[ \begin{eqnarray} \int \sec(\theta) \, d\theta &=& \int \sec(\theta)\ 1 \, d\theta \nl &=& \int \sec(\theta)\frac{\tan(\theta) +\sec(\theta)}{\tan(\theta) +\sec(\theta)} \ d\theta \nl &=& \int \frac{\sec^2(\theta) + \sec(\theta) \tan(\theta)}{\tan(\theta) +\sec(\theta)} \ d\theta\nl &=& \int \frac{1}{u} du \nl &=& \ln |u| \nl &=& \ln |\tan(\theta) + \sec(\theta) | \nl &=& \ln \left| x + {\sqrt{ 1 + x^2} } \right| \bigg|_{-L}^L \nl &=& \ln \left| L + {\sqrt{ 1 + L^2}} \right| - \ln \left| -L + {\sqrt{ 1 + L^2} } \right| \nl &=& \ln \left| \frac{ L + {\sqrt{ 1 + L^2} } } { -L + {\sqrt{ 1 + L^2} } } \right|. \end{eqnarray} \]

Exercise: The above calculation is showing the important calculus core of the problem. The necessary physical constants like $\lambda$ (charge density) and $a$ (distance from wire) are missing. Add them to obtain the final answer. You can check your answer in the link below.
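The calculus core of Example 3 can be sanity-checked numerically before you add the physical constants. The sketch below compares the logarithm formula with a midpoint-rule estimate of $\int_{-L}^{L}\frac{1}{\sqrt{1+x^2}}\,dx$ for one sample value of $L$. It deliberately leaves out $\lambda$, $k_e$ and $a$, so it does not give away the answer to the exercise; the function names are invented for this illustration.

```python
import math

def closed_form(L):
    """The logarithm formula obtained with the tan substitution."""
    root = math.sqrt(1 + L * L)
    return math.log((L + root) / (-L + root))

def numeric(L, steps=100000):
    """Midpoint-rule estimate of the integral of 1/sqrt(1+x^2) from -L to L."""
    h = 2 * L / steps
    return sum(1 / math.sqrt(1 + (-L + (i + 0.5) * h) ** 2) for i in range(steps)) * h

L = 3.0
print(f"formula: {closed_form(L):.6f}, numeric: {numeric(L):.6f}")
```

The same value can also be written as $2\sinh^{-1}(L)$, which is what `2 * math.asinh(L)` returns.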

Discussion

If you find the steps in this chapter complicated, then you are not alone. I had to think quite hard to get all the things right so don't worry: you won't be expected to do this on an exam on your own. In a homework problem maybe.

The important thing to remember is to split the object $O$ into pieces $dO$ and then keep in mind the vector nature of $\vec{E}$ and $\vec{F}_e$ (two integrals: one for the $x$ component of the quantity and one for the $y$ component).

An interesting curiosity is that the electric potential at a distance $a$ from an infinitely long wire is infinite. The potential scales as $\frac{1}{r}$ and so integrating all the way to infinity makes it blow up. This is why we had to choose a finite length $2L$ in Example 3.

Links

Gauss' law

We saw in the previous chapters that the electric field $\vec{E}$ is a useful concept for visualizing the electromagnetic effects produced by charged objects. More specifically, we imagined electric field lines which are produced by positive charges $+Q$ and end up on negative charges $-Q$. The number of field lines produced by a charge $+2Q$ is double the number of field lines produced by a charge $+Q$.

In this section, we learn how to count the number of field lines passing through a surface (electric flux) and infer facts about the amount of charge that the surface contains. The relationship between the electric flux leaving a surface and the amount of charge contained in that surface is called Gauss' law.

Consider the following reasoning. To keep the numbers simple, let us say that a charge of 1[C] produces exactly 10 electric field lines. Someone has given you a closed box $B$ with surface area $S$. Using a special instrument for measuring flux, you find that there are exactly 42 electric field lines leaving the box. You can then infer that there must be a net charge of 4.2[C] contained in the box.

In some sense, Gauss' law is nothing more than a statement of the principle of conservation of field lines. Whatever field lines are created within some surface must leave that surface at some point. Thus we can do our accounting in two equivalent ways: either we do a volume accounting to find the total charge inside the box, or we do a surface accounting and measure the number of field lines leaving the surface of the box.

Concepts

- $Q$: Electric charge of some particle or object. It is measured in Coulombs $[C]$.

- $S$: Some closed surface in a three dimensional space. ex: box, sphere, cylinder.

- $A$: The area of the surface $S$.

- $dA$: A small piece of surface area used for integration. We have that $A=\int_S dA$.

- $d\vec{A}=\hat{n}dA$: An oriented piece of area, which is just $dA$ combined with a vector $\hat{n}$ which points perpendicular to the surface at that point.

- $\Phi_S$: The electric flux, which is the total amount of electric field $\vec{E}$ passing through the surface $S$.

- $\varepsilon_0=8.8542\ldots\times 10^{-12}$ $\left[\frac{\mathrm{F}}{\mathrm{m}}\right]$: The permittivity of free space, which is one of the fundamental constants of Nature.

Instead of a point charge $Q$, we can have charge spread out:

- $\lambda$: linear charge density. The units are coulombs per meter [C/m].

The charge density of a long wire of length $L$ is $\lambda = \frac{Q}{L}$.

- $\sigma$: the surface charge density. Units are [C/m$^2$].

The charge density of a disk with radius $R$ is $\sigma = \frac{Q}{\pi R^2}$.

- $\rho$: the volume charge density. Units are [C/m$^3$].

The charge density of a cube of uniform charge and side length $c$ is $\rho = \frac{Q}{c^3}$.

Formulas

Volumes and surface areas

Recall the following basic facts about volumes and surface areas of some geometric solids. The volume of a parallelepiped (box) of sides $a$, $b$, and $c$ is given by $V=abc$, and the surface area is given by $A=2ab+2bc+2ac$. The volume of a sphere of radius $r$ is $V_s=\frac{4}{3}\pi r^3$ and the surface area is $A_s=4\pi r^2$. A cylinder of height $h$ and radius $r$ has volume $V_c=h\pi r^2$, and surface area $A_c=(2\pi r)h + 2 (\pi r^2)$.

Electric flux

For any surface $S$, the electric flux passing through $S$ is given by the following vector integral \[ \Phi_S = \int_S \vec{E} \cdot d\vec{A}, \] where $d\vec{A}=\hat{n} dA$, $dA$ is a piece of surface area and $\hat{n}$ points perpendicular to the surface.

I know what you are thinking: “Whooa there Johnny! Hold up, hold up. I haven't seen vector integrals yet, and this expression is hurting my brain because it is not connected to anything else I have seen.” Ok you got me! You will learn about vector integrals for real in the course Vector Calculus, but you already have all the tools you need to understand the above integral: the dot product and integrals. Besides, in a first electromagnetism course you will only have to do this integral for simple surfaces like a box, a cylinder or a sphere.

In the case of simple geometries where the strength of the electric field is constant everywhere on the surface and its orientation is always perpendicular to the surface ($\hat{E}\cdot\hat{n}=1$) the integral simplifies to: \[ \Phi_S = \int_S \vec{E} \cdot d\vec{A} = |\vec{E}| \int_S (\hat{E} \cdot \hat{n}) dA = |\vec{E}| \int_S 1 dA = |\vec{E}|A. \]

In all problems and exams in first year electricity and magnetism we will have $\Phi_S = |\vec{E}|A$ or $\Phi_S = 0$ (if $\vec{E}$ is parallel to the surface), so essentially you don't have to worry about the vector integral. I had to tell you the truth though, because this is the minireference way.

Gauss' law

Gauss' law states that the electric flux $\Phi_S$ leaving some closed surface $S$ is proportional to the total amount of charge $Q_{in}$ enclosed inside the surface: \[ \frac{Q_{in}}{\varepsilon_0} = \Phi_S \equiv \int_S \vec{E} \cdot d\vec{A}. \] The proportionality constant is $\frac{1}{\varepsilon_0}$, where $\varepsilon_0$ is the permittivity of free space.

Examples

Sphere

Consider a spherical surface $S$ of radius $r$ enclosing a charge $Q$ at its centre. What is the strength of the electric field, $|\vec{E}|$, on the surface of that sphere?

We can find this using Gauss' law as follows: \[ \frac{Q}{\varepsilon_0} = \Phi_S \equiv \int_S \vec{E} \cdot d\vec{A} = |\vec{E}| A = |\vec{E}| 4 \pi r^2. \] Solving for $|\vec{E}|$ we find: \[ |\vec{E}| = \frac{Q}{4 \pi \varepsilon_0 r^2} = \frac{k_eQ}{r^2}. \] I bet you have seen that somewhere before. Coulomb's law can be derived from Gauss' law, and this is why the electric constant is $k_e=\frac{1}{4\pi \varepsilon_0}$.
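As a quick numerical check of the relation $k_e=\frac{1}{4\pi\varepsilon_0}$, here is a minimal sketch that computes $k_e$ from the value of $\varepsilon_0$ quoted in the Concepts list and compares the Gauss' law form of the field with the Coulomb form. The function names and the sample values of `Q` and `r` are assumptions made for illustration.

```python
import math

EPSILON_0 = 8.8542e-12  # permittivity of free space [F/m], as quoted in the text

def coulomb_constant(eps0=EPSILON_0):
    """Electric constant k_e = 1 / (4 pi eps0)."""
    return 1.0 / (4 * math.pi * eps0)

def field_from_gauss(Q, r, eps0=EPSILON_0):
    """|E| on a sphere of radius r, from Q/eps0 = |E| * 4 pi r^2."""
    return Q / (eps0 * 4 * math.pi * r**2)

def field_from_coulomb(Q, r):
    """|E| from the familiar formula k_e Q / r^2."""
    return coulomb_constant() * Q / r**2

Q, r = 2e-6, 0.5  # hypothetical charge [C] and radius [m]
print(coulomb_constant())
print(field_from_gauss(Q, r), field_from_coulomb(Q, r))
```

The first printed value should be close to the familiar $9\times 10^{9}$, and the two field strengths should match up to floating-point rounding.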

Line charge and cylindrical surface

Consider a line charge of charge density $\lambda$ [C/m]. For example, a charged wire with $\lambda = 1$[C/m] has 1[C] of charge on each meter of it. What is the strength of the electric field at a distance $r$ from the wire?

This is a classic example of a bring your own surface (BYOS) problem: the problem statement didn't mention any surface $S$, so we have to choose it ourselves. Let $S$ be the surface of a cylinder of radius $r$ and height $h=1$[m] that encloses the line charge at its centre. We now write down Gauss' law for that cylinder: \[ \begin{align} \frac{\lambda (1 [\textrm{m}])}{\varepsilon_0} & = \Phi_S \equiv \int_S \vec{E} \cdot d\vec{A} \nl & = \vec{E}({r}) \cdot \vec{A}_{side} + \vec{E}_{top} \cdot \vec{A}_{top} + \vec{E}_{bottom} \cdot \vec{A}_{bottom} \nl & = (|\vec{E}|\hat{r}) \cdot \hat{r} 2 \pi r (1 [\textrm{m}]) + 0 + 0 \nl & = |\vec{E}| (\hat{r}\cdot \hat{r}) 2 \pi r (1 [\textrm{m}]) \nl & = |\vec{E}| 2 \pi r (1 [\textrm{m}]). \end{align} \]

Solving for $|\vec{E}|$ in the above equation we find \[ |\vec{E}| = \frac{ \lambda }{ 2 \pi \varepsilon_0 r } = \frac{ 2 k_e \lambda }{ r }. \]

You should also have seen this result before (Example 1 in electrostatic_integrals).
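The agreement between the Gauss' law result and the direct integration approach can be checked numerically. The sketch below sums the Coulomb contributions $k_e \lambda\, dx / (x^2+a^2)$ of small pieces of a very long but finite wire, keeping only the component perpendicular to the wire, and compares the total with $\frac{2k_e\lambda}{a}$. The rounded value of `K_E`, the wire half-length, and the number of slices are assumptions chosen for illustration.

```python
K_E = 8.9875e9  # electric constant [N m^2 / C^2], rounded

def field_gauss_line(lam, r):
    """|E| at distance r from an infinite line charge, from Gauss' law."""
    return 2 * K_E * lam / r

def field_integral_line(lam, a, half_length, n=200_000):
    """Perpendicular field at distance a from the middle of a wire that
    spans x in [-half_length, half_length], using a midpoint-rule sum."""
    dx = 2 * half_length / n
    total = 0.0
    for i in range(n):
        x = -half_length + (i + 0.5) * dx
        # Coulomb term 1/(x^2+a^2) times the projection a/sqrt(x^2+a^2)
        total += a * dx / (x * x + a * a) ** 1.5
    return K_E * lam * total

lam, a = 1e-9, 1.0  # hypothetical charge density [C/m] and distance [m]
print(field_gauss_line(lam, a), field_integral_line(lam, a, half_length=1000.0))
```

For a wire much longer than the distance $a$, the two numbers should differ only by a tiny relative amount, which shrinks as the wire gets longer.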

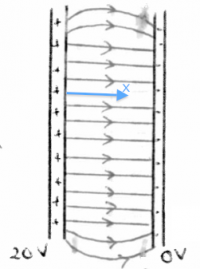

Electric field inside a capacitor

Assume you have two large metallic plates of opposite charges (a capacitor). The (+) plate has charge density $+\sigma$[C/m$^2$] and the (-) plate has $-\sigma$[C/m$^2$]. What is the strength of the electric field between the two plates?

Consider first a surface $S_1$ which makes a cross section of area $A$ that contains sections of both plates. This surface contains no net charge, so by Gauss' law, we conclude that there are no electric field lines entering or leaving this surface. An electric field $\vec{E}$ exists between the two plates and nowhere outside of the capacitor. This is, by the way, why capacitors are useful: they store a lot of energy in a confined space.

Consider now a surface $S_2$ which also makes a cross section of area $A$, but only goes halfway through the capacitor, enclosing only the (+) plate. The total charge inside the surface $S_2$ is $\sigma A$, therefore by Gauss' law \[ \frac{\sigma A}{\varepsilon_0} = |\vec{E}|A, \] we conclude that the electric field strength inside the capacitor is $|\vec{E}| = \frac{\sigma }{\varepsilon_0}$.

We will see in capacitors that this result can also be derived by thinking of the electric field as the spatial derivative of the voltage on the capacitor. You should check that the two approaches lead to the same answer for some physical device with area $A$ and plate separation $d$.
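Here is one way the suggested check could be sketched. It assumes the standard parallel-plate capacitance formula $C = \varepsilon_0 A/d$ and the relation $Q = CV$, neither of which is derived in this section, and it uses made-up values for $\sigma$, $A$ and $d$.

```python
import math

EPSILON_0 = 8.8542e-12  # permittivity of free space [F/m]

def field_from_gauss(sigma):
    """|E| between the plates from Gauss' law: sigma / eps0."""
    return sigma / EPSILON_0

def field_from_voltage(sigma, area, d):
    """|E| as voltage per unit distance, E = V / d."""
    charge = sigma * area
    capacitance = EPSILON_0 * area / d  # assumed parallel-plate formula
    voltage = charge / capacitance      # from Q = C V
    return voltage / d

sigma, area, d = 1e-6, 0.01, 1e-3  # hypothetical [C/m^2], [m^2], [m]
print(field_from_gauss(sigma), field_from_voltage(sigma, area, d))
```

Both approaches give the same number, and the area $A$ and separation $d$ cancel out of the final answer, as the formula $|\vec{E}| = \frac{\sigma}{\varepsilon_0}$ suggests.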

Explanations

Surface integral

The flux $\Phi_S$ is a measure of the strength of the electric field lines passing through the surface $S$. To compute the flux, we need the concept of directed area, that is, we split the surface $S$ into little pieces of area $d\vec{A} = \hat{n} dA$ where $dA$ is the surface area of a little piece of surface and $\hat{n}$ is a vector that points perpendicular to the surface. We need this kind of vector to calculate the flux leaving through $dA$: \[ d\Phi_{dA} = \vec{E} \cdot \hat{n} dA, \] where the dot product is necessary to account for the relative orientation of the piece of surface area and the direction of the electric field. For example, if the piece-of-area-perpendicular vector $\hat{n}$ points outwards on the surface and an electric field of strength $|\vec{E}|$ is leaving the surface, then the flux integral will be positive. If, on the other hand, electric field lines are entering the surface, the integral will come out negative since $\vec{E}$ and $d\vec{A}$ will point in opposite directions. Of particular importance are surfaces where the electric field lines are parallel to the surface: in that case $\vec{E} \cdot \hat{n} dA = 0$.
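The three cases described above (field leaving, field entering, field parallel to the surface) can be illustrated with a small sketch for a flat patch in a uniform field, where the flux is just $\vec{E}\cdot\hat{n}\,A$. The helper names and the numbers are hypothetical.

```python
def dot(u, v):
    """Dot product of two vectors given as tuples of components."""
    return sum(ui * vi for ui, vi in zip(u, v))

def flux_through_patch(E, n_hat, area):
    """Flux of a uniform field E through a flat patch with unit normal n_hat."""
    return dot(E, n_hat) * area

n_hat = (0.0, 0.0, 1.0)  # outward normal of the patch, pointing along +z
area = 2.0               # patch area [m^2]

print(flux_through_patch((0.0, 0.0, 5.0), n_hat, area))   # field leaving: 10.0
print(flux_through_patch((0.0, 0.0, -5.0), n_hat, area))  # field entering: -10.0
print(flux_through_patch((5.0, 0.0, 0.0), n_hat, area))   # field parallel: 0.0
```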

Implications

Have you ever wondered why the equation for the strength of the electric field $|\vec{E}(r)|$ as a function of the distance $r$ is given by the formula $|\vec{E}(r)|=\frac{k_eQ}{r^2}$? Why is it one-over-$r$-squared exactly? Why not one over $r$ to the third power or the seventh?

The one-over-$r$-squared comes from Gauss' law. The flux $\Phi$ is a conserved quantity in this situation. The field lines emanating from the charge $Q$ (assuming $Q$ is positive) flow outwards away from the charge and uniformly in all directions. Since we know that $\Phi = |\vec{E}|A_s$, it must be that $|\vec{E}| \propto 1/A_s$. The surface area of a sphere is $A_s = 4\pi r^2$, so $|\vec{E}| \propto 1/r^2$.

Imagine now applying Gauss' law to a small surface which tightly wraps the charge (small $r$) and a larger spherical surface (big $r$). The total flux of electric field through both surfaces is the same. The flux near the charge is due to a very strong electric field that flows out of a small surface area. The flux far away from the charge is due to a very weak field over a very large surface area.
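The trade-off between field strength and surface area can be made concrete with a short sketch. It evaluates $|\vec{E}| = \frac{k_eQ}{r^2}$ and $A_s = 4\pi r^2$ at a few radii and multiplies them; the charge and the radii are arbitrary illustration values.

```python
import math

EPSILON_0 = 8.8542e-12                # permittivity of free space [F/m]
K_E = 1.0 / (4 * math.pi * EPSILON_0) # electric constant

def field_strength(Q, r):
    """|E| at distance r from a point charge Q."""
    return K_E * Q / r**2

def sphere_area(r):
    """Surface area of a sphere of radius r."""
    return 4 * math.pi * r**2

def flux(Q, r):
    """Flux through a sphere of radius r centred on the charge."""
    return field_strength(Q, r) * sphere_area(r)

Q = 1e-9  # hypothetical charge [C]
for r in (0.01, 1.0, 100.0):
    print(r, field_strength(Q, r), sphere_area(r), flux(Q, r))
print(Q / EPSILON_0)
```

The field strength and the area change by many orders of magnitude from one row to the next, but their product stays the same and equals $\frac{Q}{\varepsilon_0}$.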

Discussion

So what was this chapter all about? We started with crazy stuff like vector integrals (more specifically surface integrals) denoted by fancy Greek letters like $\Phi$, but in the end we derived only three results which we already knew. What is the point?

The point is that we have elevated our understanding from the formula level to a principle. Gauss' law is a super-formula: a formula that generates formulas. The understanding of such general principles that exist in Nature is what physics is all about.

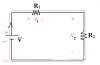

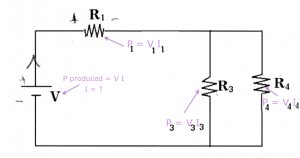

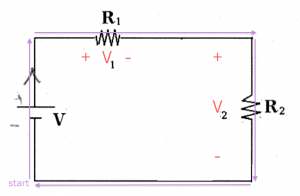

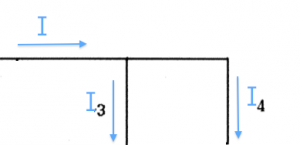

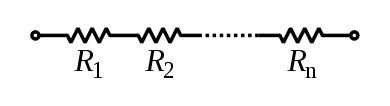

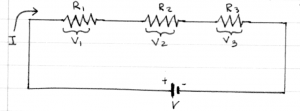

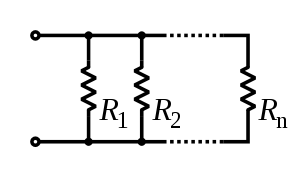

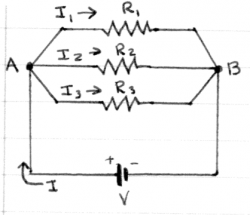

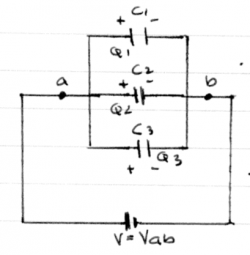

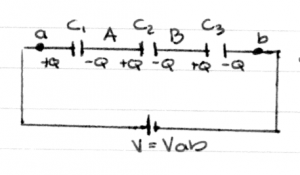

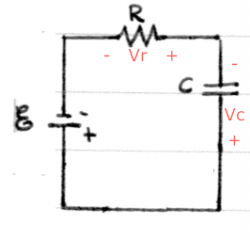

Circuits

Electric circuits are contraptions in which electric current flows through various pipes (wires) and components (resistors, capacitors, inductors, on-off switches, etc.). Because the electric current cannot escape the wire, it is forced to pass through each of the components along its path.

Your sound system is a circuit. Your computer power supply is a circuit. Even the chemical reactions involved in neuronal spiking can be modelled as an electric circuit.

Concepts

$I$: the electric current. It flows through all circuit components. We measure current in Amperes $[A]$.

We use wires to guide the flow of currents: to make them go where we want.

* {{ :electricity:circuit-element-voltage.png|}}

$V$: the electric potential difference between two points. We say //voltage// for short instead of "electric potential difference". There is no notion of "absolute" voltage: we only measure the potential difference between //two// points. Thus you should always label a (+) side and a (-) side when reporting a voltage. Conveniently, the unit of voltage is the Volt [V], after Volta.

* $P$: the power consumed or produced by some component. Measured in Watts [W].

* $R$: the //resistance// value of a resistor. For resistors, the voltage across the leads is linearly related to the current flowing in the resistor. We call //resistance// the ratio between the voltage and the current: \[ R=\frac{V}{I}. \] We measure resistance in Ohms [$\Omega$].
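As a small illustration of the definition $R=\frac{V}{I}$, the sketch below computes a resistance from a measured voltage and current, and then uses the same linear relation the other way around. The function names and the numbers are made up for the example.

```python
def resistance(voltage, current):
    """Resistance [Ohm] as the ratio of voltage [V] to current [A]."""
    if current == 0:
        raise ValueError("current must be nonzero to define R = V / I")
    return voltage / current

def voltage_across(resistance_value, current):
    """Voltage [V] across a resistor carrying the given current [A]."""
    return resistance_value * current

R = resistance(12.0, 2.0)      # hypothetical measurement: 12 V and 2 A
print(R)
print(voltage_across(R, 0.5))  # same resistor with a smaller current
```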

Circuit components

The basic building blocks of circuits are called electric components. In this section we will learn how to use the following components.

\[ V_{\text{wire}} = \text{any}, \quad \qquad I_{\text{wire}} = \text{any} \]

Battery: This is a voltage source. It can provide any current, but always keeps a constant voltage of $V$ volts.

\[

V_{\text{batt}} = V, \qquad \qquad I_{\text{batt}} = \text{any}

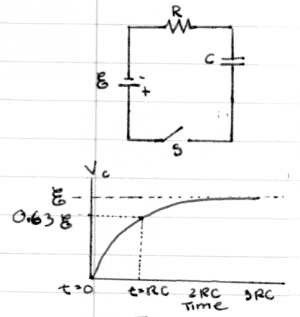

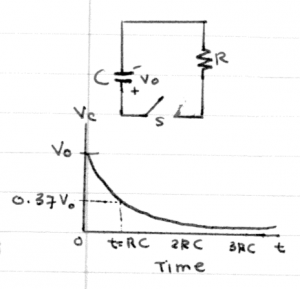

\]