There is an interesting discussion about network protocols going on at hacker news. In just a few posts some very knowledgeable people stepped in to explain what is going on. I saved the URL in the Links section of /miniref/comp/network_programming but it made me think about how much better informal explanation is to formal explanations.

Here you have hackers talking to other hackers. Whether it is a javascript-wielding young blood or an old dude who writes server-side stuff in C, all these people need to send data on the network and know of some some protocols for doing that: TCP:HTTP for web dev mostly while systems people probably think more in terms of (TCP:*).

Everyone jumps in to check what is going on on the HN discussion. And then suddenly learning happens. The discussion is a bit disorganized (shown as discussion tree) but let me give you the walk through.

First chetanahuja tells it like it is:

IP layer is for addressing nodes on the internet and routing packets to them in a stateless manner (more or less… I’m not counting routing table caches and such as “state”). TCP […] are built on top of the IP layer to provide reliable end-to-end data transfers in some sort of session based mechanism.

The key thing to know is that IP is a best-effort protocol. If you send an IP packet from computer A to computer B, the network will try to deliver it but there are not guarantees that it will succeed. No problem though, we can build a reliable protocol (the Transmission Control Protocol) on top of the unreliable one. This is why the Internet is usually referred to as TCP/IP, not just IP even thought IP stands for Internet Protocol. TCP/IP is the internet made reliable.

TCP is important because it allows for reliable communication: When A sends some data to B, B will reply with an ACKnowledge packet to tell A when he received the data. Reliability comes from the fact that A will retransmit all the packets for which no ACKs are received (the sender assumes these packets got lost). The other thing the TCP protocol gives you is the notion of a port — a multiplexing mechanism for running multiple networks services on the same machine. Port 80 is the HTTP port (web browsing). When you type in 11.22.33.44 into the browser, your browser will send a TCP packet to port 80 on 11.22.33.44:80, where the TCP port is separated by a colon. Another important port is port 25 (SMTP) which is used for email. When an email to user@11.22.33.44 is to be delivered, a connection will made to 11.22.33.44:25.

Sometimes you don’t want to have so much transmission control overhead. Imagine that you are sending some voice data so you want to send as many packets (maybe use forward error correcting codes) but you don’t want to bother with retransmission of lost packets. The voice data simply isn’t useful if it is not delivered on time. In such cases we would prefer a more basic protocol which doesn’t isolate us from the unreliability of IP.

This protocol is called UDP and it is really barebones. UDP is basically IP with some added port numbers (and error detection checksums). From now on we can’t simply talk about “port 80″ on host but we must say whether we mean port 80 in the tcp protocol (TCP:80) or in the udp protocol (UDP#80).

Speaking of UDP ports, let me tell you about a really important one. The (206.190.36.45, 98.138.253.109, 98.139.183.24).” My browser will connect to one of these IPs (over HTTP = TCP:80) chosen at random.

Another useful UDP service is DHCP (dynamic host configuration protocol). This is the magical process by which you are automatically assigned an IP address when you join a network. DHCP bootstraps the communication (you joined a new network, ok, but what is the network number for this network? who should you talk to? What IP address should you respond to?). Either you know this information (someone gave it to you on a piece of paper [sysadmin]) or you can make a DHCP request (which is UDP broadcast) and a DHCP server will respond to you and assign you an IP address, tell you what the network number is and tell you which router to talk to to go towards the Internet (the route).

Ok so everyone knows the basics now, but HN doesn’t just give you the basics — it gives you the advanced stuff too. What are the current problems with TCP/IP?

advm says:

Maybe TCP’s issues aren’t apparent when you’re using it to download page assets from AWS over your home Internet connection, but they become apparent when you’re doing large file transfers between systems whose bandwidth-delay products (BDPs) greatly exceed the upper limit of the TCP buffers on the end systems.

This may not be an issue for users of consumer grade Internet service, but it is an issue to organizations who have private, dedicated, high-bandwidth links and need to move a lot of data over large distances (equating to high latency) very quickly and often; CDNs, data centers, research institutions, or, I dunno, maybe someone like Google.

The BDP and the TCP send buffer size impose an upper limit on the window size for the connection. Ideally, in a file transfer scenario, the BDP and the socket’s send buffer size should be equal. If your send buffer size is lower than the BDP, you cannot ever transfer at a greater throughput than buffer_size / link_latency, and thus you cannot ever attain maximum bandwidth. I can explain in more detail why that’s true if you want, but otherwise here’s this: http://www.psc.edu/index.php/networking/641-tcp-tune

Unfortunately for end systems with a high BDP between them, most of the time the maximum send buffer size for a socket is capped by the system to something much lower than the BDP. This is a result of the socket implementation of these systems, not an inherent limitation of TCP.

An accepted user-level solution to this issue is to use multiple sockets in parallel, but that has its own issues, such as breaking fairness and not working well with the stream model. I can explain this more if you want, too, just let me know.There are other problems with TCP, such as

slow start being, well, slow to converge on high-BDP networks,

bad performance in the face of random packet loss (e.g., TCP over Wi-Fi),

congestion control algorithms being too conservative (IMO, not everyone needs to agree on the same congestion control protocol for it to work well, it just needs to converge to network conditions faster, better differentiate types of loss, and yield to fairness more quickly),

TCP features such as selective ACKs not being widely used,

default TCP socket settings sucking and requiring a lot of tuning to get right,

crap with NAT that can’t be circumvented at the user level (UDP-based stream protocols can do rendezvous connections to get around NAT), and more.People write whole papers on all these things. Problem is most of the public research exists as a shitty academic papers you wouldn’t probably bother reading anyway, and most of the people actually studying this stuff in-depth and coming up with solutions are private researchers and engineers working for companies like Google.

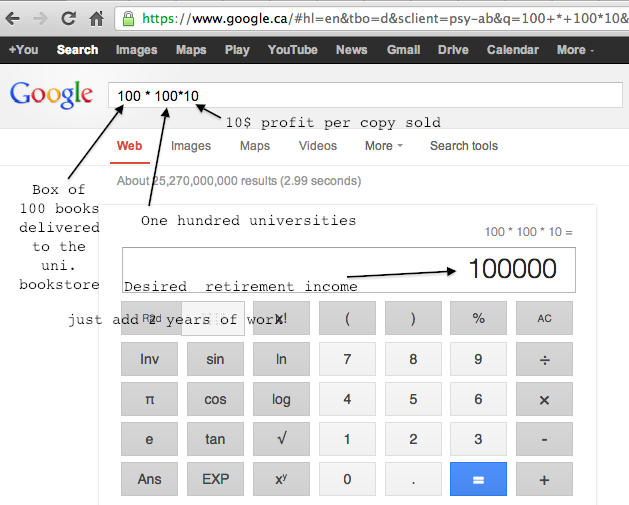

The last paragraph is a good reason why there should be a “No BS guide to computer systems”. I bet I can show services at all layers of the OSI stack and really go into details. The sockets are pretty cool.

pjscott describes the crypto stack in just one sentence:

Their crypto stuff looks pretty reasonable. Key exchange uses ECDH with either the P-256 or curve25519 polynomials. Once the session key is established, it’s encrypted with AES-128 and authenticated with either GCM or HMAC-SHA256. None of this is implemented yet, but it’s at least cause for hope.

latitude also gives other considerations about doing crypto over the Internet.

I’ll tell you a dirty little secret of the protocol design.

Say, you want to design a protocol with reliable delivery and/or loss detection. You will then have ACKs, send window and retransmissions. Guess what? If you don’t follow windowing semantics of TCP, then one of two things will happen on saturated links – either TCP will end up with all the bandwidth or you will.

So – surprise! – you have no choice but to design a TCP clone.That said, there is a fundamental problem with TCP, when it’s used for carrying secure connections. Since TCP acts as a pure transport protocol, it has no per-packet authentication and so any connection can be trivially DoS’d with a single fake FIN or RST packet. There are ways to solve this, e.g. by reversing the TCP and security layers and running TCP over ESP-over-UDP or TLS-over-UDP (OpenVPN protocol). This requires either writing a user-space TCP library or doing some nasty tunneling at the kernel level, but even as cumbersome as this is, it’s still not a reason enough to re-invent the wheel. Also, if you want compression, it’s readily available in TLS or as a part of IPsec stack (IPcomp). If you want FEC, same thing – just add a custom transform to TLS and let the client and server negotiate if to use it or not.

I mean, every network programmer invents a protocol or two in his lifetime. It’s like a right of passage and it’s really not a big deal.

I learned stuff today. I hope you guys learned something too.